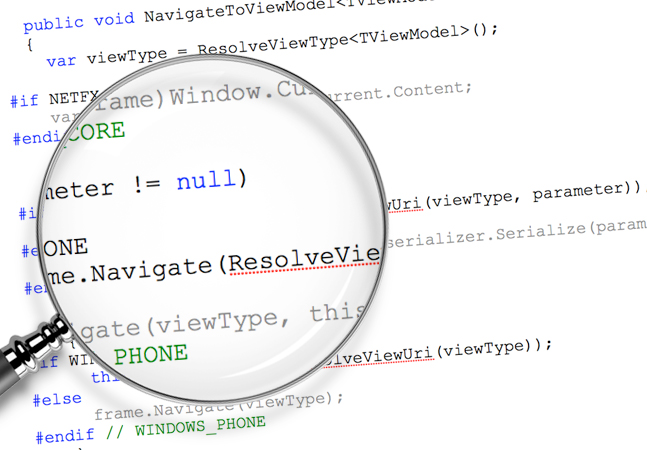

You wouldn't really name a variable "x," would you?

But it happens.

And it's not just "x." It's "usrdb," "item," "foo," "test," "blah" and more. These are real variable names that have been encountered in production-grade codebases.

"And that's just scary!" says Adrienne Braganza-Tacke.

The senior developer advocate at Cisco has become a specialist in naming variables, which is actually more complicated and important than it may seem at first. Luckily, she's bringing her expertise to the Visual Studio Live! developer conference in Chicago, where she'll present a session titled "Variables of the Veracious Variety: How to Better Name your Variables" on April 30, 2024.

The very existence of such a presentation brings to mind the famous quote by Phil Karlton: "There are only two hard things in computer science: cache invalidation and naming things."

Attendees of the introductory/intermediate 75-minute session won't be learning anything about cache invalidation, but they will be learning about the importance of naming things and how to do it better. The session promises that attendees will:

- Understand what makes variable naming difficult

- Recognize what makes a variable clear, concise, and consistent

- Explore variable naming patterns for almost any type of variable

We caught up with Braganza-Tacke to get a preview of her session, and to learn more about the importance of naming variables in software development.

VisualStudioLive!: What inspired you to present a session on naming variables?

Braganza-Tacke: I tend to talk about topics that I think every software developer should be knowledgeable about, and variable naming is one of them. What really inspired me to put together a whole talk, though, was just how many bad variable names there are in real-world applications. x, usrdb, item, foo, test, blah ... these are real variable names I've encountered in production-grade codebases. And that's just scary! As with most of my talks, there's a lot of common software development sense that's not so common. That's why I'm putting my decade of experience, team-tested trials, and actionable advice together into one nice talk for anyone who wants to level up their variable naming!

What are some common challenges developers face when naming variables, and why is it often considered one of the harder tasks in computer science?

Typically, it's really hard to get the essence of a concept down to a single word or very short phrase; how do you convey a customer that has surpassed the maximum number of refund transactions allowed in a month as clearly and concisely as possible? Now, take that thought experiment and consider that different words mean different things to different people. The same words can even have different meanings or connotations within different contexts. Add onto that the global nature of software development, where English is not always the first language of the developer.
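As an editorial illustration (not an example from the talk), here is one way the refund thought experiment might move from a vague name to an explicit one, sketched in TypeScript. The `Customer` shape, its field names and the limit of three refund transactions per month are all hypothetical assumptions.

```typescript
// Hypothetical customer shape, assumed for illustration only.
interface Customer {
  id: string;
  refundTransactionsThisMonth: number;
}

// Assumed business rule: at most 3 refund transactions per month.
const MAX_REFUND_TRANSACTIONS_PER_MONTH = 3;

// Vague: "check" says nothing about what is being checked or why.
function check(c: Customer): boolean {
  return c.refundTransactionsThisMonth > MAX_REFUND_TRANSACTIONS_PER_MONTH;
}

// Explicit: the name states the concept, the limit and the time window.
function hasExceededMonthlyRefundLimit(customer: Customer): boolean {
  return customer.refundTransactionsThisMonth > MAX_REFUND_TRANSACTIONS_PER_MONTH;
}

const customers: Customer[] = [
  { id: "c-100", refundTransactionsThisMonth: 1 },
  { id: "c-200", refundTransactionsThisMonth: 5 },
];

// The call site now reads almost like the original English sentence.
const customersOverMonthlyRefundLimit = customers.filter(hasExceededMonthlyRefundLimit);
console.log(customersOverMonthlyRefundLimit.map((customer) => customer.id));
```

Both functions compute the same thing; only the explicit one tells a reader what that thing is without opening the function body.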

"Developers are basically asked to choose variable names that convey a concept that is also explicitly clear to their team, can't be confused with other concepts, and makes sense within the context of the codebase they are working in."

"Developers are basically asked to choose variable names that convey a concept that is also explicitly clear to their team, can't be confused with other concepts, and makes sense within the context of the codebase they are working in."

Adrienne Braganza-Tacke, Senior Developer Advocate, Cisco

Taking all of this into consideration, developers are basically asked to choose variable names that convey a concept that is also explicitly clear to their team, can't be confused with other concepts, and makes sense within the context of the codebase they are working in. While it's definitely more of an art than an exact science, these challenges tend to make good variable naming a difficult task for developers.

In your view, what are the key characteristics that make a variable name clear, concise, and consistent? Could you provide an example of a well-named variable and explain why it's effective?

I'd answer, but I'd hate to spoil my talk 😉 Come listen to it at Visual Studio Live instead!

Can you share a before-and-after example where you improved a variable name and what your thought process and rationale were behind the change?

We actually go through a few examples in my talk where we improve it in real-time with the audience! In essence, we take a very vague, ambiguous and concept-void variable name and slowly iterate on it to become more concise, meaningful and context-filled.

What is one example guideline or best practice you would recommend for developers to follow when naming variables to ensure clarity and consistency?

When naming variables that are integers, be explicit! For example, say you're naming a variable that holds the amount of delay (in milliseconds) for a tooltip. Instead of tooltipDelay, a better name could be tooltipDelayInMilliseconds. When you're dealing with a number or count of something, say so! For example, if you have a variable that holds how many accounts are currently being requested, numberOfAccounts or numberOfRequestedAccounts is much more explicit than just accounts. Yes, the variable names are a bit longer. However, the cognitive load you remove for that extra effort to make them explicit can make a world of difference when reading, re-reading and understanding code.
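Here is a minimal TypeScript sketch of the two before-and-after pairs described above. The 750 ms value, the list of requested account IDs and the `setTimeout` call site are assumptions added for illustration; only the variable names come from the interview.

```typescript
// Before: is 750 milliseconds? Seconds? The reader has to guess.
const tooltipDelay = 750;

// After: the unit is part of the name, so it can't be misread.
const tooltipDelayInMilliseconds = 750;

// Hypothetical IDs of the accounts currently being requested.
const requestedAccountIds: string[] = ["acct-1", "acct-2", "acct-3"];

// Before: "accounts" could be a list, a map or a count.
const accounts = requestedAccountIds.length;

// After: clearly a count, and clearly a count of requested accounts.
const numberOfRequestedAccounts = requestedAccountIds.length;

// At the call site, setTimeout expects milliseconds, and the name confirms it.
setTimeout(() => {
  console.log(`Showing tooltip for ${numberOfRequestedAccounts} requested accounts`);
}, tooltipDelayInMilliseconds);
```

The values are identical before and after; the only thing that changes is how much a reader has to infer.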

For developers who are just starting out, what strategies or exercises can they use to develop their skills in naming variables effectively? How can they get better at this seemingly simple yet complex task?

Go back to some code you've written in the past and see if there are variables that you can improve. Are there parts that you re-read or have trouble understanding? Would a more meaningful variable name help? If so, change those variables! Another thing you can do: Moving forward, resist the temptation to use "easy" variables. As you write code, take some time to think of a more meaningful variable name than temp, user, item, or other common concepts. Instead, consider the domain you are in, what the code is doing, and what concepts you are trying to convey. You can think of it as making your code a bit more wordy or verbose, but in reality, you're making it more readable and robust. And you're helping your future self when you have to re-read it in a few months; you'll know exactly what you meant by being so explicit!

Note: Those wishing to attend the conference can save hundreds of dollars by registering early, according to the event's pricing page. "Register for VSLive! Chicago by the March 1 Super Early Bird deadline to save up to $400 and secure your seat for intensive developer training in Chicago!" said the organizer of the developer conference, which is presented by the parent company of Visual Studio Magazine.

Posted by David Ramel on 02/20/2024

Creating AI-powered applications is a natural fit for the Microsoft Azure cloud, but sorting out all the different options for the backing data stores can seem daunting.

All developers are looking for an edge to build applications that are data-driven and harness the power of AI, and Azure Databases provide a range of options for secure, scalable and highly available data applications using all the latest languages.

Microsoft's "Databases on Azure" guidance sums up many of the different options: "Develop AI-ready, trusted applications with a range of relational and non-relational databases and keep your focus on application development and innovation, not database management. Intelligence built-in helps automate management tasks like high availability, scaling, and query performance tuning so your applications are always on and ready. Trust Azure for built-in data security controls, broad regional coverage, and more compliance offerings to help strengthen your security posture and support your business growth."

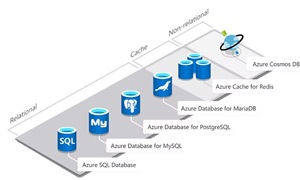

Azure Databases (source: Microsoft).

Some of the options Azure offers include:

Purpose-Built Cloud Databases

- Azure Cosmos DB: Fast, distributed NoSQL and relational database at any scale -- Develop high-performance applications of any size or scale with a fully managed and serverless distributed database supporting open source PostgreSQL, MongoDB, and Apache Cassandra as well as Java, Node.js, Python, and .NET.

- Azure SQL Database: Flexible, fast, and elastic SQL database for your new apps -- Build apps that scale with a fully managed and intelligent SQL database built for the cloud. Grow your applications with near-limitless storage capacity and responsive serverless compute, backed by AI-based advanced security.

Open Source Databases

- Azure Database for PostgreSQL: Fully managed, intelligent, and scalable PostgreSQL database -- Innovate faster with a fully managed PostgreSQL database service for open-source developers building scalable and secure enterprise-ready apps.

- Azure Database for MySQL: Scalable, open-source MySQL database -- Develop apps with a fully managed community MySQL database service delivering high availability, mission-critical performance, and elastic scaling for open-source developers building mobile and web apps.

- Azure Database for MariaDB: Fully managed, community MariaDB -- Build with a fully managed community MariaDB database service delivering high availability and elastic scaling for open-source developers.

- Azure Cache for Redis: Distributed, in-memory, scalable caching -- Accelerate your application's data layer with an in-memory data store that offers increased speed and scale for web applications, backed by the open-source Redis server.

Sorting out all those options and choosing the right tech for your particular application is so important it's the topic of a keynote address at the upcoming Visual Studio Live! developer conference slated for March in Las Vegas.

The keynote, titled "Developing Modern Data Applications Using the Power of AI with Azure Databases," will show you when and where to use the family of Azure Databases including Azure SQL Database, Azure Database for PostgreSQL, Azure Cosmos DB, and Azure Database for MySQL.

"You will see how to build applications to power AI applications driven by data and also AI technologies that speed up your development and improve quality with Microsoft Copilot technologies," said Bob Ward, one of the keynote presenters. The Principal Architect for Microsoft Azure Data will be presenting along with Davide Mauri, Principal Product Manager at Microsoft.

"You will also see various new options on how to use new techniques to access data with less code and integrate your data with Microsoft Fabric," Ward said.

Attendees are promised they will learn:

- When and where to use various Azure Database choices

- How to build data-driven AI applications and how to use Copilot experiences to improve the developer experience

- New experiences on how to access your data to save time and code

We recently caught up with Ward to learn more about his March 6 keynote in a short Q&A.

VSLive! You have such an impressive background. What inspired you to first get involved in data-driven development?

Ward: My first data development projects were on Unix systems writing C code with Ingres (the predecessor of today's PostgreSQL). I was working on healthcare applications, and I saw from that moment how important data is to any business or application. And I found out it was helpful to not just learn to build great code but learn the database side as well to write efficient queries and understand how a database worked. Learning these skills together really helped give me so many career opportunities.

With various Azure Database options like Azure SQL Database, Azure Database for PostgreSQL, Cosmos DB and Azure Database for MySQL, how does a developer determine the best choice for a particular data-driven application?

I realize looking at this lineup can feel a bit overwhelming. We will talk more about this in the keynote, but here is a way to look at it. If you like SQL Server and this is your preferred choice, then Azure SQL Database is perfect for you. But I have some customers who prefer an open source database and still want that "relational" feel. So I tell them: if you want to go that route, we have excellent options for managed cloud databases such as Azure PostgreSQL or MySQL, depending on your preference and experience. Azure Cosmos DB has traditionally been thought of as a "NoSQL" database. If you love working with native JSON data, you can easily use Cosmos DB. Cosmos DB now also offers experiences including integration with PostgreSQL and MongoDB.

"The great thing about all of these database options is that they take advantage of the power of Azure. This includes experiences for your favorite APIs and managed security, scalability, and availability. But most importantly all of them can be used to build new AI data-driven applications integrating with services like Azure OpenAI. We will talk about this in the keynote and have demos for you to see it in action."

"The great thing about all of these database options is that they take advantage of the power of Azure. This includes experiences for your favorite APIs and managed security, scalability, and availability. But most importantly all of them can be used to build new AI data-driven applications integrating with services like Azure OpenAI. We will talk about this in the keynote and have demos for you to see it in action."

Bob Ward, Principal Architect, Microsoft Azure Data, Microsoft

The great thing about all of these database options is that they take advantage of the power of Azure. This includes experiences for your favorite APIs and managed security, scalability, and availability. But most importantly all of them can be used to build new AI data-driven applications integrating with services like Azure OpenAI. We will talk about this in the keynote and have demos for you to see it in action.

In your personal experience, can you share one example of how GitHub Copilot and Chat improve the development process and enhance quality?

I can't wait to show some of the innovations here for developers. For me, GitHub Copilot's ability to help me get started building data applications is already incredible, all right within the comfort of tools like Visual Studio or VS Code. But there are some other new experiences people may not know about -- our Microsoft Copilot story -- that will help developers. And we can't wait to show it to you during the keynote.

What future trends do you foresee in the integration of AI and database technologies?

Right now it feels like the huge need is to build generative AI applications, but with their own data. And there are many different methods to do this. So, what I see in the future is a merging of these methods into a smaller set of options that give developers the best way to build these applications quickly. I also see the concept of hybrid searching and even the use of small language models becoming more popular over time. And per the previous question on Copilots, I believe we will see an explosion of this to help developers build and ship code faster, with code that is more efficient, performant and secure and has fewer bugs.

What do you hope people take away from your keynote?

The most important takeaway I want people to get from the keynote is that Azure Databases all-up are fully managed cloud database services you can rely on today and in the future to build intelligent AI data-driven applications. Our team is committed to staying on top of all the new innovations in AI and ensuring developers have what they need for real-world applications that are data driven using the power of AI without having to give up on the tried-and-true database capabilities of security, scalability and availability.

Note: Those wishing to attend the conference can save hundreds of dollars by registering early, according to the event's pricing page. "Register for VSLive! Las Vegas by the Feb. 9 Extended Early Bird Deadline to save $300 and secure your seat for intensive developer training in exciting Las Vegas!" said the organizer of the developer conference.

Posted by David Ramel on 02/05/2024

Hey, everyone! I'm Mickey Gousset, a staff DevOps architect at GitHub, and I am thrilled to be contributing to the VSLive! blog as a monthly how-to columnist. While every month you can expect tips, tricks and best-practices advice from the development world, for this installment, I thought I'd share some of my predictions for 2024 in the field of software development.

Predicting the future of software development is always an intriguing task, as the field is rapidly evolving. Here are my top three predictions for the software development landscape in 2024.

1. The Rise of AI-Driven Development

OK, you knew this was going to be at the top of the list. ChatGPT took the world by storm in 2023. AI technologies, like machine learning and natural language processing, are becoming sophisticated enough to assist in code generation, bug fixes and even in making design decisions.

In 2024, tools like GitHub Copilot that suggest code snippets will become more advanced, helping reduce development time and improve code quality. AI will play a more integral role in software development, from initial design to testing and deployment. AI-driven development tools will continue to enhance developer productivity and creativity.

If you're curious about all this, look into attending VSLive! Las Vegas this year, where we have an entire track dedicated to "cutting-edge AI."

2. Growth in Cross-Platform Development

The demand for cross-platform development tools will continue to increase, as businesses seek to target multiple platforms (iOS, Android, Web, desktop) simultaneously.

As the mobile market continues to grow and the lines between desktop and mobile blur, developers are looking for efficient ways to build applications for multiple platforms. Cross-platform frameworks provide a cost-effective solution, enabling a single codebase to run on various platforms. We can expect significant improvements in these frameworks, with better performance, more native features and easier debugging.

For example, MAUI is a cross-platform framework introduced by Microsoft as part of .NET 6. It's an evolution of Xamarin.Forms, designed to create native mobile and desktop apps with a single codebase. You can learn more about MAUI on the "Developing New Experiences" track at VSLive! Las Vegas.

3. Continued Emphasis on DevOps and Automation

DevOps practices and automation will become more deeply ingrained in the software development process. Continuous integration and deployment (CI/CD) will continue to evolve, and automation will extend to more areas, such as security (DevSecOps).

The integration of development and operations has been proven to enhance efficiency, reduce errors and speed up deployment. In 2024, we can expect DevOps to evolve further, incorporating more automated processes all while not sacrificing quality. Tools that facilitate continuous testing, integration and monitoring will be crucial. The integration of security into the DevOps pipeline will also be a major focus.

If you're going to be at VSLive! Las Vegas, you can learn the latest on these evolutions in the "Modern Software Engineering" track.

Now, I don't have a crystal ball. I'm sure there is a trend I may have missed, or one that will jump to the top of the stack. But these three areas are definitely worth keeping an eye on. And keep an eye on this blog for future information, tips and tricks for the development world.

Posted by Mickey Gousset on 01/17/2024

Microsoft Fabric was described as "the AI-fication of Microsoft's data business" by RedmondMag writer Joey D'Antoni when it was unveiled at the company's Build 2023 developer conference.

He listed these highlights of the new offering:

- Microsoft's Fabric is a comprehensive data analytics platform that integrates various data tools into a single SaaS offering, aiming to eliminate data silos and promote data sharing within organizations.

- Fabric leverages the concept of data fabric, combining modern trends like data lakes, the delta store, and parquet file formats, presented through a set of standard APIs.

- Fabric incorporates AI capabilities, including Power BI Copilot for DAX language and natural language query functionality. It also introduces Data Activator, a service that monitors data changes and triggers actions, resembling Logic Apps or Power Automate.

"Fabric is a big, bold step from Microsoft," D'Antoni said. "Competitors like Snowflake and partners like Databricks have been making inroads into a traditionally strong business intelligence and analytics market for Microsoft. While Fabric will remain a work in progress for some time, the level of investment and direction from the company in data analytics is promising."

Microsoft Fabric (source: Microsoft).

That's a lot of functionality to take in via an article or two, so a hands-on, interactive, step-by-step demonstration of creating Extract, Transform, Load (ETL) pipelines using Fabric might help Microsoft-centric developers get a better handle on the technology.

Luckily, Microsoft's Sally Dabbah is going to helm just such a presentation -- titled "Step-by-Step Guide: Building ETL Workflows in Microsoft Fabric" -- at the March Visual Studio Live! developer conference in Las Vegas.

"This hands-on session will empower attendees to gain a deep understanding of the ETL process, equipping them with practical skills to efficiently manage and transform their organizational data," said Dabbah, who established herself as a significant voice in Azure Cloud Analytics Services by posting regularly on Microsoft's Tech Community blog.

"By attending this session, participants will discover how to harness the power of Microsoft Fabric for seamless data integration, ensuring they can extract valuable insights from their data," she continued.

Attendees are promised they will:

- Master ETL Workflow Creation: By the end of the session, attendees will be proficient in building ETL workflows from scratch, understanding the essential steps involved in data extraction, transformation, and loading.

- Gain In-Depth Fabric Knowledge: Participants will acquire a thorough understanding of Microsoft Fabric, enabling them to leverage its features and capabilities for data integration and management effectively, and to better understand Fabric concepts such as OneLake, OneCopy, OneSecurity and so on.

- Enhance Data Insight Capabilities: This session will equip attendees with the skills needed to unlock valuable insights from their data, ultimately leading to more informed decision-making and improved organizational performance.

We recently caught up with Dabbah to learn more about her 75-minute, introductory-level presentation in a short Q&A.

VSLive! What inspired you to present a session on building ETL Workflows in Microsoft Fabric?

Dabbah: My inspiration came from recognizing the growing need for efficient data handling in today's data-driven world. As organizations increasingly rely on large and complex datasets, the ability to effectively extract, transform and load data becomes crucial. Microsoft Fabric, with its robust capabilities, offers an innovative solution. This session aims to demystify the process and empower professionals to harness these tools effectively.

For those unfamiliar, could you briefly explain what ETL (Extract, Transform, Load) workflows are, and why Microsoft Fabric is a significant tool in this context?

ETL workflows are processes used to extract data from various sources, transform it into a structured format and load it into a target system for analysis and reporting.
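To make that definition concrete, here is a minimal, in-memory TypeScript sketch of the three stages. It is not Fabric code and does not model any Fabric feature: the comma-separated source lines, the `SalesRecord` shape and the array standing in for a target table are all hypothetical.

```typescript
// Hypothetical raw input, e.g. lines exported from a source system.
const rawSourceLines: string[] = [
  "2024-01-03,north,120.50",
  "2024-01-03,south,bad-value",
  "2024-01-04,North,80.25",
];

// Structured shape the data should have after transformation.
interface SalesRecord {
  saleDate: string;
  region: string;
  amountInDollars: number;
}

// Extract: pull raw rows out of the source (here, just split each line).
function extract(lines: string[]): string[][] {
  return lines.map((line) => line.split(","));
}

// Transform: validate, clean and normalize rows into the structured format.
function transform(rows: string[][]): SalesRecord[] {
  const records: SalesRecord[] = [];
  for (const [saleDate, region, amountText] of rows) {
    const amountInDollars = Number(amountText);
    if (isNaN(amountInDollars)) continue; // drop rows that fail validation
    records.push({ saleDate, region: region.toLowerCase(), amountInDollars });
  }
  return records;
}

// Load: write the structured records into the target (an in-memory array here).
function load(records: SalesRecord[], targetTable: SalesRecord[]): number {
  targetTable.push(...records);
  return records.length;
}

const salesTable: SalesRecord[] = [];
const loadedRowCount = load(transform(extract(rawSourceLines)), salesTable);
console.log(`Loaded ${loadedRowCount} rows`);
```

Keeping each stage as a separate function mirrors the E, T and L split, so each step can be tested or swapped out on its own; a platform such as Fabric provides its own tooling for these stages, which this sketch does not attempt to reproduce.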

"Microsoft Fabric stands out in this context due to its scalability, integration options and advanced features, making it an ideal platform for managing complex data integration tasks."

"Microsoft Fabric stands out in this context due to its scalability, integration options and advanced features, making it an ideal platform for managing complex data integration tasks."

Sally Dabbah, Data Engineer, Azure Cloud Analytics Services, Microsoft

Microsoft Fabric stands out in this context due to its scalability, integration options and advanced features, making it an ideal platform for managing complex data integration tasks.

Your session promises a comprehensive, step-by-step demonstration. Can you give us an overview of what this hands-on approach will look like and how it will benefit attendees, especially those new to ETL workflows?

The session will be structured as a practical, step-by-step guide. Attendees will be walked through the creation of an ETL workflow, starting from data extraction to final loading. This approach will be particularly beneficial for those new to ETL, providing them with a solid foundation and practical skills that can be immediately applied.

Microsoft Fabric offers various features for data integration. Could you highlight some key features or tools within Fabric that are particularly beneficial for building ETL workflows?

Microsoft Fabric offers several features that make it stand out for ETL workflows, such as high scalability, robust data processing capabilities and advanced data integration tools. I will highlight features like real-time data processing and customizable workflow options that cater to various business needs.

You mention that attendees will learn about advanced Fabric concepts like OneLake, OneCopy, OneSecurity and so on. Can you elaborate on how understanding these concepts will enhance their ETL workflow creation?

OneLake, OneCopy, OneSecurity: These advanced concepts represent the cutting-edge aspects of Microsoft Fabric. Understanding OneLake, OneCopy and OneSecurity enables users to manage data more securely, efficiently and in a unified manner. This knowledge enhances the ability to create more sophisticated and secure ETL workflows.

How will mastering ETL workflows in Microsoft Fabric enable attendees to unlock valuable insights from their data, and how can this lead to improved decision-making and organizational performance?

Proficiency in ETL workflows using Microsoft Fabric allows professionals to unlock deep insights from their data, leading to more informed decision-making and improved organizational performance. It equips them with the tools to handle complex data scenarios, thereby enhancing their data analytics capabilities.

Looking ahead, what are some emerging trends or advancements in ETL workflows and data integration that professionals should be aware of, and how does Microsoft Fabric fit into this evolving landscape?

The field of ETL and data integration is rapidly evolving, with trends like real-time data processing, cloud-based integration, and AI-driven analytics becoming more prevalent. Microsoft Fabric is well-positioned in this landscape, offering a platform that adapts to these emerging trends while continuing to provide robust and scalable solutions for ETL workflows.

Note: Those wishing to attend the conference can save hundreds of dollars by registering early, according to the event's pricing page. "Register for VSLive! Las Vegas by the Super Early Bird Deadline (Jan. 16) to save up to $400 and secure your seat for intensive developer training in exciting Las Vegas!" said the organizer of the developer conference.

Posted by David Ramel on 01/15/2024

The consensus is that we are entering a new world following the COVID-19 pandemic, so this might be the time for developers to add to their skillset. The more skills you have, the better positioned you are for future jobs in which multifaceted developers will be in demand.

Companies restarting their business may not have the budget for hiring different developers for different tasks. They may need an old-fashioned jack- or jill-of-all-trades. Combining classic application development expertise with Website abilities may be just the ticket for success when the economy begins reopening.

For developers with desktop application development skills, learning Web frontend languages would let you see Web apps as a whole, which could be valuable to a DevOps team. Or, if you are interested in transitioning to a career in Web development, this is also the time to make your move.

If you are looking to add to your personal knowledge base, Visual Studio Live! has a seminar for you! No prior experience is necessary. Become a Web Developer Quick and Easy is scheduled for June 29-30, 2020. It will be a virtual course you can attend online without leaving your home office, which is ideal for the current public health situation.

This seminar is designed for developers who have little to no proficiency with HTML, CSS, Bootstrap, MVC, JavaScript, jQuery or the Web API but would like to become knowledgeable in these standard Web building languages. However, familiarity with Visual Studio, Visual Studio Code, C#, and .NET is recommended. This is a two-day virtual course that will transform you into a Web developer.

The course is designed for any desktop programmer who wants to learn to develop Web applications using .NET and C#, or for a Web developer who would like to refresh their knowledge of the basics of Web languages.

Here’s what the course covers:

As you know, HTML is the fundamental building block of Web development. You will get a thorough overview and understanding of what goes into creating a Web page. The basics of HTML elements and attributes are illustrated through multiple examples.

Want to learn professional HTML techniques to make your Website look great, be more efficient, and be easier to maintain? Cascading Style Sheets (CSS) are the answer. This part of the seminar serves as an introduction to CSS. You will learn the best practices for working with CSS in your HTML applications.

Today’s Websites are being accessed from a range of devices, including traditional desktop PCs as well as tablets and smartphones. Developing Websites that are responsive to different device sizes is easy when you use the right tools. Twitter’s Bootstrap is the tool of choice these days. Not only is it free, but it also has many free themes that allow you to modify the look and feel quickly. Learning Bootstrap is easy with the resources available on the Web; however, having someone walk you through the basics step-by-step will greatly speed up your learning.

Microsoft's MVC Razor language in Visual Studio is a great way to build Web applications quickly and easily. This seminar introduces you to building your first MVC application.

The de facto standard for programming Web pages is JavaScript, along with the jQuery library. You will be introduced to both JavaScript and jQuery. You will learn to create functions, declare and use variables, and interact with and manipulate elements on Web pages using both JavaScript and jQuery.

The Web API is fast becoming a requirement for developers building Web applications. Whether you use jQuery, Angular, React or another JavaScript framework to interact with data, you need the Web API. You will be shown step-by-step how to get, post, put and delete data using the Web API.
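The four HTTP verbs map onto the familiar create, read, update and delete operations. The following TypeScript sketch models that mapping with an in-memory store rather than a real ASP.NET Web API controller; the `handleRequest` function, the `Product` type and the specific status codes chosen are illustrative assumptions, not the course's code.

```typescript
type HttpMethod = "GET" | "POST" | "PUT" | "DELETE";

interface Product {
  id: number;
  name: string;
}

interface ApiResponse {
  status: number; // HTTP status code
  body?: Product | Product[];
}

// In-memory stand-in for the database behind the API.
const products = new Map<number, Product>();
let nextProductId = 1;

// Hypothetical handler: each HTTP verb maps to one CRUD operation.
function handleRequest(method: HttpMethod, id?: number, payload?: { name: string }): ApiResponse {
  switch (method) {
    case "GET": // Read: one item by id, or every item when no id is given
      if (id === undefined) return { status: 200, body: Array.from(products.values()) };
      return products.has(id) ? { status: 200, body: products.get(id) } : { status: 404 };
    case "POST": { // Create: store a new item and return it with its assigned id
      if (!payload) return { status: 400 };
      const created: Product = { id: nextProductId++, name: payload.name };
      products.set(created.id, created);
      return { status: 201, body: created };
    }
    case "PUT": // Update: replace an existing item
      if (id === undefined || !products.has(id)) return { status: 404 };
      if (!payload) return { status: 400 };
      products.set(id, { id, name: payload.name });
      return { status: 204 };
    case "DELETE": // Delete: remove an existing item
      return id !== undefined && products.delete(id) ? { status: 204 } : { status: 404 };
    default:
      return { status: 405 }; // method not allowed
  }
}

// Walk one item through its full lifecycle.
const createResponse = handleRequest("POST", undefined, { name: "Keyboard" });
const updateResponse = handleRequest("PUT", 1, { name: "Mechanical keyboard" });
const readResponse = handleRequest("GET", 1);
const deleteResponse = handleRequest("DELETE", 1);
const readAfterDeleteResponse = handleRequest("GET", 1);
```

In a real Web API the same mapping lives in controller actions bound to HTTP routes, and a client framework such as jQuery, Angular or React would issue the requests over the network instead of calling a function directly.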

You will come away from this virtual seminar with new skills including:

- HTML, CSS and Bootstrap Fundamentals

- MVC and Web API Fundamentals

- JavaScript and jQuery Fundamentals

Sign up today: Become a Web Developer Quick and Easy

Posted by Richard Seeley on 04/22/2020

Continuous Integration and Continuous Deployment (CI/CD) is great in theory but like its Agile and DevOps companions it is not the easiest thing to put into practice.

GitHub Actions, introduced in the past year, are intended to make CI/CD easier for developer teams to implement. If you are struggling to keep your DevOps culture on track and make Agile work, it might be worth seeing whether GitHub Actions can help. At least, GitHub and its new Microsoft owners sure hope so. Since Microsoft purchased San Francisco-based GitHub in 2018, some of the technology and marketing magic from Redmond, Washington, seems to have infused the company.

In the Visual Studio community, there were once rumors that GitHub actually slowed things down. But now the Microsoft-owned company is aggressively touting the aptly named Actions as a way to keep Agile workflows rolling.

Understanding Actions

GitHub Actions comes in the form of an API that can be used to orchestrate any workflow and to support CI/CD, as explained in this Visual Studio Magazine article. Microsoft promised that Actions would “let developers and others orchestrate workflows based on events and then let GitHub take care of the execution and details,” explained Converge360 editor David Ramel. “These workflows or pipelines can be just about anything to do with automated software compilation and delivery, including building, testing and deploying applications, triaging and managing issues, collaboration and more.”

This orchestration tool is something like the mythical wrench that works for every job you have.

“GitHub Actions now makes it easier to automate how you build, test, and deploy your projects on any platform, including Linux, macOS, and Windows,” Microsoft said in introducing the CI/CD capabilities this past summer. “Run your workflows in a container or in a virtual machine. Actions also supports more languages and frameworks than ever, including Node.js, Python, Java, PHP, Ruby, C/C++, .NET, Android, and iOS. Testing multi-container apps? You can now test your Web service and its database together by simply adding some docker-compose to your workflow file.”

GitHub offers help for developers as they begin to use the new technology. For example, when Actions are enabled on a repository, GitHub will provide suggestions for appropriate workflows according to the project.

GitHub Actions for Azure

Almost simultaneously with the announcement of the CI/CD capabilities, Microsoft also announced GitHub Actions for Azure with more support for developers new to Actions.

"You can find our first set of Actions grouped into four repositories on GitHub,” Microsoft said, “each one containing documentation and examples to help you use GitHub for CI/CD and deploy your apps to Azure." The initial list of repositories to check out included:

- azure/actions (login): Authenticate with an Azure subscription.

- azure/appservice-actions: Deploy apps to Azure App Service using Web Apps and Web Apps for Containers.

- azure/container-actions: Connect to container registries, including Docker Hub and Azure Container Registry, as well as build and push container images.

- azure/k8s-actions: Connect and deploy to a Kubernetes cluster, including Azure Kubernetes Service (AKS).

Getting Started with GitHub Actions at Microsoft HQ

If you want to get down to the nitty gritty with GitHub Actions, Mickey Gousset, DevOps Architect at Microsoft, is teaching a session on it at the Visual Studio Live! Microsoft HQ event this summer.

Gousset will show how GitHub Actions enables you to create custom software development lifecycle workflows directly in your GitHub repository. You can create tasks, called "actions", and combine them to create custom workflows to build, test, package, release and/or deploy any code project on GitHub. In this session you will learn the ins and outs of GitHub Actions, and walk away with workflows and ideas that you can start using in your own repos immediately.

You will learn:

- What GitHub Actions are and why you should care

- How to build/release your GitHub repos using GitHub Actions

- Tips/tricks for your YAML files

Sign up for Visual Studio Live! Microsoft HQ today!

Posted by Richard Seeley on 03/24/2020

We caught up with Jason Bock, a practice lead for Magenic (http://www.magenic.com) and a Microsoft MVP (C#) with more than 20 years of experience working on business applications using a diverse set of frameworks and languages including C#, .NET, and JavaScript.

Bock, the author of ".NET Development Using the Compiler API," "Metaprogramming in .NET," and "Applied .NET Attributes," is leading the full-day “Visual Studio 2019 In-depth” workshop at the upcoming “Visual Studio Live!” conference set for March 30 - April 3 in Austin, Texas.

The workshop begins with the premise that the capabilities and features within Visual Studio are so vast that users may not be aware of everything Visual Studio has to offer. In the full-day session, Bock will give a fast-paced tour of the Visual Studio landscape, including configuration, debugging, code analysis, unit testing, performance, metrics and more. Attendees will also find out about new features in Visual Studio 2019. By the end of this session, developers will have a solid understanding of Visual Studio so they can quickly develop reliable, maintainable solutions. Key takeaways include:

- Understanding the vast Visual Studio ecosystem

- Gaining insights into Visual Studio analysis tools to improve applications

- Finding out how to extend Visual Studio with extensions and templates

Ahead of the workshop, we asked Bock about Visual Studio 2019 and he even shared his favorite little known feature with us.

Question: You have a full-day workshop deep-diving into Visual Studio 2019, so hopefully this isn’t too much of a spoiler, but what is the #1 feature in VS2019 that you’re surprised more developers don’t know about?

Answer: There are a lot of features in Visual Studio, so even if you’ve used it for years, there’s always something new to find. One addition was support for .editorconfig files, which define the formatting styles for a project. If you include that file with a solution, VS will automatically honor those settings, so you no longer have to make sure VS is configured to match the formatting expectations.

Q: What do you think is the most powerful aspect of VS 2019 compared to other versions?

A: There are always new features added to VS, but to me, the biggest change for VS is the frequency of updates and revisions. In the old days, updates to VS were infrequent, service packs could completely invalidate an installation, and using preview versions could only be done within a virtual machine. You just couldn’t trust updates or preview versions. Now, with VS 2017 and 2019, the installation process is much cleaner, and preview versions are truly separated from the “main” version. It’s not perfect, but it’s a big improvement from the way things were.

Q: What is your personal favorite little-known or unknown feature?

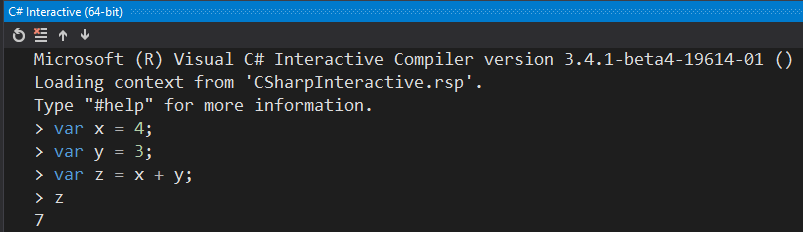

A: C# Interactive. As you can see, you can use C# as a scripting language within VS. It’s somewhat bare-bones in terms of features, but it’s nice if you want to try out some C# code within VS outside of a solution.


Q: What are your go-to Visual Studio tools?

A: I’m not a developer who uses a lot of tools in terms of extensions. I pretty much stick to what’s in VS, and, as it turns out, there’s more there than people realize. With each new version of VS, I watch for new refactorings and key bindings that enhance the coding experience.

Q: If we let you have one more extension, what would it be?

A: This may be surprising to some developers, but I stick to the tools that come with VS, and that typically is more than enough for what I do. I know there are some extensions that .NET developers insist you must use, but knowing what VS has out of the box, especially with recent versions, can be somewhat of an eye-opening experience.

Q: Anything else that we haven’t asked you that you wish we had?

A: The one thing I’d encourage developers to do is get familiar with the key bindings and shortcuts within VS. The more you can keep your hands off the mouse, the faster you’ll be. Use Quick Help (Ctrl + Q) to discover features in VS along with any associated key bindings.

Posted by Richard Seeley on 02/21/2020

Microsoft’s Cosmos DB isn’t a new database. It’s been around since 2010. But when coupled with Azure, its model of a globally distributed database in the cloud seems ideal for business app developers in 2020.

“Cosmos DB enables you to build highly responsive and highly available applications worldwide,” touts Microsoft in its introduction to its NoSQL database. “Cosmos DB transparently replicates your data wherever your users are, so your users can interact with a replica of the data that is closest to them.”

The proximity of data to users is important because speed thrills when it comes to building business apps in the global mobile world. Users expect to see the information they need in the blink of an eye, and that’s what Azure Cosmos provides.

“You can elastically scale throughput and storage, and take advantage of fast, single-digit-millisecond data access using your favorite API including SQL, MongoDB, Cassandra, Tables, or Gremlin,” Microsoft explains.

The elastic scalability of Azure Cosmos provides the flexibility business apps need in markets where demand can rise and fall within hours, minutes or even seconds.

“You can elastically scale up from thousands to hundreds of millions of requests/sec around the globe, with a single API call and pay only for the throughput (and storage) you need,” according to Microsoft. “This capability helps you to deal with unexpected spikes in your workloads without having to over-provision for the peak.”

Retail and Marketing

Applications that can handle the peaks and valleys of the retail industry are among the use cases that Microsoft highlights for Azure Cosmos. Here the company eats its own dog food: “Azure Cosmos DB is used extensively in Microsoft's own e-commerce platforms that run the Windows Store and XBox Live.”

Beyond its own applications, Microsoft says Cosmos is designed to handle online retail functions such as storing catalog data and event sourcing in order processing pipelines:

“Catalog data usage scenarios involve storing and querying a set of attributes for entities such as people, places, and products. Some examples of catalog data are user accounts, product catalogs, IoT device registries, and bill of materials systems. Attributes for this data may vary and can change over time to fit application requirements.

“Consider an example of a product catalog for an automotive parts supplier. Every part may have its own attributes in addition to the common attributes that all parts share. Furthermore, attributes for a specific part can change the following year when a new model is released. Azure Cosmos DB supports flexible schemas and hierarchical data, and thus it is well suited for storing product catalog data.”
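To make "flexible schemas" concrete, here is a minimal Python sketch built on the automotive-parts example. Plain dictionaries stand in for JSON documents, and the property names, values and helper functions are hypothetical; this is not the Cosmos DB SDK or its query language. Every part shares a few common attributes, each part may carry its own, and a newer model can add an attribute without any schema change.

```python
# Plain dicts standing in for JSON documents in one container.
# Property names and values are hypothetical.
parts = [
    {"id": "p1", "partNumber": "BRK-100", "category": "brakes", "price": 49.99,
     "rotorDiameterMm": 280},
    {"id": "p2", "partNumber": "WIP-200", "category": "wipers", "price": 14.50,
     "bladeLengthIn": 22},
    # Next year's model adds an attribute the older part never had.
    {"id": "p3", "partNumber": "BRK-101", "category": "brakes", "price": 54.99,
     "rotorDiameterMm": 300, "modelYear": 2021},
]

# Attributes every part shares; everything else is part-specific.
COMMON = {"id", "partNumber", "category", "price"}


def specific_attributes(doc: dict) -> dict:
    """Return the attributes that only this part carries."""
    return {k: v for k, v in doc.items() if k not in COMMON}


def find(docs: list, **criteria) -> list:
    """Match documents on any attribute; missing attributes simply don't match."""
    return [d for d in docs if all(d.get(k) == v for k, v in criteria.items())]


print([d["id"] for d in find(parts, category="brakes")])  # ['p1', 'p3']
print(specific_attributes(parts[1]))                      # {'bladeLengthIn': 22}
```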

Cosmos Case Study

Microsoft backs up its claims for Azure Cosmos with case studies of the database in action from a number of prestigious customers.

One Microsoft customer story highlights how FUJIFILM used the speed of Azure Cosmos DB to enhance its customers’ experience. The Japanese company, which has transitioned from the photographic film business to become a leader in digital photography, wanted to improve digital photo management and file sharing for users of its IMAGE WORKS service. Photographers are typically anxious to see and share their work as quickly as possible. By using Azure Cosmos DB for its image file database, FUJIFILM made IMAGE WORKS more responsive and reduced its latency. Microsoft noted that end-user response times improved by a factor of 10, while some photographer interactions became 20 or more times faster than with the previous database. With Azure Cosmos DB, a tree view photo display in image search processing, which involved 140 tables and 1,000 rows of SQL queries, went from 45 seconds, which would seem like an eternity to impatient shutterbugs, to two seconds. “The more responsive we can make IMAGE WORKS, the more productive our customers can be,” said Yuki Chiba, Design Leader of the Advanced Solutions Group IMAGE WORKS Team at FUJIFILM Software.

Deep Dive on Cosmos DB

If you want to go beyond reading about it and really explore the Cosmos, Visual Studio Live!, coming to Austin, TX, March 30 through April 3, offers a full-afternoon Deep Dive on Cosmos DB. Leonard Lobel, Microsoft MVP and CTO at Sleek Technologies, Inc., will lead developers on a journey through Cosmos DB, starting with an introduction to its multi-model capabilities, which allow you to store and query schema-free JSON documents (using either the SQL or MongoDB APIs), graphs (Gremlin API), and key/value entities (Table API).

Diving deeper, in Part II, “Building Cosmos DB Applications,” you'll learn how to write apps for Cosmos DB and see how to work with the various Cosmos DB APIs. These APIs support a variety of data models, including the SQL API (for JSON documents), the Table API (for key-value entities), the Gremlin API (for graphs) and the Cassandra API (for columnar data). Regardless of which data model you choose, you'll learn how to provision throughput, and how to partition and globally distribute your data to deliver massive scale. You’ll come away knowing how to:

- Write code to build Cosmos DB applications

- Use the Cosmos DB server-side programming model to run stored procedures, triggers, and user-defined functions

- Explore the special version of SQL designed for querying Cosmos DB
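Partitioning is what lets a container spread across many machines. The conceptual Python sketch below assumes a hypothetical partition key (`customerId`) and a fixed count of four partitions: every document with the same key value hashes to the same place, so related data stays together while different keys spread out. Cosmos DB manages its own hashing and partition splits internally; this illustrates the idea only and is not its actual algorithm.

```python
import hashlib
from collections import defaultdict

# Hypothetical number of physical partitions for illustration.
PHYSICAL_PARTITIONS = 4


def partition_for(partition_key: str, partitions: int = PHYSICAL_PARTITIONS) -> int:
    """Map a partition key value to a partition with a stable hash.

    Python's built-in hash() is salted per process for strings, so a
    SHA-256 digest is used to keep placement deterministic across runs.
    """
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partitions


# Hypothetical orders, partitioned by customer ID.
orders = [
    {"id": "o1", "customerId": "alice"},
    {"id": "o2", "customerId": "bob"},
    {"id": "o3", "customerId": "alice"},
]

placement = defaultdict(list)
for order in orders:
    placement[partition_for(order["customerId"])].append(order["id"])

print(dict(placement))
```

Choosing a key with many distinct, evenly used values is what keeps the load spread across partitions.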

Sign up for the Visual Studio Live! Austin Code Trip today!

Posted by Richard Seeley on 02/13/2020

What's SAFe? Are you doing Scrum? This might sound like dialogue outtakes from an old movie. But it is actually about alternative approaches to application development.

Scrum is sometimes shown in all caps as SCRUM, but it is not an acronym. SAFe, on the other hand, stands for Scaled Agile Framework. Both have official websites: https://www.scrum.org/ and https://www.scaledagileframework.com/.

Both are approaches to Agile development. In the case of SAFe, Agile is its middle name. This whole thing started in 2001, when a group of developers published The Manifesto for Agile Software Development. The idea was to get away from older, cumbersome coding practices, which, despite everybody's best intentions for detailed planning, often started with little more than a manager saying: "I need you guys to write a program that [fill in the blank]."

Because of heroic efforts by developers, that method usually worked; otherwise, we would have had no applications to run since the personal computer revolution. Then there was the Waterfall Method, which organized development into steps, starting with writing requirements (what a concept!) for the planned application. From there it moved through phases of design, coding, QA, production and maintenance. Often those phases were handled by different teams with limited interaction or feedback. It worked more or less like an automobile assembly line, but it was rarely a collaborative process.

Agile is a collaborative process starting with a new emphasis on:

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

There are 12 guiding principles to Agile that you can read about here. This is great, but it's what MBA types call high level. You can see the whole battlefield from 10,000 feet. But what's going on in the trenches? On a day-to-day basis, when you are trying to produce an app, what is everybody supposed to be doing? That's where SAFe and Scrum come into play. They are essentially ways to actually do Agile development. They take different approaches, so you might ask: "What's the difference between SAFe and Scrum?"

Krishna Ramalingam gives basic answers to that question in a seven-minute YouTube video:

"Bottom line both are offshoots of Agile principles," he tells his audience. "Both talk about releasing in short iterations. The fundamental difference with SAFe is Scaled Agile Framework. Scrum focused on a smaller project unit and provides the framework on how this has to be handled."

With Scrum, a team works in iterations called sprints, Ramalingam explains. He uses the example of 10 teams working on 10 projects that will eventually come together as one product.

"All the 10 projects start and stop the sprint at the same time," he explains. "This way planning dependencies become easier and it also gives a view of what each of the teams can implement so as to deliver a bigger meaningful feature for the product level. So you align all the teams and you start sprinting."

A sprint might last three weeks, followed by a one-week "hardening sprint" in which the teams address issues that come up in the review. The advantage of this approach is that all the team members have an idea of what is going on with the other projects. It helps avoid that dreaded question: "Why did you do that?" With this kind of coordinated effort, ideally the 10 projects come together in one app.

"At the end of this you do a complete product level demo and promote the code to the production server and make a formal product release," Ramalingam explains.

But Scrum leaves some of the nitty-gritty parts of the project up to the team members to figure out on their own, he adds. This is where SAFe offers more guidance. It covers things like "how budgeting should be done, the strategy and the governance under which the agile release trains are executed," he says. It also offers more guidance on how 10 projects can be coordinated so they become one final application. Ramalingam sees this as something best suited for larger organizations.

"Should you follow Scrum or SAFe in your organization?" he asks at the conclusion of his video. "If you're starting out new in a small way or have independent small projects it would be best to implement Scrum. As your organization matures, you may eventually evolve practices that are similar to SAFe. If you have a large organization and want to go all out in your strategic planning down to the bottom level engineer, you may be better off starting with SAFe. There is no right or wrong answer. Understand the benefits and pitfalls of both the frameworks and choose wisely."

To achieve that level of understanding and make informed choices, you need an in-depth look at these frameworks. You can find out much more about both SAFe and Scrum at Visual Studio Live! in Las Vegas, a six-day event running March 1-6. Learn about the full Code Trip here.

Posted by Richard Seeley on 12/12/2019

Business Intelligence (BI), which like government intelligence sounds faintly like an oxymoron, has been around a long time. The earliest reference to business intelligence appeared in 1865, according to a Wikipedia article. In more modern times, the term started to appear at IBM in the late 1950s, but Gartner is quoted as saying BI didn't gain traction in the corporate world until the 1990s. So the term appears to have been coined at the end of the Civil War and then moved into common usage with Decision Support Systems, which were developed from 1965 to 1985 to support data-based tactics and strategies. Any way you look at it, BI is not new technology.

Fast-forward to the present, where massive amounts of data are being gathered from the huge surge in IoT devices and processed by increasingly sophisticated Artificial Intelligence software, and the new term is Business Analytics. This is not your grandfather's punch cards being fed into water-cooled mainframe computers. It's not even business analysts working with Lotus 1-2-3 on MS-DOS PCs in 1985. Business Analytics is a whole new ballgame, and if you want to play in the game, you may need to upgrade your skillset.

How Much Data Is Too Much?

With IoT and other edge computing systems collecting billions and billions of event and process data points, some industry watchers are predicting a "Data-apocalypse" in which there will be more information than cloud storage can handle or AI can analyze. But a more optimistic view is that the vast amounts of data being gathered globally present an unprecedented opportunity to give business decision makers a wealth of facts, organized in applications, for developing tactics and strategies. How are products really doing in the marketplace? Are the processes that create those products as efficient and safe as they could be? Who actually wants to buy what you are selling? Business analytics offers the potential to greatly reduce the guesswork that amounted to little more than a coin flip in old-time decision making.

From Theory to Applications

Of course, this is not going to magically appear on business users' desktops. Applications need to be built to meet the specific business analytics needs of individual companies, departments and groups. There are tools developers can use to get those jobs done, including Microsoft Data Platform technologies such as SQL Server, Azure SQL Server, and even good old Power BI.

There is news this month on updates to some Microsoft technologies designed specifically to help handle big data.

On Nov. 5, Azure Data Studio was released; it is designed to provide a multi-database, cross-platform desktop environment for data professionals using the family of on-premises and cloud data platforms on Windows, macOS, and Linux. More information about it is available on this GitHub page.

In the recently released SQL Server 2019 (15.x), SQL Server Big Data Clusters are designed to "allow you to deploy scalable clusters of SQL Server, Spark, and HDFS containers running on Kubernetes," as explained in the Microsoft post "What are SQL Server Big Data Clusters?" The post continues: "These components are running side by side to enable you to read, write, and process big data from Transact-SQL or Spark, allowing you to easily combine and analyze your high-value relational data with high-volume big data. SQL Server Big Data Clusters provide flexibility in how you interact with your big data ... You can query external data sources, store big data in HDFS managed by SQL Server, or query data from multiple external data sources through the cluster. You can then use the data for AI, machine learning, and other analysis tasks."

That Microsoft post provides scenarios for making use of big data for analysis tasks utilizing AI and machine learning.

Get Up to Speed with an Expert

If you are interested in enhancing your Business Analytics skill set, including gaining greater knowledge of Microsoft Data Platform technologies such as SQL Server, Azure SQL Server, and more, there's a workshop for you this month at Visual Studio Live! "From Business Intelligence to Business Analytics with the Microsoft Data Platform" is an all-day workshop that is part of Visual Studio Live! in Orlando, Florida, Nov. 17-22. It is being taught by big data expert Jen Stirrup, a Microsoft Data Platform MVP.

This workshop will cover real-life scenarios with takeaways that you can apply as soon as you go back to your company.

Here are the topics included:

- Introduction to Analytics with the Microsoft Data Platform

- Essential Business Statistics for Analytics Success: the important statistics that business users rely on often in areas such as marketing and strategy.

- Business Analytics for your CEO – what information does your CEO really care about, and how can you produce the analytics that she really wants? In this session, we will go through common calculations and discuss how these can be used for business strategy, along with their interpretation.

- Analytics for Marketing – what numbers do they need, why, and what do they say? In this session, we will look at common marketing scenarios for analytics, and how they can be implemented with the Microsoft Data Platform.

- Analytics for Sales – what numbers do they need on a sales dashboard, why, and what do they say? In this session, we will look at common sales scenarios for analytics such as forecasting and 'what if' scenarios, and how they can be implemented with the Microsoft Data Platform.

- Analytics with Python – When you really have difficult data to crunch, Python is your secret Power tool.

- Business Analytics with Big Data – let's look at big data sources and how we can do big data analytics with tools in Microsoft's Data Platform.

You can find out more about Visual Studio Live! in Orlando here.

Posted by Richard Seeley on 11/20/2019