The consensus is that we are entering a new world following the COVID-19 pandemic, so this might be the time for developers to add to their skillset. The more skills you have, the better positioned you are for future jobs, where multifaceted developers will be in demand.

Companies restarting their businesses may not have the budget to hire different developers for different tasks. They may need an old-fashioned Jack or Jill of all trades. Combining classic application development expertise with Web development skills may be just the ticket for success when the economy reopens.

For developers with desktop application development skills, learning Web front-end languages would let you see Web apps as a whole, which could be valuable to a DevOps team. Or, if you are interested in transitioning to a career in Web development, this is the time to make your move.

If you are looking to add to your personal knowledge base, Visual Studio Live! has a seminar for you! No prior Web development experience is necessary. Become a Web Developer Quick and Easy is scheduled for June 29-30, 2020. It is a virtual course you can attend online without leaving your home office, which is ideal for the current public health situation.

This seminar is designed for developers who have little to no proficiency with HTML, CSS, Bootstrap, MVC, JavaScript, jQuery or the Web API but would like to become knowledgeable in these standard Web technologies. However, familiarity with Visual Studio, Visual Studio Code, C#, and .NET is recommended. This two-day virtual course is designed to transform you into a Web developer.

The course is designed for any desktop programmer who wants to learn to develop Web applications using .NET and C#. It also suits Web developers who would like to refresh their knowledge of the basics of Web languages.

Here’s what the course covers:

As you know, HTML is the fundamental building block of Web development. You will get a thorough overview and understanding of what goes into creating a Web page. The basics of HTML elements and attributes are illustrated through multiple examples.

Want to make your Website look great, run more efficiently, and be easier to maintain? Cascading Style Sheets (CSS) are the answer, and you’ll learn professional techniques for using them. This part of the seminar serves as an introduction to CSS, including best practices for working with CSS in your HTML applications.

Today’s Websites are accessed from a range of devices, including traditional desktop PCs as well as tablets and smartphones. Developing Websites that respond to different screen sizes is easy when you use the right tools, and Bootstrap, originally created at Twitter, is the tool of choice these days. Not only is it free, but it also has many free themes that let you modify the look and feel quickly. Learning Bootstrap is easy with the resources available on the Web, but having someone walk you through the basics step by step will greatly speed up your learning.
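Under the hood, a responsive grid decides how wide each column should be at a given screen width. The following minimal Python sketch models that decision. It assumes Bootstrap 4's default breakpoints: sm 576px, md 768px, lg 992px and xl 1200px. In a real page, Bootstrap does this work through CSS media queries rather than code. The function names are hypothetical.

```python
# Assumption: Bootstrap 4 default min-width breakpoints (px), uncustomized.
# Ordered largest first so the first match is the active breakpoint.
BREAKPOINTS = [("xl", 1200), ("lg", 992), ("md", 768), ("sm", 576)]
TIERS = ["xs", "sm", "md", "lg", "xl"]  # smallest to largest


def breakpoint_for(width_px: int) -> str:
    """Return the tier whose min-width media query matches width_px."""
    for name, min_width in BREAKPOINTS:
        if width_px >= min_width:
            return name
    return "xs"  # below 576px: the extra-small tier


def columns_used(width_px: int, spans: dict) -> int:
    """Resolve mobile-first classes such as col-12 col-md-6 col-lg-4.

    spans maps a tier to a column count out of 12. The largest tier at or
    below the current breakpoint wins, which is how mobile-first classes
    cascade upward.
    """
    current = TIERS.index(breakpoint_for(width_px))
    for tier in reversed(TIERS[: current + 1]):
        if tier in spans:
            return spans[tier]
    return 12  # no class applies yet: the column stacks at full width


card = {"xs": 12, "md": 6, "lg": 4}  # i.e. class="col-12 col-md-6 col-lg-4"
for width in (375, 800, 1024):
    print(width, breakpoint_for(width), columns_used(width, card))
```

In this sketch, the same card spans all 12 columns on a 375px phone, 6 columns at 800px, and 4 columns at 1024px. A single set of classes therefore adapts the layout to each device.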

Microsoft's ASP.NET MVC, with its Razor view syntax, is a great way to build Web applications quickly and easily in Visual Studio. This seminar shows you how to get started building your first MVC application.

JavaScript, often paired with the jQuery library, is the de facto standard for programming Web pages. You will be introduced to both JavaScript and jQuery. You will learn to create functions, declare and use variables, and interact with and manipulate elements on Web pages using both.

The Web API is fast becoming a requirement for developers building Web applications. Whether you use jQuery, Angular, React or another JavaScript framework to interact with data, you need the Web API. You will be shown step by step how to get, post, put and delete data using the Web API.
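The get/post/put/delete pattern maps HTTP verbs onto read, create, update and delete operations. The Python sketch below shows that mapping against an in-memory store. It is not ASP.NET Web API code, since in the course you would write C# controllers. The class and method names are hypothetical, and the status codes follow common REST conventions (201 Created, 204 No Content, 404 Not Found).

```python
import itertools
from typing import Optional, Tuple


class InMemoryResource:
    """Hypothetical stand-in for a Web API controller over one resource type."""

    def __init__(self) -> None:
        self._items = {}                # id -> stored representation
        self._ids = itertools.count(1)  # simple auto-incrementing ids

    def handle(self, method: str, item_id: Optional[int] = None,
               body: Optional[dict] = None) -> Tuple[int, object]:
        """Dispatch an HTTP verb to a CRUD operation; return (status, payload)."""
        method = method.upper()
        if method == "GET":                      # read one item or all items
            if item_id is None:
                return 200, list(self._items.values())
            item = self._items.get(item_id)
            return (200, item) if item is not None else (404, None)
        if method == "POST":                     # create; the server assigns the id
            new_id = next(self._ids)
            self._items[new_id] = {**(body or {}), "id": new_id}
            return 201, self._items[new_id]
        if method == "PUT":                      # replace an existing item
            if item_id not in self._items:
                return 404, None
            self._items[item_id] = {**(body or {}), "id": item_id}
            return 200, self._items[item_id]
        if method == "DELETE":                   # remove an existing item
            if self._items.pop(item_id, None) is None:
                return 404, None
            return 204, None
        return 405, None                         # verb not supported


api = InMemoryResource()
status, created = api.handle("POST", body={"name": "Widget"})
```

The sketch also shows a key design choice. PUT replaces the whole resource at a known id, while POST lets the server choose the id. Repeating the same PUT therefore leaves the store unchanged, but repeating a POST creates another item.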

You will come away from this virtual seminar with new skills including:

- HTML, CSS and Bootstrap Fundamentals

- MVC and Web API Fundamentals

- JavaScript and jQuery Fundamentals

Sign up today: Become a Web Developer Quick and Easy

Posted by Richard Seeley on 04/22/2020

Continuous Integration and Continuous Deployment (CI/CD) is great in theory, but like its Agile and DevOps companions, it is not the easiest thing to put into practice.

GitHub Actions, introduced in the past year, are intended to make CI/CD easier for developer teams to implement. If you are struggling to keep your DevOps culture on track and make Agile work, it might be worth seeing if GitHub Actions can help. At least, GitHub and its new Microsoft owners sure hope so. Since Microsoft purchased San Francisco-based GitHub in 2018, some of Redmond's technology and marketing magic seems to have rubbed off on the company.

In the Visual Studio community, there were once rumors that GitHub actually slowed things down. But now the Microsoft-owned company is aggressively touting the aptly named Actions as a way to keep Agile workflows rolling.

Understanding Actions

GitHub Actions comes in the form of an API that can be used to orchestrate any workflow and support CI/CD, as explained in this Visual Studio Magazine article. Microsoft promised that Actions would “let developers and others orchestrate workflows based on events and then let GitHub take care of the execution and details,” explained Converge360 editor David Ramel. “These workflows or pipelines can be just about anything to do with automated software compilation and delivery, including building, testing and deploying applications, triaging and managing issues, collaboration and more.”

This orchestration tool is something like the mythical wrench that works for every job you have.

“GitHub Actions now makes it easier to automate how you build, test, and deploy your projects on any platform, including Linux, macOS, and Windows,” Microsoft said in introducing the CI/CD capabilities this past summer. “Run your workflows in a container or in a virtual machine. Actions also supports more languages and frameworks than ever, including Node.js, Python, Java, PHP, Ruby, C/C++, .NET, Android, and iOS. Testing multi-container apps? You can now test your Web service and its database together by simply adding some docker-compose to your workflow file.”

GitHub offers help for developers as they begin to use the new technology. For example, when Actions are enabled on a repository, GitHub suggests appropriate workflows based on the project.

GitHub Actions for Azure

At nearly the same time as it announced the CI/CD capabilities, Microsoft also announced GitHub Actions for Azure, with more support for developers new to Actions.

"You can find our first set of Actions grouped into four repositories on GitHub,” Microsoft said, “each one containing documentation and examples to help you use GitHub for CI/CD and deploy your apps to Azure." The initial list of repositories to check out included:

- azure/actions (login): Authenticate with an Azure subscription.

- azure/appservice-actions: Deploy apps to Azure App Service using its Web Apps and Web Apps for Containers features.

- azure/container-actions: Connect to container registries, including Docker Hub and Azure Container Registry, as well as build and push container images.

- azure/k8s-actions: Connect and deploy to a Kubernetes cluster, including Azure Kubernetes Service (AKS).

Getting Started with GitHub Actions at Microsoft HQ

If you want to get down to the nitty-gritty with GitHub Actions, Mickey Gousset, DevOps Architect at Microsoft, is teaching a session on it at the Visual Studio Live! Microsoft HQ event this summer.

Gousset will show how GitHub Actions enables you to create custom software development lifecycle workflows directly in your GitHub repository. You can create tasks, called "actions", and combine them to create custom workflows to build, test, package, release and/or deploy any code project on GitHub. In this session you will learn the ins and outs of GitHub Actions, and walk away with workflows and ideas that you can start using in your own repos immediately.

You will learn:

- What GitHub Actions are and why you should care

- How to build/release your GitHub repos using GitHub Actions

- Tips/tricks for your YAML files

Sign up for Visual Studio Live! Microsoft HQ today!

Posted by Richard Seeley on 03/24/2020

We caught up with Jason Bock, a practice lead for Magenic (http://www.magenic.com) and a Microsoft MVP (C#) with more than 20 years of experience working on business applications using a diverse set of frameworks and languages including C#, .NET, and JavaScript.

Bock, the author of “.NET Development Using the Compiler API,” “Metaprogramming in .NET,” and “Applied .NET Attributes,” is leading the full-day “Visual Studio 2019 In-depth” workshop at the upcoming Visual Studio Live! conference, set for March 30 - April 3 in Austin, Texas.

The workshop begins with the premise that the capabilities and features within Visual Studio are so vast that users may not be aware of everything Visual Studio has to offer. In the full-day session, Bock will give a fast-paced tour of the Visual Studio landscape, including configuration, debugging, code analysis, unit testing, performance, metrics and more. Attendees will also find out about new features in Visual Studio 2019. By the end of this session, developers will have a solid understanding of Visual Studio so they can quickly develop reliable, maintainable solutions. Key takeaways include:

- Understanding the vast Visual Studio ecosystem

- Gaining insights into Visual Studio analysis tools to improve applications

- Finding out how to extend Visual Studio with extensions and templates

Ahead of the workshop, we asked Bock about Visual Studio 2019 and he even shared his favorite little known feature with us.

Question: You have a full-day workshop deep-diving into Visual Studio 2019, so hopefully this isn’t too much of a spoiler, but what is your #1 feature in VS2019 that you’re surprised more developers don’t know about?

Answer: There are a lot of features in Visual Studio, so even if you’ve used it for years, there’s always something new to find. One addition was support for .editorconfig files, which define the formatting styles for a project. If a solution includes that file, VS automatically honors its settings, so you no longer have to make sure VS is configured to match the formatting expectations.

Q: What do you think is the most powerful aspect of VS 2019 compared to other versions?

A: There are always new features added to VS, but to me, the biggest change for VS is the frequency of updates and revisions. In the old days, updates to VS were infrequent, service packs could completely invalidate an installation, and using preview versions could only be done within a virtual machine. You just couldn’t trust updates or preview versions. Now, with VS 2017 and 2019, the installation process is much cleaner, and preview versions are truly separated from the “main” version. It’s not perfect, but it’s a big improvement from the way things were.

Q: What is your personal favorite little-known or unknown feature?

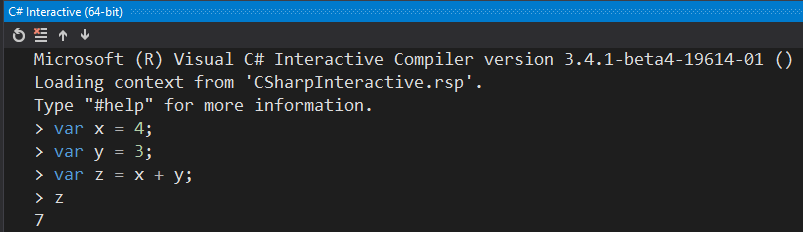

A: C# Interactive. It lets you use C# as a scripting language within VS. It’s somewhat bare-bones in terms of features, but it’s nice if you want to try out some C# code within VS outside of a solution.

Q: What are your go-to Visual Studio tools?

A: I’m not a developer who uses a lot of tools in terms of extensions. I pretty much stick to what’s in VS, and it turns out there’s more there than people realize. With each new version of VS, I watch for new refactorings and key bindings that enhance the coding experience.

Q: If we let you have one more extension, what would it be?

A: This may be surprising to some developers, but I stick to the tools that come with VS, and that typically is more than enough for what I do. I know there are some extensions that .NET developers insist you must use, but knowing what VS has out of the box, especially with recent versions, can be somewhat of an eye-opening experience.

Q: Anything else that we haven’t asked you that you wish we had?

A: The one thing I’d encourage developers to do is get familiar with the key bindings and shortcuts within VS. The more you can keep your hands off the mouse, the faster you’ll be. Use Quick Help (Ctrl + Q) to discover features in VS along with any associated key bindings.

Posted by Richard Seeley on 02/21/2020

Microsoft’s Cosmos DB isn’t a brand-new database; its roots go back to 2010. But coupled with Azure, its model of a globally distributed database in the cloud seems ideal for business app developers in 2020.

“Cosmos DB enables you to build highly responsive and highly available applications worldwide,” touts Microsoft in its introduction to its NoSQL database. “Cosmos DB transparently replicates your data wherever your users are, so your users can interact with a replica of the data that is closest to them.”

The proximity of data to users matters because speed thrills when it comes to building business apps for the global mobile world. Users expect to see the information they need in the blink of an eye, and that’s what Azure Cosmos DB is built to provide.

“You can elastically scale throughput and storage, and take advantage of fast, single-digit-millisecond data access using your favorite API including SQL, MongoDB, Cassandra, Tables, or Gremlin,” Microsoft explains.

The elastic scalability of Azure Cosmos DB provides the flexibility business apps need in markets where demand can rise and fall within hours, minutes or even seconds.

“You can elastically scale up from thousands to hundreds of millions of requests/sec around the globe, with a single API call and pay only for the throughput (and storage) you need,” according to Microsoft. “This capability helps you to deal with unexpected spikes in your workloads without having to over-provision for the peak.”

Retail and Marketing

Applications that can handle the peaks and valleys of the retail industry are among the use cases that Microsoft highlights for Azure Cosmos. Here the company eats its own dog food: “Azure Cosmos DB is used extensively in Microsoft's own e-commerce platforms that run the Windows Store and Xbox Live.”

Beyond its own applications, Microsoft says Cosmos is designed to handle online retail functions such as storing catalog data and event sourcing in order processing pipelines:

“Catalog data usage scenarios involve storing and querying a set of attributes for entities such as people, places, and products. Some examples of catalog data are user accounts, product catalogs, IoT device registries, and bill of materials systems. Attributes for this data may vary and can change over time to fit application requirements.

“Consider an example of a product catalog for an automotive parts supplier. Every part may have its own attributes in addition to the common attributes that all parts share. Furthermore, attributes for a specific part can change the following year when a new model is released. Azure Cosmos DB supports flexible schemas and hierarchical data, and thus it is well suited for storing product catalog data.”
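Here is a minimal sketch of what "flexible schemas and hierarchical data" look like in practice. Plain Python dictionaries stand in for JSON documents. The part records are made up for illustration, and find() is a hypothetical helper, not part of any Cosmos DB SDK.

```python
# Hypothetical automotive-parts documents. All share id, category, name and
# price, but each carries its own extra attributes, which a schema-free
# JSON document store accepts without a table migration.
catalog = [
    {"id": "p1", "category": "brakes", "name": "Brake pad set",
     "price": 49.99, "friction_material": "ceramic"},
    {"id": "p2", "category": "lighting", "name": "Headlight bulb",
     "price": 19.99, "wattage": 55, "fits": {"2019": ["Model A", "Model B"]}},
    {"id": "p3", "category": "brakes", "name": "Rotor",
     "price": 89.00, "diameter_mm": 300},
]

_MISSING = object()  # sentinel so a missing field never equals a criterion


def find(items, **criteria):
    """Return documents whose fields equal every given criterion.

    A document that lacks a queried field simply does not match. This is
    roughly what a Cosmos DB SQL API query such as
    SELECT * FROM c WHERE c.category = 'brakes' does with absent properties.
    """
    return [doc for doc in items
            if all(doc.get(field, _MISSING) == value
                   for field, value in criteria.items())]


# Next model year: extend one part's nested data; other documents are untouched.
catalog[1]["fits"]["2020"] = ["Model A", "Model C"]
```

In this sketch, the new model year changes one part's nested data without touching any other document. That is the property the quoted passage relies on.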

Cosmos Case Study

Microsoft backs up its claims for Azure Cosmos with case studies of the database in action from a number of prestigious customers.

One Microsoft customer story highlights how FUJIFILM used the speed of Azure Cosmos DB to enhance its customers’ experience. The Japanese company, which has transitioned from the photographic film business to become a leader in digital photography, wanted to improve digital photo management and file sharing for users of its IMAGE WORKS service. Photographers are typically anxious to see and share their work as quickly as possible. By using Azure Cosmos DB for its image file database, FUJIFILM was able to give IMAGE WORKS users higher responsiveness and lower latency. Microsoft noted that response times for end users improved by a factor of 10, while some photographer interactions sped up by a factor of 20 or more compared with the previous database. With Azure Cosmos DB, a tree-view photo display in image search processing, which involved 140 tables and 1,000 rows of SQL queries, went from 45 seconds, which would seem like an eternity to impatient shutterbugs, to two seconds. “The more responsive we can make IMAGE WORKS, the more productive our customers can be,” said Yuki Chiba, Design Leader of the Advanced Solutions Group IMAGE WORKS Team at FUJIFILM Software.

Deep Dive on Cosmos DB

If you want to go beyond reading about it and really explore the Cosmos, Visual Studio Live!, coming to Austin, TX, March 30 through April 3, offers a full-afternoon Deep Dive on Cosmos DB. Leonard Lobel, Microsoft MVP and CTO at Sleek Technologies, Inc., will lead developers on a journey through Cosmos DB. The session starts with an introduction to its multi-model capabilities, which allow you to store and query schema-free JSON documents (using either the SQL or MongoDB API), graphs (Gremlin API), and key/value entities (Table API).

Diving deeper, in Part II, “Building Cosmos DB Applications,” you'll learn how to write apps for Cosmos DB and see how to work with the various Cosmos DB APIs. These APIs support a variety of data models, including the SQL API (for JSON documents), Table API (for key-value entities), Gremlin API (for graphs) and Cassandra API (for columnar data). Whatever data model you choose, you'll learn how to provision throughput and how to partition and globally distribute your data to deliver massive scale. You’ll come away knowing how to:

- Write code to build Cosmos DB applications

- Use the Cosmos DB server-side programming model to run stored procedures, triggers, and user-defined functions

- Explore the special version of SQL designed for querying Cosmos DB
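Partitioning is what lets Cosmos DB deliver "massive scale." All items that share a partition key value form a logical partition and are stored together. The Python sketch below illustrates only that idea. Cosmos DB uses its own internal hash and manages physical partitions for you, so the SHA-256 hash, the partition count and the function names here are all assumptions made for illustration.

```python
import hashlib
from collections import defaultdict


def partition_for(key_value: str, partition_count: int) -> int:
    """Map a partition key value to one of partition_count buckets.

    A stable SHA-256 hash is used only for illustration; Cosmos DB applies
    its own internal hashing and manages physical partitions for you.
    """
    digest = hashlib.sha256(key_value.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def distribute(items, key_field: str, partition_count: int) -> dict:
    """Group item ids by bucket, using each item's partition key field."""
    buckets = defaultdict(list)
    for item in items:
        bucket = partition_for(str(item[key_field]), partition_count)
        buckets[bucket].append(item["id"])
    return dict(buckets)


# Hypothetical orders partitioned by customerId: all orders for one customer
# (one logical partition) always land in the same bucket.
orders = [{"id": f"o{i}", "customerId": f"c{i % 3}"} for i in range(9)]
layout = distribute(orders, "customerId", partition_count=4)
```

The sketch also shows why the choice of partition key matters. A key with many distinct values, such as a customer id, spreads requests across partitions. A query that filters on the key can be answered from a single partition, while a key with only a few values can concentrate load on one hot partition.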

Sign up for the Visual Studio Live! Austin Code Trip today!

Posted by Richard Seeley on 02/13/2020

What's SAFe? Are you doing Scrum? This might sound like dialogue outtakes from an old movie. But it is actually about alternative approaches to application development.

Scrum is sometimes shown in all caps as SCRUM but it is not an acronym. SAFe on the other hand stands for Scaled Agile Framework. Both have official websites: https://www.scrum.org/ and https://www.scaledagileframework.com/.

Both are approaches to Agile development. In the case of SAFe, Agile is its middle name. This whole thing started in 2001, when a group of developers published the Manifesto for Agile Software Development. The idea was to get away from older, cumbersome coding practices, which, despite everybody's best intentions for detailed planning, often started with little more than a manager saying: "I need you guys to write a program that [fill in the blank]."

Thanks to heroic efforts by developers, that method usually worked; otherwise, we would have had no applications to run since the personal computer revolution. Then there was the Waterfall Method, which organized development into steps, starting with writing requirements (what a concept!) for the planned application. From there it moved through phases of design, coding, QA, production and maintenance. Often those phases were handled by different teams with limited interaction or feedback. It worked more or less like an automobile assembly line. But it was rarely a collaborative process.

Agile is a collaborative process starting with a new emphasis on:

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

There are 12 guiding principles to Agile that you can read about here. This is great, but it's what MBA types call high level. You can see the whole battlefield from 10,000 feet. But what's going on in the trenches? On a day-to-day basis, when you are trying to produce an app, what is everybody supposed to be doing? That's where SAFe and Scrum come into play. They are essentially ways to actually do Agile development. They take different approaches, so you might ask: "What's the difference between SAFe and Scrum?"

Krishna Ramalingam gives basic answers to that question in a seven-minute YouTube video:

"Bottom line both are offshoots of Agile principles," he tells his audience. "Both talk about releasing in short iterations. The fundamental difference with SAFe is Scaled Agile Framework. Scrum focused on a smaller project unit and provides the framework on how this has to be handled."

With Scrum, a team works in iterations called sprints, Ramalingam explains. He uses the example of 10 teams working on 10 projects that will eventually come together as one product.

"All the 10 projects start and stop the sprint at the same time," he explains. "This way planning dependencies become easier and it also gives a view of what each of the teams can implement so as to deliver a bigger meaningful feature for the product level. So you align all the teams and you start sprinting."

A sprint might last three weeks, followed by a one-week "hardening sprint" in which the teams address issues that come up in the review. The advantage of this approach is that all the team members have an idea of what is going on with the other projects. It helps avoid that dreadful question: "Why did you do that?" With this kind of coordinated effort, ideally the 10 projects come together in one app.
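The aligned cadence Ramalingam describes boils down to simple date arithmetic. The Python sketch below generates one release cycle under stated assumptions: back-to-back three-week sprints followed by a single one-week hardening sprint, and one calendar shared by all 10 teams. The function name and start date are hypothetical, and neither Scrum nor SAFe prescribes this code.

```python
from datetime import date, timedelta


def release_calendar(start: date, sprints: int = 1, sprint_weeks: int = 3,
                     hardening_weeks: int = 1):
    """Return (label, first_day, last_day) tuples for one release cycle.

    Assumes back-to-back sprints of sprint_weeks each, followed by a single
    hardening sprint. All teams share this one calendar, which is what keeps
    them starting and stopping sprints on the same dates.
    """
    schedule = []
    cursor = start
    for n in range(1, sprints + 1):
        last = cursor + timedelta(weeks=sprint_weeks) - timedelta(days=1)
        schedule.append((f"Sprint {n}", cursor, last))
        cursor = last + timedelta(days=1)
    last = cursor + timedelta(weeks=hardening_weeks) - timedelta(days=1)
    schedule.append(("Hardening sprint", cursor, last))
    return schedule


teams = [f"Team {i}" for i in range(1, 11)]   # the 10 teams in the example
shared = release_calendar(date(2020, 3, 2))   # hypothetical start date
plan = {team: shared for team in teams}       # every team gets the same dates
```

Every team reads from the same list, so no team's sprint can drift out of step with the others. That shared calendar is the point of aligning the sprints.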

"At the end of this you do a complete product level demo and promote the code to the production server and make a formal product release," Ramalingam explains.

But Scrum leaves some of the nitty-gritty parts of the project up to the team members to figure out on their own, he adds. This is where SAFe offers more guidance. It covers things like "how budgeting should be done, the strategy and the governance under which the agile release trains are executed," he says. It also offers more guidance on how 10 projects can be coordinated so they become one final application. Ramalingam sees this as best suited for larger organizations.

"Should you follow Scrum or SAFe in your organization?" he asks at the conclusion of his video. "If you're starting out new in a small way or have independent small projects it would be best to implement Scrum. As your organization matures, you may eventually evolve practices that are similar to SAFe. If you have a large organization and want to go all out in your strategic planning down to the bottom level engineer, you may be better off starting with SAFe. There is no right or wrong answer. Understand the benefits and pitfalls of both the frameworks and choose wisely."

To achieve that level of understanding and make informed choices, you need an in-depth look at these frameworks. You can find out much more about both SAFe and Scrum at Visual Studio Live! in Las Vegas, a six-day event running March 1-6. Learn about the whole Code Trip here.

Posted by Richard Seeley on 12/12/2019

Business Intelligence (BI), which, like government intelligence, sounds faintly like an oxymoron, has been around a long time. The earliest reference to business intelligence appeared in 1865, according to a Wikipedia article. In more modern times, the term started to appear at IBM in the late 1950s, but Gartner is quoted as saying BI didn't gain traction in the corporate world until the 1990s. So it appears to be a term coined at the end of the Civil War that later moved into common usage with the Decision Support Systems for data-based tactics and strategies, which were developed from 1965 to 1985. Any way you look at it, BI is not new technology.

Fast-forward to the present. Massive amounts of data are being gathered from the huge surge in IoT devices and processed by increasingly sophisticated Artificial Intelligence software, and the new term is Business Analytics. This is not your grandfather's punch cards being fed into water-cooled mainframe computers. It's not even business analysts working with Lotus 1-2-3 on MS-DOS PCs in 1985. Business Analytics is a whole new ballgame, and if you want to play, you may need to upgrade your skillset.

How Much Data Is Too Much?

With IoT and other edge computing systems collecting billions and billions of event and process data points, some industry watchers are predicting a "Data-apocalypse," where there will be more information than cloud storage can handle or AI can analyze. But a more optimistic view is that the vast amounts of data being gathered globally present an unprecedented opportunity. Applications can organize that wealth of facts so business decision makers can use it to develop tactics and strategies. How are products really doing in the marketplace? Are the processes that create those products as efficient and safe as they could be? Who actually wants to buy what you are selling? Business analytics offers the potential to greatly reduce the guesswork that amounted to little more than a coin flip in old-time decision making.

From Theory to Applications

Of course, this is not going to magically appear on business users' desktops. Applications need to be built to meet the specific business analytics needs of individual companies, departments and groups. There are tools developers can use to get those jobs done, including Microsoft Data Platform technologies such as SQL Server, Azure SQL Server, and even good old Power BI.

There is news this month on updates to some of the Microsoft technologies specifically to help handle big data.

On Nov. 5, a new release of Azure Data Studio became available. Azure Data Studio is designed to provide a multi-database, cross-platform desktop environment for data professionals using the family of on-premises and cloud data platforms on Windows, macOS, and Linux. More information about it is available on this GitHub page.

SQL Server Big Data Clusters, part of the recently released SQL Server 2019 (15.x), are designed to "allow you to deploy scalable clusters of SQL Server, Spark, and HDFS containers running on Kubernetes," as explained in the Microsoft post What are SQL Server Big Data Clusters? "These components are running side by side to enable you to read, write, and process big data from Transact-SQL or Spark, allowing you to easily combine and analyze your high-value relational data with high-volume big data. SQL Server Big Data Clusters provide flexibility in how you interact with your big data ... You can query external data sources, store big data in HDFS managed by SQL Server, or query data from multiple external data sources through the cluster. You can then use the data for AI, machine learning, and other analysis tasks."

That Microsoft post provides scenarios for making use of big data for analysis tasks utilizing AI and machine learning.

Get Up to Speed with an Expert

If you are interested in enhancing your Business Analytics skill set, including gaining greater knowledge of Microsoft Data Platform technologies such as SQL Server, Azure SQL Server, and more, there's a workshop for you this month at Visual Studio Live! “From Business Intelligence to Business Analytics with the Microsoft Data Platform” is an all-day workshop that is part of Visual Studio Live! in Orlando, Florida, Nov. 17-22. It is being taught by big data expert Jen Stirrup, a Microsoft Data Platform MVP.

This workshop will cover real-life scenarios with takeaways that you can apply as soon as you go back to your company.

Here are the topics included:

- Introduction to Analytics with the Microsoft Data Platform

- Essential Business Statistics for Analytics Success: the important statistics that business users use often in business spheres, such as marketing and strategy.

- Business Analytics for your CEO – what information does your CEO really care about, and how can you produce the analytics that she really wants? In this session, we will go through common calculations and discuss how these can be used for business strategy, along with their interpretation.

- Analytics for Marketing – what numbers do they need, why, and what do they say? In this session, we will look at common marketing scenarios for analytics, and how they can be implemented with the Microsoft Data Platform.

- Analytics for Sales – what numbers do they need on a sales dashboard, why, and what do they say? In this session, we will look at common sales scenarios for analytics such as forecasting and 'what if' scenarios, and how they can be implemented with the Microsoft Data Platform.

- Analytics with Python – When you really have difficult data to crunch, Python is your secret power tool.

- Business Analytics with Big Data – let's look at big data sources and how we can do big data analytics with tools in Microsoft's Data Platform.
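The forecasting and "what if" scenarios mentioned above can start as simply as fitting a trend line. The minimal Python sketch below uses only the standard library. It applies a least-squares linear trend as a simple stand-in, not necessarily the method taught in the workshop. The sales figures are made up, and the function names are hypothetical.

```python
from statistics import mean


def linear_forecast(history, periods_ahead):
    """Fit a least-squares straight line to history and project it forward.

    Needs at least two data points (otherwise the slope is undefined).
    """
    xs = range(len(history))
    x_bar, y_bar = mean(xs), mean(history)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, history))
             / sum((x - x_bar) ** 2 for x in xs))
    intercept = y_bar - slope * x_bar
    start = len(history)
    return [intercept + slope * (start + i) for i in range(periods_ahead)]


def what_if(history, growth_pct):
    """Apply a hypothetical uniform growth rate to every period."""
    return [value * (1 + growth_pct / 100) for value in history]


# Made-up monthly unit sales, for illustration only.
monthly_sales = [120, 135, 128, 150, 162, 158, 171, 180]
next_quarter = linear_forecast(monthly_sales, 3)
optimistic = what_if(next_quarter, 10)
```

Real sales data usually has seasonality and noise that a straight line ignores. Treat this as the kind of first pass a sales dashboard might refine with richer models.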

You can find out more about Visual Studio Live! in Orlando here.

Posted by Richard Seeley on 11/20/2019

Any developer can get into building Artificial Intelligence apps with Microsoft Cognitive Services.

Just as, in the pioneering days of e-commerce, you could build an online retail store app by dropping in a shopping cart Web service, Microsoft wants to empower you to create AI apps by adding prebuilt cognitive services.

Suddenly your next app can include machine learning capabilities for speech recognition, text analysis, image recognition, and decision support. The best part is that you don't have to be an AI guru or a data scientist to build that app. That is the democratization of AI that Microsoft is hyping.

As the company says in a recent post explaining Cognitive Services and machine learning: "You don't need special machine learning or data science knowledge to use these services."

If you know how to work with REST APIs, you're good to go, as a Microsoft blog post defining Cognitive Services states:

"Cognitive Services are a set of machine learning algorithms that Microsoft has developed to solve problems in the field of Artificial Intelligence (AI). The goal of Cognitive Services is to democratize AI by packaging it into discrete components that are easy for developers to use in their own apps. Web and Universal Windows Platform developers can consume these algorithms through standard REST calls over the Internet to the Cognitive Services APIs."

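A "standard REST call" to a Cognitive Service is an ordinary HTTPS POST with a JSON body and a key header. The Python sketch below builds, but does not send, a key-phrase extraction request. The URL path, the Ocp-Apim-Subscription-Key header and the documents body shape follow the Text Analytics v3.0 REST pattern as we understand it, so treat them as assumptions to check against the current API reference. The endpoint host and key are placeholders.

```python
import json
import urllib.request


def build_key_phrase_request(endpoint: str, key: str, texts):
    """Build, but do not send, a key-phrase extraction request.

    Assumption: path, header and body shape follow the Text Analytics v3.0
    REST pattern; verify against the current API reference before use.
    """
    body = {"documents": [
        {"id": str(i), "language": "en", "text": text}
        for i, text in enumerate(texts, start=1)
    ]}
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/text/analytics/v3.0/keyPhrases",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,   # resource key (placeholder below)
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical resource endpoint and placeholder key.
req = build_key_phrase_request(
    "https://my-resource.cognitiveservices.azure.com/", "<your-key>",
    ["Cosmos DB replicates data close to your users."])
# Sending it would be: json.loads(urllib.request.urlopen(req).read())
```

Nothing in the request involves model training or data science. That is the sense in which these services put AI behind a plain Web API.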
Applications: Meet LUIS

All this data science abstraction is interesting, but how does it work in a real-world app? Microsoft has examples, such as its cognitive service called LUIS, explained here:

Language Understanding (LUIS) is a cloud-based API service that applies custom machine-learning intelligence to a user's conversational, natural language text to predict overall meaning, and pull out relevant, detailed information.

A client application for LUIS is any conversational application that communicates with a user in natural language to complete a task. Examples of client applications include social media apps, chat bots, and speech-enabled desktop applications.
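The client application's side of this exchange is mostly routing. It reads the predicted intent and extracted entities and decides what to do. The Python sketch below assumes a response shaped loosely like a LUIS v3 prediction, with a "prediction" object holding "topIntent", per-intent "score" values and an "entities" map. Verify that shape against the LUIS documentation. The intent name CheckOrderStatus, the entity name OrderNumber and the 0.5 confidence threshold are all hypothetical.

```python
def top_intent(response: dict, min_score: float = 0.5) -> str:
    """Return the top-scoring intent, or 'None' if the model is not confident."""
    prediction = response["prediction"]
    intent = prediction["topIntent"]
    score = prediction["intents"].get(intent, {}).get("score", 0.0)
    return intent if score >= min_score else "None"


def entity_values(response: dict, name: str) -> list:
    """Return the values extracted for one entity, or an empty list."""
    return response["prediction"].get("entities", {}).get(name, [])


def respond(response: dict) -> str:
    """Turn a prediction into the bot's reply (hypothetical intents/entities)."""
    intent = top_intent(response)
    if intent == "CheckOrderStatus":
        numbers = entity_values(response, "OrderNumber")
        return f"Looking up order {numbers[0]}..." if numbers else "Which order?"
    return "Sorry, could you rephrase that?"


# A made-up prediction in the assumed v3-style shape.
sample = {
    "query": "where is order 1234",
    "prediction": {
        "topIntent": "CheckOrderStatus",
        "intents": {"CheckOrderStatus": {"score": 0.92}, "None": {"score": 0.05}},
        "entities": {"OrderNumber": ["1234"]},
    },
}
```

Falling back to a clarifying question when the score is low is one reasonable design choice. It keeps a chat bot from acting on a guess.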

Welcome to Cognitive Computing

Apps like a LUIS-powered chat bot fall into the brave new world of cognitive computing, which Wikipedia defines in this article.

"Cognitive computing (CC) describes technology platforms that, broadly speaking, are based on the scientific disciplines of artificial intelligence and signal processing. These platforms encompass machine learning, reasoning, natural language processing, speech recognition and vision (object recognition), human–computer interaction, dialog and narrative generation, among other technologies."

As the article goes on to explain, cognitive computing basically means AI technologies acting like a human in interactions with actual humans. LUIS can help you create a chat bot to talk to visitors to your website or online retail store.

Microsoft Content Moderator is another cognitive service. It acts like a human editor, checking that content posted to your site does not contain offensive material. The company envisions many jobs for it, including:

- Online marketplaces that moderate product catalogs and other user-generated content.

- Gaming companies that moderate user-generated game artifacts and chat rooms.

- Social messaging platforms that moderate images, text, and videos added by their users.

- Enterprise media companies that implement centralized moderation for their content.

- K-12 education solution providers filtering out content that is inappropriate for students and educators.
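In most of those scenarios the service screens content and people make the final call on borderline cases. The TypeScript sketch below shows one way an app might route a screened post. The `ScreenResult` shape, its field names, and the threshold are hypothetical simplifications, not the Content Moderator response format, so you would map the real response into something like it first.

```typescript
// Hypothetical, simplified screening result; map the real service response
// into this shape before deciding what to do with a post.
interface ScreenResult {
  flaggedTerms: string[]; // profanity or custom blocklist matches
  reviewRecommended: boolean; // the screener suggests a human look at it
}

type ModerationDecision = "publish" | "hold-for-review" | "reject";

function decideModeration(result: ScreenResult, maxTerms: number): ModerationDecision {
  // Clear-cut abuse is rejected outright.
  if (result.flaggedTerms.length > maxTerms) return "reject";
  // Anything borderline goes to a human moderator's queue.
  if (result.flaggedTerms.length > 0 || result.reviewRecommended) return "hold-for-review";
  return "publish";
}

console.log(decideModeration({ flaggedTerms: ["spam-link"], reviewRecommended: false }, 2)); // prints "hold-for-review"
```

Sending borderline posts to a queue rather than rejecting them keeps false positives from silently deleting legitimate user content.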

Considering all the news and controversy surrounding online postings, working with this technology may be a hot zone.

Cognitive Services Differ from Machine Learning

But before you start fancying yourself a machine learning aficionado, Microsoft hastens to add that there are differences between ML and its Cognitive Services offering.

"A Cognitive Service provides a trained model for you," the company states. "This brings data and an algorithm together, available from a REST API(s) or SDK. You can implement this service within minutes, depending on your scenario. A Cognitive Service provides answers to general problems such as key phrases in text or item identification in images."

If you're going to get into ML, you are going to need to up your AI and data science skill levels.

As Microsoft differentiates it: "Machine learning is a process that generally requires a longer period of time to implement successfully. This time is spent on data collection, cleaning, transformation, algorithm selection, model training, and deployment to get to the same level of functionality provided by a Cognitive Service. With machine learning, it is possible to provide answers to highly specialized and/or specific problems. Machine learning problems require familiarity with the specific subject matter and data of the problem under consideration, as well as expertise in data science."

Okay, so it's back to the classroom if you want to learn to do all that stuff.

Learn AI Application Development

At Visual Studio Live! in Orlando, FL, Nov. 17-22, 2019, there is a track called Learn AI Application Development.

"Artificial Intelligence is changing how you build apps," states the course description. "Technologies like advanced machine learning, natural language processing, and more, mean you can build truly 'smart apps'. With today's accelerated development cycles, you need to be ready to build the next generation of self-learning applications. In this track, you'll find talks on interacting with intelligent agents like Alexa and Google Assistant, Microsoft's Cognitive Services, Azure Machine Learning, Databricks and DevOps for AI."

Find out about all the tracks at Visual Studio Live! in Orlando here.

Posted by Richard Seeley on 10/16/2019

If you are developing business-to-consumer applications but you're not an expert in configuring secure identity management, Microsoft says its Azure Active Directory (AD) Business to Consumer (B2C) identity offering can provide everything you need.

Azure AD B2C will do all the heavy lifting when it comes to things like third-party authentication, single sign-on (SSO), and even protection against denial-of-service and brute-force password attacks, the Redmond-based software company says.

To show how easy it is for developers to use, Microsoft produced a YouTube video titled What is Azure Active Directory B2C? In the video, posted this month, Adam Stoffel, Senior Product Manager for Azure AD, makes a bold statement: "Microsoft will act as the secure front door to any of these applications and we'll worry about the safety and scalability of the authentication platform."

Azure AD B2C is what Stoffel calls "a white label authentication solution," which developers can customize with the look and feel of their web or mobile app using a little HTML, CSS, or JavaScript programming. While B2C, as the Microsoft people refer to this product, is busy in the backend making sure the customers logging in are who they say they are and aren't bad guys, your customers will feel they are interacting with your company. For their convenience, your customers can use their social identities, including Twitter, Facebook and LinkedIn, to sign up and sign in. B2C uses authentication protocols including OpenID Connect, OAuth 2.0, and SAML, and can integrate with off-the-shelf software packages.

"B2C can also centralize collection of user profiles and preference information and capture detailed analytics information about behavior and sign up conversion," Stoffel explains. "By serving as central authentication authority for all your applications, B2C provides you with a way to build a single sign on for any API, web or mobile application. We will handle things like denial of service, password spray and brute force attacks, so you can focus on your core business and stay out of the identity business."

Microsoft is clearly assuming most B2C developers want to stay out of the identity business.
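Because B2C speaks standard OpenID Connect, signing a user in starts with redirecting the browser to an authorization endpoint for a user flow. This sketch builds such a URL using the `b2clogin.com` authority format. The tenant, user flow name (`B2C_1_signupsignin`), client ID, and redirect URI are placeholders. It is illustrative only: a production app should also use PKCE and would normally let a library such as MSAL build this request.

```typescript
// Sketch of an OpenID Connect authorization request for an Azure AD B2C user flow.
// All values in the usage example are placeholders.

interface AuthorizeParams {
  tenant: string; // "contoso" for contoso.onmicrosoft.com
  policy: string; // user flow name, e.g. "B2C_1_signupsignin"
  clientId: string; // application (client) ID from the app registration
  redirectUri: string; // must match a redirect URI registered for the app
  state: string; // random value echoed back, used to detect forged responses
  nonce: string; // random value echoed in the ID token, used against replay
}

function buildAuthorizeUrl(p: AuthorizeParams): string {
  const base =
    `https://${p.tenant}.b2clogin.com/${p.tenant}.onmicrosoft.com/` +
    `${p.policy}/oauth2/v2.0/authorize`;
  const query: { [key: string]: string } = {
    client_id: p.clientId,
    response_type: "code", // authorization code flow
    redirect_uri: p.redirectUri,
    scope: "openid offline_access", // ID token plus a refresh token
    state: p.state,
    nonce: p.nonce,
  };
  const pairs = Object.keys(query).map(
    (k) => `${encodeURIComponent(k)}=${encodeURIComponent(query[k])}`
  );
  return `${base}?${pairs.join("&")}`;
}

const signInUrl = buildAuthorizeUrl({
  tenant: "contoso",
  policy: "B2C_1_signupsignin",
  clientId: "00000000-0000-0000-0000-000000000000",
  redirectUri: "https://app.example.com/callback",
  state: "random-state-value",
  nonce: "random-nonce-value",
});
console.log(signInUrl);
```

B2C renders the branded sign-up or sign-in pages at that URL, then redirects back to `redirect_uri` with a code the app exchanges for tokens.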

The video includes a demo of Azure AD B2C doing its stuff for an online grocery store. It shows how signing up and signing on looks to the user. It also shows how the user profiles can be customized. Say you are allergic to peanuts. The application will flag any product with ingredients that might impact your allergy.

Read All About It

For developers who would rather read documentation than watch how-to videos, Microsoft has detailed instructions on using Azure AD B2C.

There is actually a written version of What is Azure Active Directory B2C? that is a little more technical and freer of marketing hype. The authors, a committee of Microsoft techies, aim this documentation at all levels of expertise.

"If you're an application developer with or without identity expertise, you might choose to define common identity user flows using the Azure portal," they explain. "If you are an identity professional, systems integrator, consultant, or on an in-house identity team, are comfortable with OpenID Connect flows, and understand identity providers and claims-based authentication, you might choose XML-based custom policies."

This doc covers:

- Protocols and tokens

- Tenants and applications

- User journeys

- Identity providers

- Page customization

- Developer resources

This doc takes about eight minutes to read if you don't move your lips.

There are also links in it to more documentation and tutorials.
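The "protocols and tokens" topic boils down to this: after sign-in, your app receives tokens whose claims (user ID, name, custom attributes) are base64url-encoded JSON. The sketch below decodes the claims of a JWT such as an ID token for inspection. It deliberately does NOT verify the signature, issuer, audience, or expiry; an app must validate those, typically with a library, before trusting any claim. The token in the usage line is made up.

```typescript
// Decode (NOT verify) the claims in a JWT such as an Azure AD B2C ID token.
// Pure TypeScript so it runs in browsers and Node without extra libraries.

const BASE64_ALPHABET =
  "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Convert base64url text (RFC 4648 section 5, padding optional) to raw bytes.
function base64UrlToBytes(input: string): number[] {
  const b64 = input.replace(/-/g, "+").replace(/_/g, "/");
  const bytes: number[] = [];
  let buffer = 0; // holds fewer than 8 leftover bits between iterations
  let bits = 0;
  for (let i = 0; i < b64.length; i++) {
    const c = b64.charAt(i);
    if (c === "=") break; // padding marks the end of the data
    const value = BASE64_ALPHABET.indexOf(c);
    if (value < 0) throw new Error(`Invalid base64url character: ${c}`);
    buffer = (buffer << 6) | value;
    bits += 6;
    if (bits >= 8) {
      bits -= 8;
      bytes.push((buffer >> bits) & 0xff);
      buffer &= (1 << bits) - 1; // keep only the bits not yet emitted
    }
  }
  return bytes;
}

// Turn UTF-8 bytes into a string by percent-encoding them for decodeURIComponent.
function bytesToUtf8(bytes: number[]): string {
  const escaped = bytes
    .map((b) => "%" + (b < 16 ? "0" : "") + b.toString(16))
    .join("");
  return decodeURIComponent(escaped);
}

// Return the payload (claims) section of a header.payload.signature token.
function decodeJwtClaims(token: string): { [claim: string]: unknown } {
  const parts = token.split(".");
  if (parts.length !== 3) throw new Error("Expected header.payload.signature");
  return JSON.parse(bytesToUtf8(base64UrlToBytes(parts[1])));
}

// Made-up token whose payload is {"sub":"1"}; the header and signature are dummies.
console.log(decodeJwtClaims("header.eyJzdWIiOiIxIn0.signature"));
```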

Up Close and Personal Training

If your preference runs to hands-on learning rather than watching videos or reading copious amounts of documentation, VS Live! in Chicago, Oct. 6-10, offers the session Reach Any User on Any Platform with Azure AD B2C. Nick Pinheiro, Microsoft Cloud & Software Architect, will be the instructor for this session, which is aimed at the introductory to intermediate level.

"In this session," the description says, "you will learn how to allow users to login to your web or mobile apps with their social and consumer identities or email address with Azure AD B2C. Technologies include: Azure AD B2C, Azure App Service, API Apps, Xamarin and more."

It will cover:

- Enabling and configuring Azure AD's Business to Consumer (B2C) identity offering

- Using the Microsoft Graph API to access the user data in Azure AD B2C

- Integrating your existing app identity with Azure AD B2C
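For the Microsoft Graph item, one detail worth knowing is how B2C custom user attributes are named: in Graph they appear as `extension_<client ID of the b2c-extensions-app, without hyphens>_<attribute name>`. This sketch builds that name and a Graph `GET /users/{id}` URL with `$select`. The IDs and the `FoodAllergies` attribute are hypothetical, and the actual request also needs an `Authorization: Bearer` access token with permission to read users.

```typescript
// Hypothetical IDs throughout; copy real ones from your B2C tenant.

// B2C custom attributes surface in Microsoft Graph as
// extension_<b2c-extensions-app client ID without hyphens>_<attribute name>.
function extensionAttributeName(extensionsAppClientId: string, attribute: string): string {
  return `extension_${extensionsAppClientId.replace(/-/g, "")}_${attribute}`;
}

// Build a Graph v1.0 URL that reads selected properties of one user.
function userProfileUrl(userId: string, properties: string[]): string {
  return `https://graph.microsoft.com/v1.0/users/${encodeURIComponent(userId)}?$select=${properties.join(",")}`;
}

const allergyAttribute = extensionAttributeName(
  "a1b2c3d4-0000-1111-2222-333344445555", // hypothetical b2c-extensions-app client ID
  "FoodAllergies" // hypothetical custom attribute
);
const profileUrl = userProfileUrl("00000000-aaaa-bbbb-cccc-000000000000", [
  "displayName",
  allergyAttribute,
]);

// Send with an "Authorization: Bearer <access token>" header from any HTTP client.
console.log(profileUrl);
```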

Posted by Richard Seeley on 09/19/2019

Full stack web developers are in great demand, with job search sites dangling attractive salaries.

Is this job for you?

If you've heard about this employment category and are intrigued by the possibilities, you still might want to look before you leap.

To start with: What exactly are we talking about when we talk about full stack web development?

It is a little like having access to all the rooms in the mansion. As a full stack web developer, you know how to work in the front rooms and the back rooms and you have access to all the connecting hallways and rooms in between.

The upcoming VSLive! in Chicago has a learning experience that is a full track on the full stack. Here's how it's described:

Full Stack Web Development

Web development has become the dominant approach for building enterprise software. To provide your best on every project, you need to understand the server and browser client worlds. On the server there's ASP.NET Core with MVC, the new Razor Pages, and support for building services. The browser client is evolving rapidly, with constant changes and updates to JavaScript, TypeScript, Angular, React, and other related frameworks ...

Topics in this track include:

- ASP.NET Core intro and deep dive

- Getting started with Razor Pages

- Angular 101

- Managing async data in Angular

- Designing advanced Angular components

- Advanced Azure App Services

- Advanced TypeScript

So there you have the components of what you need to know to get started in the full stack world. There's a lot going on in the Microsoft development world with ASP.NET Core as well as Azure, Razor, Angular and TypeScript. Are you up to date on these core technologies? Here is the latest news.

Can You Say Azure?

Since Oct. 2008, when Microsoft announced its cloud computing service, Azure has been the most mispronounced product name in history. Do a search on how to say Azure and many variations appear. The American pronunciation is said to be AZH-er. But a lot of computer industry analysts seem bent on outdoing each other in coming up with different ways to say it. Originally, before Microsoft presumably took the name from an RGB color wheel, azure was the name for the bright blue of a clear sky. Maybe that sky had a fluffy white cloud, hence the Microsoft naming logic.

Onward: Microsoft Azure is a big deal in the cloud computing world, and full stack web developers need to know about it. Blockchain, which is big news everywhere, was among the previews highlighted in May at the Microsoft Build developer conference, as Paul Schnackenburg explained in an in-depth Virtualization & Cloud Review article, A Look at the New Azure Releases & Improvements Revealed at Build 2019. There's a preview of Azure Blockchain Service providing point-and-click creation of fully managed networks with management and governance built in. "There's also an Azure Blockchain Development Kit," he writes, "which brings connectors for Flow and LogicApps, another move to make adoption of this complex technology easier for non-experts. And the plug-in for Visual Studio Code is going to simplify development."

What's New for ASP.NET Core

With .NET Core 3.0 becoming production-ready, Microsoft is focusing on new features for ASP.NET Core, including top-level ASP.NET Core templates in Visual Studio: "The ASP.NET Core templates now show up as top level templates in Visual Studio in the 'Create a new project' dialog," Microsoft's Daniel Roth explained in a July Visual Studio Magazine article. What's New for ASP.NET Core offers summaries of the new features.

Working with Razor and Blazor

If you want to start working on the frontend, Visual Studio Magazine offers a handy how-to guide with code samples. In THE PRACTICAL CLIENT: How to Dynamically Build the UI in Blazor Components, Peter Vogel explains: "You have two tools for generating your initial UI in a Blazor component: ASP.NET's Razor and Blazor's RenderFragment." He shows how to use both to integrate with your C# code and also offers a caveat about what you can't do.

Angular 8 Grows Ivy

If you are angling for a full stack web developer position, you need to know your Angular. As a Wikipedia article explains: "Angular is a TypeScript-based open-source web application framework led by the Angular Team at Google and by a community of individuals and corporations." The latest version, Angular 8, was released this past May but the article notes that Google has promised new upgrades twice a year. The future of Angular is Ivy, which is a "backwards compatible, completely new render engine based on the incremental DOM architecture. Ivy has been engineered with tree shaking in mind, which means that application bundles will only include the parts of Angular source code that is actually used by the application." Angular 8 has an opt-in preview of Ivy with these features:

- Generated code that is easier to read and debug at runtime.

- Faster re-build time.

- Improved payload size.

- Improved template type checking.

- Backwards compatibility.

Because Angular is an open source project, there are lots of websites with Angular information, including https://opensource.google.com/projects/angular and https://github.com/topics/angular.

Pop Goes the TypeScript

"Microsoft created the open source TypeScript language in 2012 and it has climbed steadily in popularity thanks to its unique scheme of providing optional static typing for JavaScript-based coding, among other features," explains David Ramel, editor of Visual Studio Magazine, in a recent article, TypeScript Cracks Top 10 in Programming Language Popularity Ranking. In another article on the latest TypeScript 3.6, the open source project's program manager Daniel Rosenwasser covers the latest features with links so you can take a deep dive into what it all means.
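The "optional static typing" idea is easiest to see side by side. In the hypothetical example below, `totalLoose` uses `any` and behaves like plain JavaScript, while `totalTyped` opts into types so the compiler catches mistakes before the code runs. Either way, the annotations are erased when TypeScript compiles to JavaScript.

```typescript
// JavaScript-style function: "any" opts out of type checking entirely.
function totalLoose(items: any): any {
  let sum = 0;
  for (const item of items) sum += item.price * item.qty;
  return sum;
}

// The same logic with opt-in static types: a misspelled property or a
// string where a number belongs is now a compile-time error.
interface LineItem {
  price: number;
  qty: number;
}

function totalTyped(items: LineItem[]): number {
  let sum = 0;
  for (const item of items) sum += item.price * item.qty;
  return sum;
}

const cart: LineItem[] = [
  { price: 2.5, qty: 4 },
  { price: 10, qty: 1 },
];
console.log(totalLoose(cart), totalTyped(cart)); // prints 20 20
// totalTyped([{ price: "2.5", qty: 4 }]); // rejected by the compiler: string is not number
```

Because typing is optional, an existing JavaScript codebase can adopt TypeScript one file or one function at a time.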

Get on Track for Full Stack

This blog offers a peek at what you need to know to be a full stack web developer. If you want to dive deep, sign up for VSLive! Chicago.

Posted by Richard Seeley on 08/27/2019

If you are working with earlier versions of plain old ASP.NET, but need to upgrade your skills for Microsoft Azure DevOps, you may be looking to get up to speed on its smarter brother, ASP.NET Core, which is growing in popularity with the developer community.

According to a recent survey by developer tooling specialist JetBrains, C# is the "most loved" programming language, and a recent Visual Studio Magazine article reports growing regard for .NET Core. "… the survey indicates Microsoft's new open source, cross-platform 'Core' direction is gaining traction but still has a long way to go as it usurps the ageing, Windows-only .NET Framework, with .NET Core and ASP.NET Core leading the migration," the article noted.

ASP.NET Core is "a complete rewrite that unites the previously separate ASP.NET MVC and ASP.NET Web API into a single programming model," according to a Wikipedia article. "ASP.NET Core applications support side by side versioning in which different applications, running on the same machine, can target different versions of ASP.NET Core. This is not possible with previous versions of ASP.NET."

Microsoft sought to distance the new framework from the older versions of ASP.NET. The company didn't want it to be thought of as simply an update, so the working title, ASP.NET 5, was changed to ASP.NET Core 1.0 for its 2016 release to highlight its status as a brand new product.

Microsoft has recently published an Introduction to ASP.NET Core that covers what developers need to know about the framework for building cloud-based, Internet-connected applications. It touts the framework's advantages, including:

- Build web apps and services, IoT apps, and mobile backends.

- Use your favorite development tools on Windows, macOS, and Linux.

- Deploy to the cloud or on-premises.

- Run on .NET Core or .NET Framework.

Why ASP.NET Core?

Microsoft is aiming at ASP.NET 4.x developers, said to number in the millions, touting ASP.NET Core's ability to integrate seamlessly with popular client-side frameworks and libraries, including Blazor, Angular, React, and Bootstrap. The company also points out that the new framework provides benefits including:

Beyond explaining what the framework can do, the Microsoft tutorial page offers developers a step-by-step "learning path" with code samples for a basic app to show how it works.

Would you rather see how it's done than read the instructions? There is a fun and informative one-hour YouTube video featuring Daniel Roth, program manager on Microsoft's ASP.NET team, covering "Full stack web development with ASP.NET Core 3.0 and Blazor." The highly entertaining host shows an enthusiastic live audience how to build a pizza store web app.

If videos are your thing, Microsoft's ASP.NET Community Standup site offers scheduled tutorials that you can watch live. All the past live events, several of them featuring the irrepressible Roth, are available for replay. Recent episodes also included the June ASP.NET Core 3.0 Preview 6 Release Party.

Hands-on Training for Building a Modern DevOps Pipeline

If you are looking for in-person hands-on training, ASP.NET Core will be in the spotlight on Sept. 29 at VS Live! San Diego. There will be a full-day hands-on lab: Building a Modern DevOps Pipeline on Microsoft Azure with ASP.NET Core and Azure DevOps.

Attendees will come away with an ASP.NET Core app and a SQL Server Database running in Azure with a full continuous integration / continuous deployment (CI/CD) pipeline managed by Azure DevOps.

The instructors will begin with a review of the current thinking on DevOps. Next will be the planning and tracking phase where the architecture of the app will be broken out and defined. Then the dev & test phase where attendees get feature flags implemented, CI builds working, manual and automated tests, and more. In the release phase, you will learn how to create a deployment pipeline to multiple environments and how to validate a deployment after its release using Azure App Services (both web apps and containers). Finally, the monitor and learn phase will cover analytics and user feedback and how you start the cycle over again.
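Feature flags, mentioned in the dev & test phase, let the same build move through every pipeline environment while unfinished features stay hidden. This is a minimal sketch with a hypothetical in-memory flag list and flag names; real projects usually read flags from configuration or a service such as Azure App Configuration.

```typescript
// Hypothetical environments and flags for illustration only.
type Environment = "dev" | "test" | "prod";

interface FeatureFlag {
  name: string;
  enabledIn: Environment[];
}

const flags: FeatureFlag[] = [
  { name: "NewCheckout", enabledIn: ["dev", "test"] }, // still being validated
  { name: "ProductReviews", enabledIn: ["dev", "test", "prod"] }, // fully released
];

function isEnabled(flagName: string, env: Environment): boolean {
  const flag = flags.filter((f) => f.name === flagName)[0];
  // Unknown flags default to off, so a missing entry never exposes unfinished work.
  return flag !== undefined && flag.enabledIn.indexOf(env) >= 0;
}

console.log(isEnabled("NewCheckout", "prod")); // prints false
```

Defaulting to off separates deploying code from releasing a feature: the CI/CD pipeline can ship every build to production, and turning a feature on becomes a configuration change rather than a new deployment.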

By the end of the day, you will have a CI/CD pipeline configured, a deployed app, and the hands-on experience on how to build a modern ASP.NET Core and SQL Database solution that runs in Azure using Azure DevOps.

You can find out more and register here.

Posted by Richard Seeley on 07/19/2019