Microservices design patterns are software design patterns that generate reusable, autonomous services. The goal for developers using microservices is to accelerate application releases. With microservices, developers can deploy each service independently if desired.

They have their strengths and drawbacks (thankfully more strengths), and there are many more cases where they are appropriate than not. Here are some listings of top microservices design patterns and their use cases that we found in the blog-iverse.

This post is a nice fundamental rundown of some of the basic microservice design patterns. It’s a little older, but still germane. Post author Arun Gupta writes, "The main characteristics of a microservices-based application are defined in Microservices, Monoliths, and NoOps. They are functional decomposition or domain-driven design, well-defined interfaces, explicitly published interface, single responsibility principle, and potentially polyglot. Each service is fully autonomous and full-stack. Thus changing a service implementation has no impact to other services as they communicate using well-defined interfaces. There are several advantages of such an application, but it’s not a free lunch and requires a significant effort in NoOps."

Then Gupta lists several microservices design patterns he has found tried and true over the years:

"Aggregator Microservice Design Pattern: The first, and probably the most common, is the aggregator microservice design pattern. In its simplest form, Aggregator would be a simple web page that invokes multiple services to achieve the functionality required by the application.

Proxy Microservice Design Pattern: Proxy microservice design pattern is a variation of Aggregator. In this case, no aggregation needs to happen on the client but a different microservice may be invoked based upon the business need. Just like Aggregator, Proxy can scale independently on X-axis and Z-axis as well. You may like to do this where each individual service need not be exposed to the consumer and should instead go through an interface.

Chained Microservice Design Pattern: Chained microservice design pattern produce a single consolidated response to the request. In this case, the request from the client is received by Service A, which is then communicating with Service B, which in turn may be communicating with Service C. All the services are likely using a synchronous HTTP request/response messaging.

Branch Microservice Design Pattern: Branch microservice design pattern extends Aggregator design pattern and allows simultaneous response processing from two, likely mutually exclusive, chains of microservices. This pattern can also be used to call different chains, or a single chain, based upon the business needs.

Shared Data Microservice Design Pattern: One of the design principles of microservice is autonomy. That means the service is full-stack and has control of all the components – UI, middleware, persistence, transaction. This allows the service to be polyglot, and use the right tool for the right job. For example, if a NoSQL data store can be used if that is more appropriate instead of jamming that data in a SQL database.

Asynchronous Messaging Microservice Design Pattern: While REST design pattern is quite prevalent, and well understood, but it has the limitation of being synchronous, and thus blocking. Asynchrony can be achieved but that is done in an application specific way. Some microservice architectures may elect to use message queues instead of REST request/response because of that."

He goes into greater detail in his post. Check it out if you’re looking for a good primer on microservices design patterns.
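To make the Aggregator idea a bit more concrete, here is a minimal Python sketch. It is not taken from Gupta's post: the two service functions (`fetch_profile`, `fetch_orders`) and their payloads are hypothetical stand-ins for real network calls, and the sketch assumes the aggregator can call them concurrently and merge the results into one response.

```python
import asyncio

# Hypothetical downstream services; real ones would be HTTP or queue calls.
async def fetch_profile(user_id: int) -> dict:
    await asyncio.sleep(0.01)  # simulate network latency
    return {"id": user_id, "name": f"user-{user_id}"}

async def fetch_orders(user_id: int) -> list:
    await asyncio.sleep(0.01)  # simulate network latency
    return [{"order": 1, "user": user_id}, {"order": 2, "user": user_id}]

async def aggregate(user_id: int) -> dict:
    """Aggregator: invoke each service concurrently and merge the results."""
    profile, orders = await asyncio.gather(
        fetch_profile(user_id), fetch_orders(user_id)
    )
    return {"profile": profile, "orders": orders}

if __name__ == "__main__":
    print(asyncio.run(aggregate(7)))
```

A Chained variant would instead have one service await the next in sequence, which is simpler to reason about but adds up the latency of every hop.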

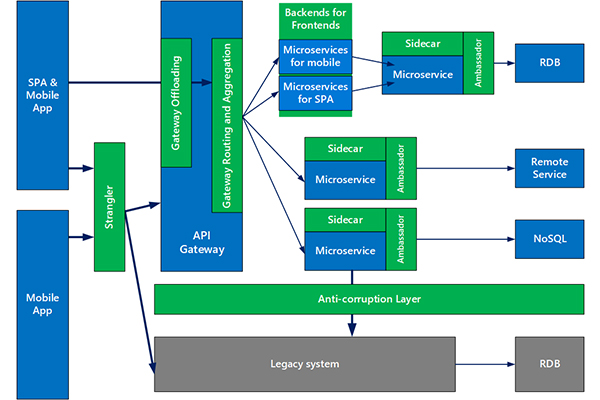

Naturally the Azure team at Microsoft has just published nine new microservices design patterns. Mike Wasson, lead content developer for AzureCAT patterns and practices, describes the new offerings from the Azure team in this recent post.

"The AzureCAT patterns & practices team has published nine new design patterns on the Azure Architecture Center. These nine patterns are particularly useful when designing and implementing microservices. The increased interest in microservices within the industry was the motivation for documenting these patterns. For each pattern, we describe the problem, the solution, when to use the pattern, and implementation considerations. Here are the new patterns:

Ambassador can be used to offload common client connectivity tasks such as monitoring, logging, routing, and security (such as TLS) in a language agnostic way.

Anti-corruption layer implements a façade between new and legacy applications, to ensure that the design of a new application is not limited by dependencies on legacy systems.

Backends for Frontends creates separate backend services for different types of clients, such as desktop and mobile. That way, a single backend service doesn't need to handle the conflicting requirements of various client types. This pattern can help keep each microservice simple, by separating client-specific concerns.

Bulkhead isolates critical resources, such as connection pool, memory, and CPU, for each workload or service. By using bulkheads, a single workload (or service) can’t consume all of the resources, starving others. This pattern increases the resiliency of the system by preventing cascading failures caused by one service.

Gateway Aggregation aggregates requests to multiple individual microservices into a single request, reducing chattiness between consumers and services.

Gateway Offloading enables each microservice to offload shared service functionality, such as the use of SSL certificates, to an API gateway.

Gateway Routing routes requests to multiple microservices using a single endpoint, so that consumers don't need to manage many separate endpoints.

Sidecar deploys helper components of an application as a separate container or process to provide isolation and encapsulation.

Strangler supports incremental migration by gradually replacing specific pieces of functionality with new services."

Wasson concludes with his own take on the whys and hows of microservices design patterns. "The goal of microservices is to increase the velocity of application releases, by decomposing the application into small autonomous services that can be deployed independently. A microservices architecture also brings some challenges, and these patterns can help mitigate these challenges."
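Of the nine patterns, Bulkhead is one of the easiest to illustrate in a few lines. The following Python sketch is only one possible reading of the pattern, not the Azure team's implementation: the `Bulkhead` class and its `call` method are hypothetical names, and the sketch assumes that rejecting excess calls immediately is acceptable for the workload.

```python
import threading

class Bulkhead:
    """Caps concurrent calls for one workload so it cannot starve others."""

    def __init__(self, max_concurrent: int):
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, func, *args, **kwargs):
        # Fail fast instead of queueing when this compartment is full.
        if not self._slots.acquire(blocking=False):
            raise RuntimeError("bulkhead full")
        try:
            return func(*args, **kwargs)
        finally:
            # Always free the slot, even if func raised.
            self._slots.release()

if __name__ == "__main__":
    # One bulkhead per downstream dependency (names are illustrative).
    payments = Bulkhead(max_concurrent=2)
    print(payments.call(lambda amount: f"charged {amount}", 10))
```

Giving each dependency its own `Bulkhead` instance means a slow or failing service can exhaust only its own slots, which is the isolation the pattern describes.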

Posted by Lafe Low on 02/28/2018

Hey there everyone, Lafe here again. And once again, every month or so my fellow Visual Studio Live! blogger Rich Seeley will present a more technical approach to these blog posts. His latest post details some of the clamoring and confusion going on around C#, most notably that C# fans are being left out in the cold when it comes to AI.

What's up with C#?

In a word: Lots.

There's been plenty of news over on https://visualstudiomagazine.com/home.aspx.

Some of the news has been about new features for C#. But one of the big stories is about C# coders being left out of Microsoft’s Artificial Intelligence game. In .NET Coders Clamor for C# Support in Microsoft's CNTK AI Toolkit, David Ramel, editor of Visual Studio Magazine, uncovered unrest in posts on the Issues section of the Microsoft Cognitive Toolkit (CNTK) GitHub repository for the open source toolkit for commercial-grade distributed deep learning.

While CNTK supports Python, the current AI darling, as well as BrainScript, .NET developers are wondering why more traditional .NET languages like C#, Visual Basic and F# are not included.

Here's some of what Ramel found in posts on the GitHub site where a developer with the handle nikosdim1 wrote a post titled "C# and .NET support barking," highlighting the lack of C# support:

"Strangely, though, it seems that we have to abandon C#/VB/F# and learn python because CNTK is more supported in this language (OK C++ too but this is not a 'clean' .net language) maybe because other frameworks rely more on Python."

In the opinion of this poster it seems .NET developers are being told not to use C# or Visual Basic or F# because Microsoft doesn’t seem to fully support them.

Another developer posted: "I'm eager to finally work with deep learning on Windows with C# instead of on Linux with Python."

A poster who appeared to be with Microsoft tried to reassure the developers that support for C# and the other traditional languages is in the works but added there is no timeframe.

Microsoft is famously closemouthed about these issues. But when news breaks, you’ll find it on VisualStudioMagazine.com.

Better news for C# developers is Microsoft’s commitment to speeding updates.

In Microsoft Quickens C# Release Cadence, Unveils v7.1, Ramel told readers: "Mads Torgersen, lead designer for C#, said the team is moving to 'point releases' to keep up with associated tooling -- like Visual Studio -- and the underlying .NET Framework itself that are shipped more frequently. This, he said, brings new features to developers in a trickle so they can take advantage of them sooner."

In January, Amazon Web Services also had good news for C# developers as reported in AWS Cloud Adds .NET Core 2.0 Support for C# Coding of Lambda Functions. AWS announced support for .NET developers using C# to write Lambda functions “while leveraging .NET Core 2.0 libraries.” Lambda is the foundation of Amazon's “serverless computing” service.

To learn more about the latest features in C#, read this Visual Studio Magazine article about a presentation at a recent Visual Studio Live! conference with Microsoft's Adam Tuliper. C# developers with an interest in tuples will find a lot here. It's the next best thing to attending Visual Studio Live!, where you would have actually had a chance to chat with Tuliper, as is the case with all of our presenters.

If you are really looking for a deep dive into C#, go to Analysing C# code on GitHub with BigQuery by London-based Microsoft MVP Matt Warren. As he explains: "...in this post I am going to be looking at all the C# source code on GitHub and what we can find out from it." He found answers to both controversial and more prosaic C# questions ranging from "Tabs or Spaces?" to "How many lines of code (LOC) are in a typical C# file?" If you're curious about all things C# this is a great read.

Want to keep up with C# happenings and do more than just read about them, perhaps by suggesting features and bug fixes? Visit GitHub's C# Language Design page, "the official repo for C# language design." It welcomes visitors and explains: "This is where new C# language features are developed, adopted and specified. C# is designed by the C# Language Design Team (LDT) in close coordination with the Roslyn project, which implements the language."

Among the things you'll find on the site are:

- Active C# language feature proposals

- C# language design meetings

- C# language specifications

- Summary of the language version history

For those who want to get involved, the Design Team suggests: "If you discover bugs or deficiencies in the above, please leave an issue to raise them, or even better: a pull request to fix them."

Finally, here's a question where there doesn't seem to be an answer. C# predates Twitter with its famous hashtags. But in the 21st century wouldn’t it be easier to rename the language Csharp since that's what you have to do when you post to Twitter or create a URL on the web?

Posted by Lafe Low on 02/15/2018

In this corner, we have waterfall, long the champion of the sequential approach through the typical development phases such as design, development, testing, deployment, and maintenance. Developers work through each phase in order, like a "waterfall" cascading down over the rocks.

And in this corner, we have agile. Sometimes you'll see it as big Agile with a capital A, other times as little agile with a lowercase a. Agile is certainly a livelier approach, taking an adaptive and flexible role in the planning, development, delivery, and continuous updating stages. Instead of doing one phase at a time, teams work on all phases, all the time.

So is it one or the other? Should you use the older waterfall approach or the more adaptive agile approach? As you’ll see from some of the expert analysis we've gleaned from the blogosphere, the prevailing attitudes and preferences lean heavily toward agile.

Every development project is different. Every client, even an internal one, has different requirements. Few get this concept as well as the folks at The Digital Project Manager. The site has a deep analysis to help you determine the best approach for a particular project, not really taking a side but providing a thoughtful explanation of the determining factors. Deeper into her analysis, writer Suzanna Haworth dives right in:

"What should influence your decision on which methodology to apply to a project? Until you know what the project is and other influencing factors, you’re not going to be able to decide which is best suited. Some (or all) of these factors should be taken into account when looking at methodologies:

- Size of the project

- Duration

- Complexity

- Organizational factors

- Clients or stakeholders, external and internal

What projects can Waterfall be suited to? Waterfall projects are:

- Projects where you’re working with other organizations or remote workers

- Projects with a fixed scope, time and budget

- Smaller, well-defined and simpler projects

- Projects with an absent client.

What projects can Agile be suited to? Agile projects are:

- Projects where your organization is responsible for the whole process

- Projects with scope for changing requirements

- Larger, undefined, complex projects

- Projects with an involved client.

But do organizations really take into account all of these factors? What, or who, actually decides a process? Possibly the main thing that influences a chosen methodology is existing processes. Your organization’s current processes are likely to determine how you run your project—whatever that project is. It’s the classic one-size-fits-all approach. Given that each project has different needs, and can differ quite widely in influencing factors, this isn’t the best way to run every project at your organization."

At this point, Haworth admits she has been sitting on the fence, but then she does have to agree agile is better suited for modern digital projects. "Both methodologies...have benefits in their own right. But if we're living in an ideal world, the Agile approach does suit pure digital projects more. All the principles do seem to fit the digital landscape, where evolving needs and change are present throughout all projects." There you have it.

Approaching each step in the development phase individually has its benefits and its drawbacks. This affects how you deal with mistakes and subsequent corrections, how quickly you can resolve them, and how expensive it becomes to do so. Agile Nutshell gives us a concise, graphic overview of how these phases flow from one to the other, and how best to navigate them with both methodologies. Post author Jonathan Rasmusson takes us through the grid:

"Traditional waterfall treats analysis, design, coding, and testing as discrete phases in a software project. This worked ok when the cost of change was high. But now that it's low it hurts us in a couple of ways.

- Poor quality: First off, when the project starts to run out of time and money, testing is the only phase left. This means good projects are forced to cut testing short and quality suffers.

- Poor visibility: Secondly, because working software isn't produced until the end of the project, you never really know where you are on a Waterfall project. That last 20 percent of the project always seems to take 80 percent of the time.

- Too risky: Thirdly you've got schedule risk because you never know if you are going to make it until the end. You've got technical risk because you don't actually get to test your design or architecture until late in the project. And you've got product risk because don't even know if you are building the right until it's too late to make any changes.

- Can't handle change: And finally, most importantly, it's just not a great way for handling change.

Instead of treating these as fixed stages, Agilists believe these are continuous activities. By doing them continuously:

- Quality improves because testing starts from day one.

- Visibility improves because you are 1/2 way through the project when you have built 1/2 the features.

- Risk is reduced because you are getting feedback early.

- Customers are happy because they can make changes without paying exorbitant costs."

Clearly, Agile Nutshell is a fan of agile over waterfall, and with that compelling argument it's difficult to disagree.

You would expect to find some sage advice on choosing the best development methodology from a place like the Project Management Institute. And indeed, the PMI site does not disappoint. This session was presented at a conference in 2012 but is still germane today, focusing on selecting a development methodology best suited for large-scale ERP projects:

- "Have you ever wondered how to deliver your project quicker and realize business value sooner from your ERP implementations?

- Have you ever wondered how to remove all of the "ceremony" of the implementation process and the "waste" involved with an ERP implementation while preserving the integrity of the delivered ERP package methodology?

- Have you ever wondered if there was a way to be flexible enough during ERP implementations, and that changing business requirements could be provided to a project team mid-implementation, without significantly affecting the overall cost and schedule?

- Have you ever wondered how to improve the efficiency and productivity of your existing ERP teams by doing 'more with less' in today's economy?

These are common questions that are asked when discussing how to improve the implementation of ERP projects. Consideration of Lean concepts and agile techniques to accelerate the time to delivery and the realization of benefits is becoming more prevalent. Project managers are challenging the ways they have been traditionally implementing projects and are seeking new approaches. However, there are many considerations in determining the right approach."

Check out the full presentation, in which the authors discuss the key determining factors to consider when deciding whether a particular project is better suited for agile or waterfall.

Posted by Lafe Low on 01/30/2018

Howdy readers, Lafe here. About once a month, my partner in crime, Rich Seeley, has been doing technical takeovers of this blog. Here's his latest post, which goes over the fundamentals of implementing Scrum using Visual Studio.

Even if you are new to Scrum, you probably know that it's basically an agile programming methodology that can be used with Visual Studio. In keeping with the agile philosophy, Scrum is the opposite of the old step-by-step "waterfall" approach to application development, where there is a lengthy product planning stage, then the programming, then the testing, and, if everything works perfectly (which it almost never does), deployment. The waterfall approach, which dates back to an era when IT had huge water-cooled mainframes running in air-conditioned computer rooms, is not likely to work well where businesses need mobile apps for the immediate gratification of consumers who may change their minds daily if not hourly.

"A key principle of Scrum is the dual recognition that customers will change their minds about what they want or need (often called requirements volatility) and that there will be unpredictable challenges—for which a predictive or planned approach is not suited," a Wikipedia article explains.

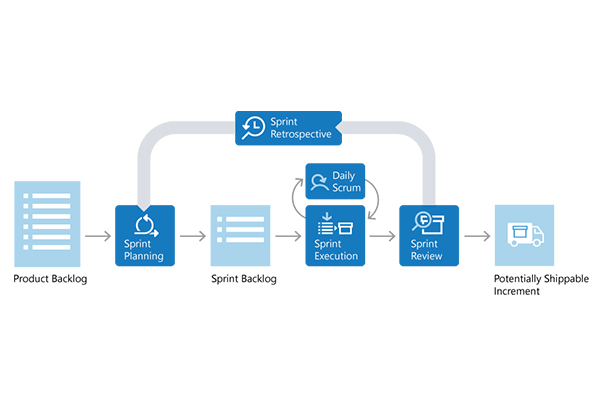

Scrum breaks development down to smaller steps. The planning stage, which could take months in waterfall, is minimized to quickly come up with basic requirements. "We need an app for impulse buyers so they can view and buy our featured smart gadget quickly and easily."

From there you go to the build stage where your team of developers creates an app to do that.

Testing comes next and if the app passes review, you might be able to deploy it.

Of course, there's likely to be requirements volatility as sales and marketing people realize the app needs to be changed. In old school development this could be a crisis. But with Scrum it just creates another step in the ongoing application development process.

The Scrum team just moves on with the new requirements to another incremental stage of planning, building, testing and review, as consultant Steve Stedman explains in a very short, animated and entertaining YouTube video, Introduction to Scrum in 7 Minutes. These incremental stages are called Sprints in Scrum terminology and usually take one to three weeks, Stedman explains. Sprints are repeated until the app is "feature complete," he says.

This is all part of what the Wikipedia article calls "an evidence-based empirical approach—accepting that the problem cannot be fully understood or defined up front, and instead focusing on how to maximize the team's ability to deliver quickly, to respond to emerging requirements, and to adapt to evolving technologies and changes in market conditions."

Once you understand the basic concepts, a place to go for in-depth information is the "Home of Scrum," Scrum.org, which "provides comprehensive training, assessments and certifications to improve the profession of software delivery." The site offers:

- Resources to Learn About Scrum

- Professional Scrum Training

- Professional Scrum Certifications

For those using Visual Studio for Scrum development, Microsoft offers specifics with special focus on the team concept.

What is Scrum? by Microsoft's Gregg Boer defines the three roles team members play in the development process:

Product Owner

Responsible for what the team is building, and why they're building it. The product owner is responsible for keeping the backlog up-to-date and in priority order.

Scrum Master

Responsible to ensure the scrum process is followed by the team. Scrum masters are continually on the lookout for how the team can improve, while also resolving impediments (blocking issues) that arise during the sprint. Scrum masters are part coach, part team member, and part cheerleader.

Scrum Team

These are the individuals that actually build the product. The team owns the engineering of the product, and the quality that goes with it.

Boer also explains the unique terminology in the Scrum process, beginning with "Product Backlog," which doesn't have the negative connotation of a logjam of unfinished business but is rather "a prioritized list of value the team can deliver." So it's really more of a to-do list. The Product Owner is the custodian of the Product Backlog and is responsible for prioritizing and reprioritizing items, and making additions and changes to the list of things the Scrum team is developing.

There is also a Sprint Backlog where the team decides what items it will be able to complete in a given Sprint.

"Often, each item on the Sprint Backlog is broken down into tasks," Boer explains. "Once all members agree the Sprint Backlog is achievable, the Sprint starts."

This seems a good way to avoid the common application development bugaboo: overpromising and underdelivering.

To determine the status of the tasks in the Sprint Backlog, Boer recommends that the team hold a Daily Scrum, a 15-minute meeting where team members can discuss how work is progressing:

To aid the Daily Scrum, teams often review two artifacts:

The Task Board

Lists each backlog item the team is working on, broken down into the tasks required to complete it. Tasks are placed in To Do, In Progress, and Done columns based on their status. It provides a visual way of tracking progress for each backlog item.

The Sprint Burndown

A graph that plots the daily total of remaining work. Remaining work is typically in hours. It provides a visual way of showing whether the team is "on track" to complete all the work by the end of the Sprint.
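The arithmetic behind a Sprint Burndown is simple enough to sketch in Python. This is an illustration only, not something from Boer's article: the function names and the straight-line "ideal" used to judge whether the team is on track are assumptions, and the hours are made up.

```python
from typing import List

def burndown(total_hours: float, completed_per_day: List[float]) -> List[float]:
    """Return the remaining work at the end of each day of the Sprint."""
    remaining = []
    left = total_hours
    for done in completed_per_day:
        left = max(left - done, 0)  # remaining work never goes below zero
        remaining.append(left)
    return remaining

def on_track(total_hours: float, completed_per_day: List[float], sprint_days: int) -> bool:
    """Compare actual remaining work with a straight-line ideal (an assumption)."""
    days_elapsed = len(completed_per_day)
    ideal = total_hours * (1 - days_elapsed / sprint_days)
    points = burndown(total_hours, completed_per_day)
    actual = points[-1] if points else total_hours
    return actual <= ideal

if __name__ == "__main__":
    # Hypothetical 10-day Sprint with 100 hours of estimated work.
    print(burndown(100, [10, 20, 5]))
    print(on_track(100, [10, 20, 5], sprint_days=10))
```

Plotting the list returned by `burndown` against the day number gives the graph described above.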

At the completion of a Sprint, the team holds a Sprint Review where they meet with the Product Owner and other stakeholders to show off the product, known as an "Increment" or a "Potentially Shippable Increment" if the owner approves it. There is also a Sprint Retrospective where team members go over what went right, what went wrong and how to improve things for the next Sprint.

Since the Increment may not be a final product, the Scrum Team will likely go on to do another Sprint. This is the heart of the agile process which Boer characterizes as "Repeat. Learn. Improve."

Posted by Lafe Low on 01/19/2018

As you develop new apps, your data model changes by necessity. Entity Framework Core migrations (or EF Core migrations, as the cool kids call them) help you keep your data model in sync with the database. They help your apps run smoothly as they evolve and as you develop new apps that might draw upon the same database. Here are a few tutorials we found that can help ease the process:

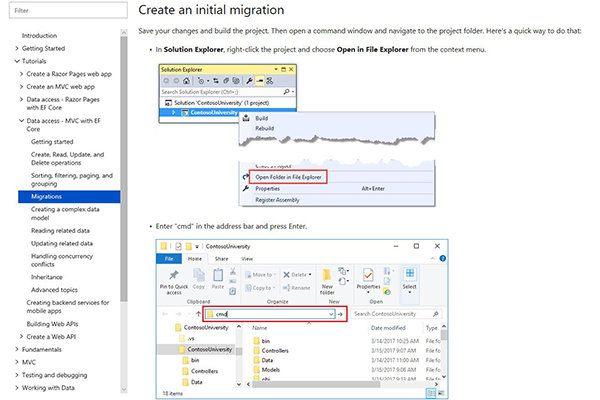

You'd expect our buddies at Microsoft to present some compelling tutorials on complex and semi-complex technical topics like this. And indeed they do. This tutorial, the fourth in a series of 10, takes us through using EF Core migrations to manage data model changes.

They open with,

"When you develop a new application, your data model changes frequently, and each time the model changes, it gets out of sync with the database. You started these tutorials by configuring the Entity Framework to create the database if it doesn't exist. Then each time you change the data model—add, remove, or change entity classes or change your DbContext class—you can delete the database and EF creates a new one that matches the model and seeds it with test data.

This method of keeping the database in sync with the data model works well until you deploy the application to production. When the application is running in production, it is usually storing data that you want to keep, and you don't want to lose everything each time you make a change such as adding a new column. The EF Core Migrations feature solves this problem by enabling EF to update the database schema instead of creating a new database."

Veteran Visual Studio Live! presenter Ben Day maintains his own blog, on which he has some excellent step-by-step tutorials and walkthroughs. To help ease some of the confusion around EF migrations, he has, as you would expect, a well-organized and easy-to-understand walkthrough, complete with section headers, screen shots, and code samples.

It's a nice straightforward entry. "Here's a walk-through on how to create a new solution that uses an ASP.NET Core project, an MSTest unit test project, and Entity Framework Core 1.1 (EFCore1.1). I'm not sure if you’ve found this to be the case but I've been struggling with the documentation for a few days trying to figure out how to get EF Core Migrations working with the "dotnet ef" command line. This sample will show you how to do all of this with the current version of the .NET Core SDK. For this walk-through I'm going to use the solution name of "Benday.EfCore." We'll start by using my "create" script from that blog post."

Ben's walkthrough also lets you simply go directly to the code if you'd like. He provides a link to do so before he gets into his walkthrough.

This is another thoughtfully and cleanly organized tutorial. It breaks up each section with section headers (as represented in the mini table of contents) and shows code samples in tinted boxes along the way. The tutorial begins with a roadmap of what you'll be learning.

"The following topics are covered in this document:

- Creating a Migration

- Removing a Migration

- Applying a Migration

- Reversing a Migration

- Applying a Migration to a Remote Database

- Executing Custom SQL

- Targeting Multiple Providers

When developing applications, the model is likely to change often as new requirements come to light. The database needs to be kept in sync with the model. The migrations feature enables you to make changes to your model and then propagate those changes to your database schema. Migrations are enabled by default in EF Core. They are managed by executing commands. If you have Visual Studio, you can use the Package Manager Console (PMC) to manage migrations. Alternatively, you can use a command line tool to execute Entity Framework CLI commands to create a migration."
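The idea these tutorials share, updating the schema in place rather than recreating the database, can be sketched outside of EF Core. The Python example below is not EF Core and makes no claim about how EF Core is implemented: it uses the standard `sqlite3` module, and the migration names, the `migration_history` table, and the `migrate` function are all hypothetical. It shows only the general technique of applying ordered changes once and recording which ones have run.

```python
import sqlite3

# Hypothetical migrations, applied in order: (name, SQL statement).
MIGRATIONS = [
    ("001_create_students",
     "CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT)"),
    ("002_add_email",
     "ALTER TABLE students ADD COLUMN email TEXT"),
]

def migrate(conn: sqlite3.Connection) -> list:
    """Apply migrations not yet recorded in the history table; return their names."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS migration_history (name TEXT PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT name FROM migration_history")}
    newly_applied = []
    for name, sql in MIGRATIONS:
        if name in applied:
            continue  # already run against this database
        conn.execute(sql)
        conn.execute("INSERT INTO migration_history (name) VALUES (?)", (name,))
        newly_applied.append(name)
    conn.commit()
    return newly_applied

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(migrate(conn))  # first run applies every migration
    print(migrate(conn))  # second run finds nothing left to apply
```

Because existing rows are left alone and only the missing changes are applied, data already in the database survives a model change such as adding a column.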

Posted by Lafe Low on 12/21/2017

As we wrap up 2017, we hope it was a good year for you. It was a good year for us—great events, new technologies, lots going on. Looking ahead to 2018, we're filling out the calendar for the Visual Studio Live! events for next year—our 25th year of bringing you Visual Studio Live! We have the dates and locations set, so you can start making tentative plans. We even have the agenda for the Las Vegas event in March posted, so you can start crafting your schedule.

As always, every Visual Studio Live! event brings you the latest cutting edge sessions exploring how you can get the most out of the Microsoft development technology stack. The sessions and our expert speakers dive deep into Visual Studio 2017, ASP.NET Core, Angular, Xamarin, R, mobile, and other technologies. Whether you opt for the full day hands-on labs or the laser sharp focus of the individual sessions, our cadre of experts is ready to help you become a better coder.

The first event of the year is once again Visual Studio Live! Las Vegas, March 11-16, 2018, Las Vegas, NV. This one will be held at Bally’s Hotel and Casino. And we already have the agenda set and posted. You can check it out here.

The rest of next year’s events are on the following dates and in the following locations:

- Visual Studio Live! Austin, April 30-May 4, 2018, Austin, TX. We'll be holding this one in the Hyatt Regency in Austin.

- Visual Studio Live! Boston, June 10-14, 2018, Cambridge, MA. This one will once again be at the Hyatt Regency in Cambridge overlooking the historic and scenic Charles River.

- Visual Studio Live! Redmond, August 13-17, 2018, Redmond, WA. Come join your colleagues on the Microsoft campus. Rub elbows with Microsoft developers and employees and get a feel for campus life.

And stay tuned for more details as we finalize the websites and agendas for these events:

- Visual Studio Live! Chicago, Sept. 17-20, 2018, Chicago, IL

- Visual Studio Live! San Diego, Oct. 7-11, 2018, San Diego, CA

- Visual Studio Live! Orlando, Dec. 2-7, 2018, Orlando, FL

This last event of the year is once again presented as part of Live! 360, which also includes TechMentor, Office and SharePoint Live!, SQL Server Live!, and Modern Apps Live! And as always, you can craft your own agenda, a little Visual Studio Live! with some SQL Server Live! and Modern Apps Live! mixed in. Whatever you like—your ticket in is your key to the kingdom. You can pick and choose from the five conferences and get just what you want.

Check out the Visual Studio Live! site here for the latest as we finalize plans and agendas for our 25th year events.

Posted by Lafe Low on 12/14/2017

Howdy readers, Lafe here. About once a month, my partner in crime, Rich Seeley, has been doing technical takeovers of this blog. Here's his latest post, which looks at how your Visual Studio wallpaper can help your productivity.

To some developers this may seem like a silly question. Who cares about the wallpaper of all things when you're on a deadline project that is not exactly going swimmingly and it seems like everybody in the world is breathing down your neck?

Good point.

In that state of mind, you may not even have noticed the drab, dare we say uninspiring, wallpaper like this.

It's okay. But you might be forgiven if it reminds you of the tile on your dorm room floor or that cheap hotel room where you stayed when … well, let’s not get into that. Anyway, it doesn't seem to say: "Let's write some beautiful code today."

Or if you aren't visually oriented, you might be just as happy if the wallpaper looks like nothing special.

This wallpaper seems to say: "Don’t distract me. I'm coding."

However, if you are working in a windowless cube or inhabit a home office set up in the 1960s-era fallout shelter under your grandfather's house, you might enjoy a peek at the outside world. This Visual Studio Community Wallpapers site does offer some eye candy.

Now you could glance at this and maybe think: "If I ever get this [expletive deleted] project done, maybe I can take a vacation to a place where there are calm waters and beautiful architecture." So maybe the wallpaper helps you set a goal for better work-life balance.

This brings us to the question of motivation.

There are authors who believe your work environment, which broadly speaking could include the look and feel of Visual Studio, may influence your productivity.

Jacob Morgan, author of The Future of Work, says corporations are beginning to realize that the employee experience is just as important to business success as the much touted customer experience. This includes how the employee interacts with the work environment with a new emphasis on "designing beautiful workplaces," Morgan notes in one of a series of articles he has written for Forbes.

So if you spend your days inside the Visual Studio environment maybe wallpaper could help make it a beautiful workspace.

Could it make you more productive?

Kayla Matthews has given that some thought in her blog.

"Countless apps and software suites can help you maximize your productivity," she writes. "But have you thought about switching your desktop background to a wallpaper that boosts productivity?"

Matthews doesn't say wallpaper can work wonders. She's from the "every little bit helps" school of motivation.

And she has some cool ideas. You might draw strength from looking at a hero like Albert Einstein (available at Wallpaper Abyss):

You can also get wallpaper with inspirational quotes:

- "If we did all the things we are capable of, we would literally astound ourselves." ~ Thomas A. Edison

- "Everything is theoretically impossible, until it is done." ~ Robert Heinlein

- "Research is what I'm doing when I don't know what I'm doing." ~ Wernher von Braun

There's more where these came from at https://www.brainyquote.com.

Another option is the aforementioned travel poster wallpaper.

"Although you may associate long-awaited beach vacations and global getaways with a lack of productivity," Matthews writes, "researchers have found taking trips makes people more productive."

If you have your doubts, read 4 Ways Going on Vacation Increases Your Productivity by Jon Levy, behavior scientist, on Inc. magazine's website.

So maybe wallpaper postcards of Maui or Miami or Montana would help you boost your productivity.

Matthews also previews wallpapers that help set down-to-earth goals including enhanced fitness, saving money, eating better, and breaking unhealthy habits.

If you feel creative and can't find a wallpaper to download that fits your mood or the mood you’d like to be in while coding, Adobe offers a site for you: Make custom wallpapers easily with Adobe Spark. No design skills needed.

The main thing is to find or create wallpaper that works for you and hopefully makes you more productive in your work or makes it a little more fun.

Fun in your work environment: What a concept.

Posted by Lafe Low on 10/19/2017

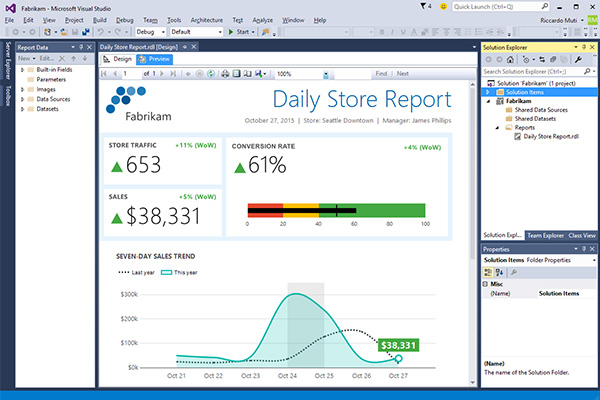

Howdy readers, Lafe here. About once a month, my partner in crime, Rich Seeley, has been doing technical takeovers of this blog. Here's his latest post, which looks at some resources for Visual Studio Reporting Services.

If you're beginning your Visual Studio career or perhaps even if you are a veteran user, generating sales and other business reports may seem like a challenge.

Reporting is important as most business apps are going to need to get data into a traditional Excel spreadsheet or some other format for business users, managers and executives.

It has not been easy to find information by Googling for help with Visual Studio 2017 basic reporting.

Until now.

Fortunately, this month Microsoft has offered new guidance in two blogs that will help you with reporting.

Microsoft Build Engine for Reporting Services

A Sept. 25 blog written by Brad Syputa, a software engineer on Microsoft's SQL Server BI team, announced availability of "Microsoft Build Engine (MSBuild) support for building and deploying Reporting Services projects with SQL Server Data Tools for Visual Studio 2015 or Reporting Services Projects for Visual Studio 2017."

"By invoking msbuild.exe on your project or solution file, you can orchestrate and build products in environments where Visual Studio isn’t installed," Syputa writes. "This is key for developers looking to automate deployment of Reporting Services projects outside of Visual Studio, which can streamline the process of moving between development and production environments."

Visual Studio 2015 users can download SQL Server Data Tools here.

Visual Studio 2017 users can find out about Reporting Services Projects here.

"Once you install the latest update, depending on which version of Visual Studio you're using, the new files enabling MSBuild for your projects will be installed in different folder paths," Syputa writes.

Visual Studio 2015 users need to look for a standalone folder called "MSBuild" under Program Files (x86).

For Visual Studio 2017, there is a "nested folder in your Visual Studio folder hierarchy."

Syputa has provided screenshots in the blog to help you visualize where to find the tool in your environment.

"If you see these new folders, this means you'll now see MSBuild support as an option for Build, Clean, Compile and Deploy actions in any new report project you create," Syputa writes. "For existing projects, you will be prompted to do a one-time upgrade the first time you open it after installing the latest updates. The upgrade will automatically backup your project first, then proceed. Once it's completed, you’ll have the new MSBuild functionality available to you."

Once you have that sorted out, there are walkthroughs for using MSBuild for Visual Studio 2015 here and Visual Studio 2017 here.

Keep an eye on those sites as more walkthroughs are promised in the coming weeks.
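To make the "invoking msbuild.exe on your project or solution file" idea a bit more concrete, here is a minimal sketch of how a build script might assemble that command line. It is written in Python purely to keep it short and scriptable. The `/t:` (target) and `/p:` (property) switches are standard MSBuild options, and the target names come from the Build, Clean and Deploy actions Syputa lists. The project file name `SalesReports.rptproj`, the `Release` configuration and the helper function names are hypothetical, and this sketch assumes msbuild.exe is on your PATH and that each target is exposed for your project.

```python
import shutil
import subprocess
from typing import List, Optional


def msbuild_command(project: str, target: str = "Build",
                    configuration: str = "Release") -> List[str]:
    """Assemble the argument list for one MSBuild invocation."""
    # /t: selects the target, /p: sets a build property.
    return ["msbuild.exe", project, f"/t:{target}",
            f"/p:Configuration={configuration}"]


def run_msbuild(project: str, target: str = "Build") -> Optional[int]:
    """Run MSBuild if it is on PATH; return its exit code, or None if absent."""
    if shutil.which("msbuild.exe") is None:
        return None
    return subprocess.run(msbuild_command(project, target)).returncode


# Hypothetical project name; only prints the command, it does not run it.
print(" ".join(msbuild_command("SalesReports.rptproj", "Deploy")))
```

On a build server without Visual Studio, a script along these lines could call `run_msbuild` for the build step and again for the deploy step, stopping if either returns a non-zero exit code.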

SQL Reporting Services

For everyone working with SQL, Create and manage Reporting Services, a Microsoft blog published Sept. 5, 2017, explains how to use SQL Reporting Services, Visual Studio Team Services (VSTS) and Team Foundation Services (TFS) for creating reports. It takes just four minutes to read the whole blog post according to the authors.

Following the directions in the article, including helpful screenshots, you can learn how to:

- Allow users to update the report without granting them read access to the databases.

- Share your reports in Team Explorer under the Reports folder.

- Support subscriptions to reports that can be sent daily over email.

- Manage the properties of your reports so that they return results faster and use fewer server resources.

- Use Transact-SQL queries to retrieve the data for your reports.

There are several reporting tools covered in the Microsoft blog, starting with Report Builder 2.0, which can be downloaded here. This is an "intuitive environment for authoring reports." Intuitive apparently means you shouldn't have to read a blog or any other documentation to figure out how to use it. Report Builder is designed with Microsoft Office apps, such as Excel, in mind, so business users can work with the tools they already know. For the Visual Studio developer, Report Builder 2.0 lets you "work with data, define a layout, preview a report, and publish a report to a report server or a SharePoint site."

There is also a wizard for creating tables or charts, in addition to query builders, and an expression editor, which provides capabilities for HTML editing and web design. That should come in handy when organizations want reports online.

If, in addition to Visual Studio, you have SQL Server Business Intelligence Development Studio, you can use the Microsoft Report Designer GUI tool to create "full-featured Reporting Services reports" without knowing Report Definition Language (RDL).

Microsoft also offers a series of step-by-step tutorials showing you how to create Visual Studio reports with SQL that would be helpful for beginners, starting with Lesson 1: Creating a Report Server Project (Reporting Services).

Third-Party Tools

Beyond what Microsoft offers, there are also third-party tools to help with Visual Studio reporting.

This month GrapeCity Developer Solutions began providing .NET developers with a new spreadsheet server API for creating and working with Microsoft Excel-compatible spreadsheets, reports David Ramel, editor in chief of Visual Studio Magazine.

"Called Spread.Services, the new offering simplifies the creation, conversion and sharing of spreadsheets across Windows, Android and Mono platforms, while supporting .NET Core 2.0 and targeting .NET Standard 1.4 for multi-platform support," Ramel writes in a recent Visual Studio Magazine article, GrapeCity Offers Spreadsheet Server API Across .NET Platforms.

GrapeCity, a Microsoft Visual Studio Partner, says developers can "easily implement code to process, collate, and consolidate data from large sets of spreadsheet documents, including reports, itineraries, and financial analyses."

The Spread.Services interface-based API uses Excel's document object model, and with it GrapeCity says the tools can do some pretty nifty things: "Advanced calculations become lightning-fast with more than 450 built-in Excel-compatible formula functions. Rich data entry forms are easily created using data validation rules and formulas. Spread.Services also enhances security -- users can hide formulas, sheets, and other objects from the end user and set locked and protected ranges and sheets with password protection."

Old reliable Crystal Reports has also been available with Visual Studio for a long time. The latest information on downloads for Visual Studio 2010 through 2017 is available on this SAP Community wiki.

Posted by Lafe Low on 09/28/2017

We hope you didn't miss the recent Visual Studio Live! event held on the Microsoft campus in Redmond, but if you did, you're in luck. There were several sessions recorded that are now available to watch on Microsoft's Channel 9. Here's a list of what's available, and a brief synopsis of each:

Getting Started with Aurelia

Brian Noyes, CTO and Co-founder, Solliance, and veteran Visual Studio Live! presenter, led a rich and informative session on starting to work with Aurelia. Aurelia is a relatively new Single Page Application framework. Its primary benefits include a rich data binding system, dependency injection and modules, and a powerful routing and navigation system.

Bots are the New Apps: Building Bots with ASP.NET WebAPI & Language Understanding

Microsoft's own Senior Technical Evangelist Nick Landry's session covered the use of bots. These are rapidly becoming as common a way to interact with a service or application as a web site or mobile experience. Nick's session showed how to build and connect intelligent bots to interact via text/SMS, Skype, Slack, Facebook, e-mail and other popular services.

SOLID – The Five Commandments of Good Software

Another Microsoft presenter Chris Klug, Senior Software Developer, led a session that focused on the SOLID principles. These are essentially the five commandments of the software world. They are the foundation of building good software. He worked through each of the principles, explained what they mean and how to put them into practice.

Distributed Architecture Microservices and Messaging

Visual Studio Live! co-chair Rockford Lhotka, CTO of Magenic, presented a session on using distributed architecture. He explained how building distributed systems promotes reliability, scalability, deployment flexibility, and decoupling of disparate services and apps. He also covered creating microservices, apps, and messaging patterns.

Microsoft's Open Source Developer Journey

This keynote session, presented by Microsoft's Scott Hanselman, Principal Community Architect for Web Platform and Tools, took attendees through the process of Microsoft's evolution into an open source organization. He covered how open source .NET fits into the larger open source world, providing listeners with historical context and lots of technical demos of .NET Core and ASP.NET Core.

Getting to the Core of .NET Core

Adam Tuliper, Principal Software Engineer, DX for Microsoft, led a session that dove deep into .NET Core. He explained exactly what .NET Core is and what it can do. He also explained a lot about the architecture and runtime, including how it works across different platforms like Windows, OSX, and Linux.

These sessions are a great preview of the content you'll see at the last 3 Visual Studio Live! events of the year:

Chicago

September 18-21, Chicago Marriott Downtown

Anaheim

October 16-19, Hyatt Regency Orange County

Orlando – part of Live! 360

November 12-17, Loews Royal Pacific Resort

Posted by Lafe Low on 09/11/2017

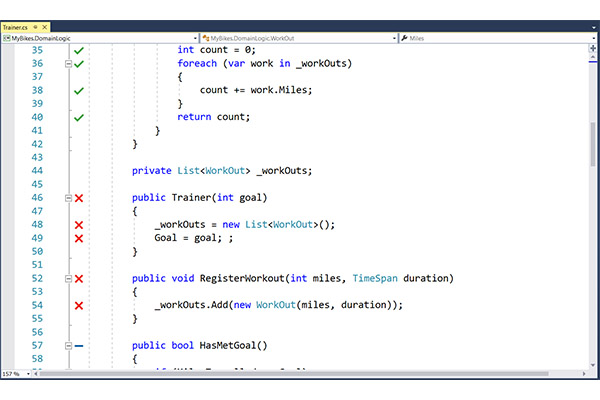

Howdy readers, Lafe here. About once a month, my partner in crime, Rich Seeley, has been doing technical takeovers of this blog. Here's his latest post, which looks at the Live Unit Testing feature introduced in Visual Studio 2017.

Write the code. Stop. Test the code. Try to figure out where the bug is. Try out a fix. Test again. Maybe it works and maybe it doesn't. Maybe it will get through QA or maybe it won't.

This is what Joe Morris, senior program manager, Visual Studio and .NET Team, calls old school testing. None dare call it drudgery.

But for developers working with Visual Studio 2017 Enterprise Edition, old school testing methods have been replaced with a cool new feature called Live Unit Testing. It allows you to run testing as you code, so you can immediately see lines of code that aren’t passing as well as lines that aren't being covered by any test. You can fix the failing code on the fly and know immediately if it passes. You can also quickly apply a test to uncovered code and see if it passes.

"It will tremendously improve your coding productivity and quality of code by showing the unit test and code coverage live in the editor while you're coding," Morris explains in a short video demoing Live Unit Testing. Usually words like "tremendously improve" are marketing hype but looking at Live Unit Testing in action it appears to be the real deal.

To contrast Live Unit Testing with old school testing, Morris shows us what he used to go through to run tests in the pre-Visual Studio 2017 world.

The tests had to be run "manually" and the results could then be viewed in the Test Explorer window. The trouble was Test Explorer didn't tell you very much. It was the equivalent of a GPS system that would leave you on the edge of a forty-square-mile area wherein the restaurant you were looking for might be located.

"I would look at the failed test," Morris said. "It tells me that the test failed in line 43. But that is not much useful info. To see more data on the failed test I would typically run Code Coverage Results for failed test. It tells me what blocks are covered and not covered. It still doesn't show any visualization in the code editor. I can click on show code coverage in the blocks in the editor. Even then it is not easy to figure out which one is covered and not covered or failing."

By contrast, Live Unit Testing is...well...live and quick and rather than looking for a needle in a haystack it pinpoints where the problems are.

So forget Test Explorer. To enable Live Unit Testing, go to the Test menu, select Live Unit Testing from the drop-down menu and click Start.

Within a few seconds you will see glyphs showing up in the left hand column of your code editor. Green checks on a line indicate tests covered by Unit Testing are passing. Red Xs indicate a line is failing the covered unit test. Blue dashes indicate no Unit Test coverage.

So it’s a matter of stopping and making fixes on the red and being good to go on the green. Meanwhile blue dashes remind you to find a test to make sure the blue line is okay.

Or as Microsoft describes it: "This will gently remind you to write unit tests as you are making bug fixes or adding features."
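The three glyph states can be summed up as a small decision rule. The sketch below is not how Visual Studio implements it. It is a toy model in Python, with made-up test names and line numbers, that assumes a line gets a green check only when every test covering it passes, a red X when at least one covering test fails, and a blue dash when no test covers it.

```python
from typing import Dict, Set


def line_glyph(line: int,
               coverage: Dict[str, Set[int]],
               passed: Dict[str, bool]) -> str:
    """Return the glyph for one source line in this toy model."""
    # Collect every test whose recorded coverage includes this line.
    covering = [test for test, lines in coverage.items() if line in lines]
    if not covering:
        return "blue dash"    # no unit test touches this line
    if all(passed[test] for test in covering):
        return "green check"  # every covering test passes
    return "red x"            # at least one covering test fails


# Hypothetical data: which lines each test executes, and whether it passed.
coverage = {"test_add": {10, 11}, "test_divide": {11, 20}}
passed = {"test_add": True, "test_divide": False}

for line in (10, 11, 20, 30):
    print(line, line_glyph(line, coverage, passed))
```

In this example line 11 is red even though `test_add` passes, because `test_divide` also runs through it and fails. That is why the popup showing which test is failing matters.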

With the lines marked with red glyphs, you are able to zero in using a popup that shows specifically which tests that line is passing or failing. Once you know what test is failing, you are pretty close to understanding what needs to be fixed. And when you make the fix to the line, the test results show up almost instantaneously.

"I identify the bug in my code," Morris explains. "I need to add another line of code to fix the code. As soon as I make that change the state of the code changes from red crosses to green checks indicating my code is passing the unit test now."

Where there is a blue dash showing that line of code is covered by zero tests, the fix is also swift. By going to the Unit Test window, you can add a test. The test runs and a green check indicates it passed.

Very quickly everything goes to green. By keeping Live Unit Testing running as you code, you can make sure your code passes and unit test coverage is 100 percent.

"That's very cool," Morris says. "I can see that all my lines of code are covered by a test and they all have a green check."

The feature works with C# and VB projects for .NET and supports the MSTest, xUnit and NUnit test frameworks.

"It uses VB and C# compilers to instrument the code at compile time," Microsoft explains in a Visual Studio blog covering the new feature. "Next, it runs unit tests on the instrumented code to generate data which it analyzes to understand which tests are covering which lines of code. It then uses this data to run just those tests that were impacted by the given edit providing immediate feedback on the results in the editor itself. As more edits are made or more tests are added or removed, it continuously updates the data which is used to identify the impacted tests."
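The "run just those tests that were impacted by the given edit" step is essentially a lookup against a coverage map. Here is a minimal Python sketch of that idea, assuming the map has already been produced by running tests on instrumented code. The test names, line numbers and function name are invented for illustration, and the real feature works at the compiler level rather than on a plain dictionary like this.

```python
from typing import Dict, List, Set


def impacted_tests(coverage: Dict[str, Set[int]],
                   edited_lines: Set[int]) -> List[str]:
    """Return the tests whose covered lines overlap the edited lines."""
    # A test is impacted when it executes at least one edited line.
    return sorted(test for test, lines in coverage.items()
                  if lines & edited_lines)


# Hypothetical coverage map: test name -> source lines it executes.
coverage = {
    "test_add": {10, 11},
    "test_subtract": {20, 21},
    "test_both": {11, 21},
}

# Editing line 11 should rerun only the two tests that pass through it.
print(impacted_tests(coverage, {11}))
```

After the impacted tests rerun, their fresh coverage data would replace the old entries in the map, which matches the "continuously updates the data" part of Microsoft's description.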

Posted by Lafe Low on 09/07/2017