One of the cool features in ASP.NET Core is support for the Dependency Injection software design pattern.

For developers working in object-oriented programming and web services, Microsoft's documentation frames dependency injection in terms of these best practices:

- Design services to use dependency injection to obtain their dependencies.

- Avoid stateful, static method calls (a practice known as static cling).

- Avoid direct instantiation of dependent classes within services. Direct instantiation couples the code to a particular implementation.

If you are not familiar with the concept of dependency injection, Wikipedia provides this basic definition:

“… dependency injection is a technique whereby one object (or static method) supplies the dependencies of another object. A dependency is an object that can be used [as] (a service). An injection is the passing of a dependency to a dependent object (a client) that would use it. The service is made part of the client's state. Passing the service to the client, rather than allowing a client to build or find the service, is the fundamental requirement of the pattern.”

In object-oriented programming, web services and Service-Oriented Architecture (SOA), the goal is to use objects as building blocks of an application in a way that allows you to make changes by adding and removing objects rather than writing extensive code. As Wikipedia explains: “The intent behind dependency injection is to decouple objects to the extent that no client code has to be changed simply because an object it depends on needs to be changed to a different one.”

Microsoft's pages outlining the fundamentals of dependency injection offer a simple definition: “A dependency is any object that another object requires.”
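
To make those definitions concrete, here is a minimal sketch of constructor injection. It is written in TypeScript rather than C#, and every name in it (MessageSender, ConsoleSender, RecordingSender, Notifier) is a hypothetical one chosen for illustration, not something taken from Microsoft's documentation.

```typescript
// A dependency is any object that another object requires.
// All names here are hypothetical, chosen only for illustration.
interface MessageSender {
  send(to: string, body: string): void;
}

// One implementation writes to the console...
class ConsoleSender implements MessageSender {
  send(to: string, body: string): void {
    console.log(`to ${to}: ${body}`);
  }
}

// ...another simply records what it was asked to send.
class RecordingSender implements MessageSender {
  readonly sent: string[] = [];
  send(to: string, body: string): void {
    this.sent.push(`${to}: ${body}`);
  }
}

// The client receives its dependency through the constructor instead of
// building one itself, so it is not coupled to a particular implementation.
class Notifier {
  constructor(private readonly sender: MessageSender) {}

  welcome(user: string): void {
    this.sender.send(user, "Welcome aboard");
  }
}

// Swapping the dependency requires no change to Notifier.
const recorder = new RecordingSender();
new Notifier(recorder).welcome("ada");
new Notifier(new ConsoleSender()).welcome("grace");
```

Because Notifier never instantiates a sender directly, either implementation can be passed in, which is the decoupling the Wikipedia quote describes.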

Diving into the Documentation

For developers who specifically want to start using dependency injection with ASP.NET Core, Microsoft offers step-by-step documentation with code samples and simple demonstration apps.

On Microsoft’s ASP.NET Blog, Jeffrey T. Fritz, Microsoft .NET Program Manager, explains the basics of Dependency Injection in ASP.NET Core, noting: “With ASP.NET Core, dependency injection is a fundamental tenet of the framework. All classes instantiated by the framework are done so through the container service that is maintained by the framework in a container and configured by default in the Startup/ConfigureServices method.”

Fritz reassures developers that the framework makes adopting dependency injection very straightforward: “ASP.NET Core makes it easy to get started with this design pattern by shipping a container that you can use with your application. Configure your application’s controllers, views, and other classes that are instantiated by the framework with parameters on the constructor method to have those types automatically created and passed in to your class.”

The blog contains code samples to illustrate how the design pattern works and points developers to Microsoft ASP.NET Dependency Injection documentation. The authors provide loads of code samples and offer the following recommendations to developers working with dependency injection:

- Avoid storing data and configuration directly in the service container. For example, a user's shopping cart shouldn't typically be added to the service container. Configuration should use the options pattern. Similarly, avoid "data holder" objects that only exist to allow access to some other object. It's better to request the actual item via dependency injection, if possible.

- Avoid static access to services.

- Avoid using the service locator pattern (for example, IServiceProvider.GetService).

- Avoid static access to HttpContext (for example, IHttpContextAccessor.HttpContext).

That is just a sample of the detailed information available on that site.

Beyond the Basics

Once you have the basics down, Steve Smith offers further insight in the MSDN article Writing Clean Code in ASP.NET Core with Dependency Injection. Smith has been a Microsoft MVP since 2002 and is a founding member of the ASPInsiders, an external advisory group for the ASP.NET product team.

He notes that dependency injection is “an increasingly common technique in .NET development, because of the decoupling it affords to applications that employ it.”

“ASP.NET Core not only supports DI,” Smith writes, “it also includes a DI container—also referred to as an Inversion of Control (IoC) container or a services container. Every ASP.NET Core app configures its dependencies using this container in the Startup class’s ConfigureServices method. This container provides the basic support required, but it can be replaced with a custom implementation if desired. What’s more, EF Core also has built-in support for DI, so configuring it within an ASP.NET Core application is as simple as calling an extension method.”
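
The idea of a services container that Smith describes can be sketched in a few lines. This is a toy written in TypeScript, not the ASP.NET Core container: ServiceContainer, register, resolve, Clock and ReportService are all hypothetical names, and the sketch leaves out things the real container handles, such as service lifetimes and automatic constructor resolution.

```typescript
// A toy services container. All names are hypothetical; this is not the
// ASP.NET Core API, only an illustration of the register/resolve idea.
class ServiceContainer {
  private readonly factories = new Map<string, (c: ServiceContainer) => unknown>();

  // Register a factory under a key, much as an app registers services at startup.
  register<T>(key: string, factory: (c: ServiceContainer) => T): void {
    this.factories.set(key, factory);
  }

  // Build the requested service, letting its factory pull in its own dependencies.
  resolve<T>(key: string): T {
    const factory = this.factories.get(key);
    if (factory === undefined) {
      throw new Error(`No service registered for "${key}"`);
    }
    return factory(this) as T;
  }
}

interface Clock {
  now(): Date;
}

class ReportService {
  constructor(private readonly clock: Clock) {}

  header(): string {
    return `Report for ${this.clock.now().getUTCFullYear()}`;
  }
}

// Composition root: the only place that knows which implementations are used.
const services = new ServiceContainer();
services.register<Clock>("clock", () => ({ now: () => new Date(Date.UTC(2018, 7, 9)) }));
services.register<ReportService>(
  "reports",
  (c) => new ReportService(c.resolve<Clock>("clock")),
);

const reportHeader = services.resolve<ReportService>("reports").header();
```

Resolving from the container is confined to the composition root here. ReportService still takes its dependency through the constructor, in line with the advice to avoid the service locator pattern.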

More about ASP.NET Core

If you are looking for an enthusiastic endorsement and overview of ASP.NET Core, don’t miss Philip Japikse's Q&A on Hands-On with ASP.NET Core and EF Core in Visual Studio Magazine.

“I think ASP.NET Core is the biggest game changer in the history of Web development using the Microsoft stack,” asserts Japikse, Developer, Coach, Author, Teacher, Microsoft MVP and Visual Studio Live! presenter.

“Microsoft developers are no longer restricted to running their applications on Windows Server, but can essentially run anywhere,” he explains. “This brings in a myriad of options for deployment targets, including popular containers (like Docker) and lower cost (than Windows) Linux distros. This also opens up .NET as a viable option in those organizations that require development tools to run cross platform. In the past, .NET developers were shut out from those opportunities since Java was the only large-scale enterprise toolset that could ‘check the box’ regarding running cross platform.”

Posted by Richard Seeley on 08/09/2018

The .NET bandwagon is rolling and this may be the time to jump onboard and get up to speed on all things .NET.

There's so much to learn about .NET Standard, .NET Core, .NET Framework, Mono, and Xamarin. Plus you need to look to the future of C# and Roslyn, so you know how everything will come together with Visual Studio and Visual Studio Code.

As an article in Visual Studio Magazine noted recently, .NET Core, the open-source, cross-platform alternative to .NET Framework, is increasingly becoming the runtime of choice for C# coders, according to a survey of developers. .NET Core was released two years ago, and usage has increased since the advent of .NET Core 2.0 one year ago.

"Microsoft advises that .NET Core be used for certain specific projects -- including highly scalable Web apps, Web apps on Linux or self-contained deployments -- while .NET Framework remains the best option for Windows-only projects," writes David Ramel, editor of Visual Studio Magazine.

For C# programmers using Visual Studio, Microsoft provides a simple tutorial, Build a C# Hello World application with .NET Core in Visual Studio 2017. Following the online instructions and screenshots, it looks like a pretty straightforward way to get started even though "Hello World" is not the equivalent of a mobile banking app.

For .NET overall, the developer community has grown by leaps and bounds since it was open sourced in 2015.

.NET Everywhere for Everyone

Another Visual Studio Magazine article, James Montemagno on .NET 'Everywhere for Everyone', backed up what seems at first to be an extravagant claim.

Montemagno, who serves as Principal Program Manager in Microsoft's Mobile Developer Tools group, gave a keynote speech at the Boston edition of the Visual Studio Live! Conference this past month where he called .NET "a vast, open, constantly growing, and ever-evolving ecosystem." He noted that millions of developers are leveraging .NET to build applications for virtually any platform.

"This is the best time to be a .NET developer," he told the audience.

The .NET ecosystem needs new developers, Montemagno told Visual Studio Magazine, and Microsoft wants to nurture a growing community with easily accessible educational resources.

"It has never been easier to get started with .NET and C# or any of the other languages," Montemagno said.

He suggested going to Microsoft's .NET Web site where "interactive online learning experiences" are available for free to anyone interested. "Those are the types of experiences that are going to enable the next generation of developers," he said, "right there in the browser, ready to go."

While C# is getting all the coverage, .NET developers can work in almost any known programming language. On a Microsoft Channel 9 YouTube video, The Future of .NET, a panel of .NET aficionados noted that Fortran and COBOL code is being ported to .NET as banks and other businesses seek to move from mainframes to more modern metal.

ASP.NET Core Security

With security top of mind these days, security expert Brock Allen will present a session titled "Modern Security Architecture for ASP.NET Core" at the September 17-20 Visual Studio Live! conference in Chicago. It’s designed to help developers get up to speed on the main components in ASP.NET Core for securing Web applications and Web APIs when using the open-source, cross-platform framework for building cloud-based, internet-connected applications. In Top Tips for Securing ASP.NET Core, an interview this month in Visual Studio Magazine, Allen previews his upcoming session and explains the security architecture that matters for those working with the framework.

Visual Studio Live! Chicago will feature tracks with topics including:

- What's new in C#

- Sharing code between full .NET, .NET Core, and Mono with .NET Standard

- Roslyn

Additional .NET related tools

Roslyn is a code name for the .NET Compiler Platform and, according to a Wikipedia article, may be named after Roslyn, Washington, the town where the Northern Exposure TV series was filmed (the show itself was set in the fictional town of Cicely, Alaska). Whatever the inspiration for the code name, it is "a set of open-source compilers and code analysis APIs for C# and Visual Basic .NET languages." It is designed to end the frustration developers have faced with “black box” compilers by exposing the APIs.

Xamarin tools share the C# codebase and are used "to write native Android, iOS, and Windows apps with native user interfaces and share code across multiple platforms, including Windows and macOS," according to a Wikipedia article.

Mono is an open-source project piloted by Xamarin, a subsidiary of Microsoft, and the .NET Foundation. It is designed for running .NET applications cross-platform including "Android, most Linux distributions, BSD, macOS, Windows, Solaris, and even some game consoles such as PlayStation 3, Wii, and Xbox 360," according to Wikipedia.

Posted by Richard Seeley on 07/13/2018

“In the beginner’s mind there are many possibilities, but in the expert’s there are few.”

― Shunryu Suzuki, Zen Mind, Beginner's Mind

If you’re a beginner in DevOps and you can’t figure out how to learn it on your own, don’t worry. DevOps is not about doing things on your own. It’s a team sport. Thinking of doing DevOps on your own is like thinking you could play football on your own. What are you going to do? Throw the football up in the air, run under it and catch it?

One of the keys to learning DevOps is to think about application development in terms of teamwork.

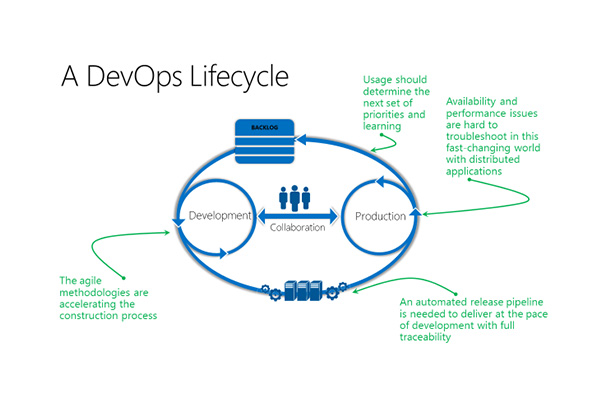

In a keynote at a recent VSLive! conference, Abel Wang explained how Microsoft's DevOps journey evolved when the company decided to give up its traditional waterfall approach to development.

He began with Microsoft’s definition of DevOps as "the union of people, process, and products to enable the continuous delivery of value to our end users," according to a report on VisualStudiomagazine.com.

A Cultural Change

It was a cultural change as much as, if not more than, a technology change. My colleague John K. Waters explained it this way in his report on Wang’s talk:

The new team structure now recognizes only two roles: program manager and engineer. The program manager is roughly the equivalent of a product owner in the Scrum process. Everyone else is an engineer, with no distinctions between developers and testers. Also, restructured: the teams themselves, which had operated in segregated environments: UI developers worked on the UI layer, for example, while database people worked on the database layer. The restructured teams now own the entire feature set from beginning to end, including the UI layer, the data layer, and the database itself, as well as installation, deployment, and quality. Even the workspace was reconfigured: individual offices were replaced by team rooms, where everyone works together, including the program managers.

So if you’re going to begin a career in DevOps, you have to have programming and testing skills, but mostly you have to give up on the idea of the lone coder sitting in a dark concrete block room working on an app. That is not what DevOps is about.

If you are a tester, you’re going to have to learn programming skills you may not have and vice versa if you’re a programmer. No one, least of all Wang, says this is easy.

"Developers traditionally make incredibly bad testers," Wang pointed out in his keynote. "And testers traditionally make very bad coders. So how did we do this? We trained our people and we required them to adapt...."

Considering the talent and brainpower of the average Microsoft employee, it is a gauge of how difficult this was that the attrition rate on Wang’s team was 20 percent. But on the positive side 80 percent adapted to being engineers who could go with the DevOps flow.

"It was incredibly painful," Wang recalled. "We suffered a lot of attrition from all sides -- management, developers, testers -- because the new way of looking at things and doing things was very different from the way we did things before. And we all know no one really likes change. But if there's one constant in our industry it's change."

The IBM Way

IBM has its own take on DevOps and even offers a free eBook, DevOps for Dummies, written by Sanjeev Sharma and Bernie Coyne. Sharma also has a series of video blogs on YouTube, including DevOps: Where to Start. This video is designed to provide an overview for folks who basically know what DevOps is but want to actually implement it in their organizations.

The IBM approach to the team concept in DevOps is different from how Wang and his colleagues at Microsoft do it. As Sharma explains Big Blue’s approach, the team members do have defined roles:

- Developer

- Build Engineer

- QA Team

- Integration Tester

- User Tester

- Operations

He considers the team members with different roles as stakeholders in the overall project who need to collaborate as software moves, for instance, from developers to testers to operations, which is basically the DevOps lifecycle. He acknowledges that there are going to be pain points, for example, testing becoming a bottleneck.

“You look at how you can address those,” Sharma explains. “How you can improve communications and build trust between these teams.”

As can be seen in the different approaches of two giants of the computing world, DevOps, which has only been around as a concept since 2007, is not a paradigm with hard and fast rules that can be employed by everybody everywhere.

For the individual going to work on a DevOps team, where to start may depend on whether your organization is taking an egalitarian approach in which you may have to do programming, testing and perhaps go get takeout pizza, so the individual skills required will vary. In a Microsoft-style organization, you may need to become a generalist with coding and testing skills. Following the IBM path, you might specialize in an area such as integration testing.

If no one in your organization has a DevOps background or skills, there are lots of vendors who are willing to help you. But since there are different approaches to DevOps, it will be important to make sure the selected vendor’s practices jibe with your organization’s culture.

Of course, one place to acquire skills is at VSLive! That’s where you can tap the brainpower of experts like Microsoft’s Abel Wang.

Posted by Richard Seeley on 06/07/2018

This blog started to answer the question: How do you get Agile certified?

But that turns out to be the easy question. A Google search turns up plenty of universities and training organizations willing to teach you so you can get certified for a price.

The Project Management Institute offers an Agile Certified Practitioner (ACP) certification program.

Villanova University offers education and certification programs including Certificate in Agile Management, which it states “is the only comprehensive Agile program offered 100% online by a top-tier university.” It also offers a Professional Certification in Agile & Scrum (PCAS).

So finding ways to get Agile credentials is a Google search away, but there are other questions including why go after these certificates?

Why Agile training?

Of course, most developers want to keep their skills current so they are in demand for top projects and top organizations. Perhaps the best bottom line answer comes from Mark J. Balbes, Ph.D., the Agile Architect at ADTmag.com. In Agile and CMMI: Tips from the Trenches, he explained why his company sought Capability Maturity Model Integration (CMMI) certification: “Frankly, it's for the money.” He explains that his “project shop” works on government contracts that increasingly required that credential, so it was a practical business decision.

Developers working in organizations or freelancing in the gig economy will probably understand that level of pragmatism.

Beyond the Business 101 reason for getting Agile certifications, Balbes is an advocate of embracing Agile and getting the training you need.

Thinking differently

In The Survivor's Guide to Agile, he suggests: “Learn what it means to truly be agile and start thinking about your work in those terms.” To learn to think in Agile terms, you’ll need training. Balbes advocates being aggressive in getting the training you need.

“Agile is not immediately intuitive to most people,” The Agile Architect explains. “It takes training and experience to be able to interpret the Agile Manifesto, the 12 Principles and the various practices. Change management is an important part of every successful agile transformation. While your company should be providing the appropriate training and mentoring, take charge of your own education. Read books. Read blogs. Practice techniques like Test Driven Development on your own.”

A key point that Balbes makes is that while learning about new Agile practices is important, unlearning old practices is equally important.

“This is perhaps the hardest thing to do,” he writes. “You already know techniques to address specific needs and situations. When these situations present themselves, it’s only natural to turn to the techniques you have used successfully in the past. However, many traditional techniques aren't aligned with core agile values.”

Suggested reading

Much of what needs to be unlearned is suggested on the first page of The Manifesto for Agile Software Development:

We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value:

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

That is, while there is value in the items on the right, we value the items on the left more.

To make sure you have the philosophy supporting these points, it is good to read the brief but deep 12 Principles behind the Agile Manifesto.

ADT’s Agile Architect also suggests that developers start their Agile journey by learning and practicing Test-Driven Development (TDD), which a detailed Wikipedia article defines as “a software development process that relies on the repetition of a very short development cycle: requirements are turned into very specific test cases, then the software is improved to pass the new tests, only. This is opposed to software development that allows software to be added that is not proven to meet requirements.”
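
As a small illustration of that cycle, the sketch below writes the test cases first and then just enough code to pass them. The slugify function and the tiny expectEqual helper are hypothetical, with the helper standing in for a real test framework's assertion.

```typescript
// Hypothetical helper standing in for a test framework's assertion.
function expectEqual(actual: string, expected: string): void {
  if (actual !== expected) {
    throw new Error(`expected "${expected}" but got "${actual}"`);
  }
}

// Step 1: the requirements, written as very specific test cases.
function runSlugifyTests(): void {
  expectEqual(slugify("Hello World"), "hello-world");
  expectEqual(slugify("  Agile  and   CMMI "), "agile-and-cmmi");
  expectEqual(slugify(""), "");
}

// Step 2: just enough code to make those tests pass, and no more.
function slugify(title: string): string {
  return title
    .trim()
    .toLowerCase()
    .split(/\s+/)
    .filter((word) => word.length > 0)
    .join("-");
}

runSlugifyTests();
```

In practice the tests would fail before step 2 exists. A new requirement, such as stripping punctuation, would start with another failing test case rather than with a change to slugify.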

Visual Studio resources

For Visual Studio developers, Microsoft provides Agile resources:

What is Agile? by Aaron Bjork, principal group program manager at Microsoft, who stresses that Agile is more about how you think about development than any specific set of processes:

“It’s important to understand that Agile is not a thing … you don’t do Agile. Rather, Agile is a mindset. A mindset that drives an approach to software development. There’s not one approach here that works for all situations, rather the term Agile has come to represent a variety of methods and practices that align with the value statements in the manifesto.”

Microsoft is advocating the team concept for its Agile Tools with tooling information for Visual Studio Team Services, which “provides you the tools you need to run your agile team.”

Posted by Richard Seeley on 05/11/2018

Transitioning to Angular 2 from Angular 1 (also known as AngularJS), the Google-supported open-source web application framework, is not so much about upgrading as moving to something different, according to experts.

The differences between the two frameworks were explained in a Visual Studio Magazine Q&A with Ted Neward, who is director of Developer Relations at Smartsheet.com and well-known as a presenter at Visual Studio Live!.

When asked about the challenges developers faced when moving from Angular 1 to Angular 2, Neward pointed first to the naming conventions that started with AngularJS, where the JS refers to its JavaScript front end for client-side web apps.

“The Angular team chose to rename the framework from AngularJS to Angular, meaning that Angular 1 should actually be AngularJS, and Angular 2 should actually be just plain ol' Angular,” Neward said. “That raises no end of confusion when speaking about the two frameworks, to be sure.”

Basically, Angular 2 is a complete rewrite of Angular 1, the framework formerly known as AngularJS.

It seems AngularJS had issues.

Ashish Bakshi explained in his Quora post, What is the difference between AngularJs and Angular 2?, that “… around year 2012–14 frameworks like ember.js and react.js (developed by Facebook) popped in with better benchmark results and performance, highlighting the AngularJS drawbacks to the developer community.”

As Bakshi relates this history, “… the angular team decided to create a new framework instead of upgrading AngularJS by incorporating all their hard learned lessons from AngularJS. Hence, Angular 2 was released in Sept. 2016 which is a complete re-write of AngularJS.”

Since the release of Angular 2, there have also been more traditional upgraded versions called Angular 4 and Angular 5. (Angular 3 didn’t make the release cut at some point in its development.) Basically Angular 2/4/5 are all best thought of as what Neward referred to as “plain ol' Angular.”

He said “… the naming change was appropriate, because in many ways Angular was a near-total rewrite of AngularJS, meaning that any AngularJS code will not be silently upgradable to Angular. They kept many of the same concepts, but sought to strengthen those concepts and make them more apparent and clear.”

While there has been resistance among AngularJS/Angular 1 coders to adopting the new, improved plain ol’ Angular, upgrading seems to be the wisest career choice.

In a blog titled Angular 2: Should You Upgrade?, Dave Ceddia offered his reasons, including:

- Leaving your software using the old version of a library is Just Not Done.

- Because the features are better

- Because Components are the way of the future and the future is awesome.

- I don’t want to fall behind.

- I don’t want to be stuck holding the bag (and 100k lines of code) when they deprecate the old one.

- If I don’t know the newest thing then no one will hire me.

From a practical I-need-a-job standpoint, the last bullet may be the strongest reason to move into the new Angular world.

Also the component approach in plain ol’ Angular is a big deal.

Neward cited it as his favorite part of the re-written framework: “Component-based design is like object design, but with thicker skin, meaning we treat components in a more opaque fashion, making them more accessible and usable for reuse purposes, among other things. Components were what enabled the Golden Age of GUI Builders (the days of VisualBasic, Delphi, PowerBuilder and the like), and there's solid reasons to imagine that something similar will emerge out of this approach for the web -- which in turn means that developers can deliver useful and powerful applications for the web so much faster than before.”

When Neward was asked for tips that would help developers transition to the new version of Angular, he listed:

- Bookmark the Angular CheatSheet on the Angular Web site.

- Learn and master the TypeScript language.

He also pointed to Visual Studio Live! sessions on Angular.

At the upcoming VSLive! in Austin, Texas, April 30 - May 4, sessions include:

- “Angular 101” with Deborah Kurata, Microsoft MVP and Google Developer Expert

- “Fast Focus: Living Happily Together: Visual Studio and Angular” with Brian Noyes, Solliance CTO, Microsoft Regional Director, MVP

- “Securing Angular Apps” with Brian Noyes

Posted by Richard Seeley on 04/24/2018

Whenever an app is running, you need to know what’s happening behind the scenes. And whenever an app crashes or otherwise has trouble, you definitely want to know what’s going on. Logging is the simple, yet critical, way to ensure your apps are doing what they’re supposed to be doing. Logging helps you detect and identify any issues.

Now that ASP.NET Core is open source and cross-platform, building in and linking logging functions is more straightforward than ever. The logging API in ASP.NET Core supports a whole bunch of logging providers. You can send log details to one location or several locations. You can also connect to a third-party logger. And it’s straightforward. Notice I didn’t say easy, but it’s certainly straightforward. Thankfully, as is often the case, there are several blogs ready to help out.

Once again, Microsoft’s extensive knowledge base comes to the rescue. While the Microsoft knowledge base, tutorials, and other blog posts are rarely the only source of helpful tips, they’re almost always the best place to start. This guide to logging in ASP.NET Core begins with a brief definition:

“ASP.NET Core supports a logging API that works with a variety of logging providers. Built-in providers let you send logs to one or more destinations, and you can plug in a third-party logging framework. This article shows how to use the built-in logging API and providers in your code.

How to create logs

To create logs, get an ILogger object from the dependency injection container. This example creates logs with the TodoController class as the category. Categories are explained later in this article.

ASP.NET Core doesn't provide async logger methods because logging should be so fast that it isn't worth the cost of using async. If you're in a situation where that's not true, consider changing the way you log. If your data store is slow, write the log messages to a fast store first, then move them to a slow store later. For example, log to a message queue that's read and persisted to slow storage by another process.

How to add providers

A logging provider takes the messages that you create with an ILogger object and displays or stores them. For example, the Console provider displays messages on the console, and the Azure App Service provider can store them in Azure blob storage. To use a provider, call the provider's Add extension method in Program.cs.”
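
The two ideas in that excerpt, a logger tied to a category and providers that decide where messages go, can be sketched as follows. This is TypeScript, not the ASP.NET Core logging API: LogProvider, ConsoleLogProvider, MemoryProvider and CategoryLogger are hypothetical names used only for illustration, and the in-memory provider merely stands in for a slower store such as a file or blob storage.

```typescript
// Hypothetical names throughout; this mimics the shape of the idea,
// not the actual Microsoft.Extensions.Logging API.
type Level = "Information" | "Warning" | "Error";

interface LogProvider {
  write(level: Level, category: string, message: string): void;
}

// Displays messages on the console.
class ConsoleLogProvider implements LogProvider {
  write(level: Level, category: string, message: string): void {
    console.log(`${level}: ${category} - ${message}`);
  }
}

// Stores messages in memory, standing in for a file or blob store.
class MemoryProvider implements LogProvider {
  readonly lines: string[] = [];
  write(level: Level, category: string, message: string): void {
    this.lines.push(`${level}: ${category} - ${message}`);
  }
}

// A logger is bound to one category and fans each message out to every provider.
class CategoryLogger {
  constructor(
    private readonly category: string,
    private readonly providers: readonly LogProvider[],
  ) {}

  log(level: Level, message: string): void {
    for (const provider of this.providers) {
      provider.write(level, this.category, message);
    }
  }
}

const memory = new MemoryProvider();
const todoLogger = new CategoryLogger("TodoController", [new ConsoleLogProvider(), memory]);
todoLogger.log("Information", "Getting item 42");
todoLogger.log("Warning", "Item 42 not found");
```

The code that logs never needs to know how many providers are attached. Adding a destination means adding a provider, not changing the call sites.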

This knowledge base entry also illustrates each instructional narrative with code snippets. After showing you the basics of how to create a log and add a provider, this tutorial post goes on to cover:

- Sample logging output

- NuGet packages

- Log category

- Log level

- Log event ID

- Log message template

- Logging exceptions

- Log filtering

- Log scopes

- Built-in logging providers

- Third-party logging providers

- Azure log streaming

This tutorial takes you through the whole process of setting up logging for all different versions of ASP.NET Core. It also provides code snippets and listings of third-party loggers you can connect with ASP.NET Core.

“ASP.NET Core framework provides built-in supports for logging. However, we can also use third party logging provider easily in ASP.NET Core application. Before we see how to implement logging in ASP.NET Core application, let's understand the important logging interfaces and classes available in ASP.NET Core framework. The following are built-in interfaces and classes available for logging under Microsoft.Extensions.Logging namespace:

- ILoggerFactory

- ILoggerProvider

- ILogger

- LoggerFactory

ASP.NET Core framework includes built-in LoggerFactory class that implements ILoggerFactory interface. We can use it to add an instance of type ILoggerProvider and to retrieve ILogger instance for the specified category. ASP.NET Core runtime creates an instance of LoggerFactory class and registers it for ILoggerFactory with the built-in IoC container when the application starts. Thus, we can use ILoggerFactory interface anywhere in your application. The IoC container will pass an instance of LoggerFactory to your application wherever it encounters ILoggerFactory type.”
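
The factory idea described in that passage, one object that accepts providers and hands out loggers by category, might look like this in outline. Again this is a TypeScript sketch with hypothetical names (Sink, SimpleLogger, SimpleLoggerFactory), not the real LoggerFactory class, and it makes no claim about how the real class is implemented.

```typescript
// Hypothetical sketch of a logger factory; not the real LoggerFactory.
type Sink = (line: string) => void;

class SimpleLogger {
  constructor(
    private readonly category: string,
    private readonly sinks: readonly Sink[],
  ) {}

  info(message: string): void {
    for (const sink of this.sinks) {
      sink(`[${this.category}] ${message}`);
    }
  }
}

class SimpleLoggerFactory {
  private readonly sinks: Sink[] = [];
  private readonly loggers = new Map<string, SimpleLogger>();

  // Add a destination. Loggers created earlier see it too,
  // because every logger shares this same array.
  addSink(sink: Sink): void {
    this.sinks.push(sink);
  }

  // Return the logger for a category, creating it on first request.
  createLogger(category: string): SimpleLogger {
    let logger = this.loggers.get(category);
    if (logger === undefined) {
      logger = new SimpleLogger(category, this.sinks);
      this.loggers.set(category, logger);
    }
    return logger;
  }
}

const captured: string[] = [];
const loggerFactory = new SimpleLoggerFactory();
loggerFactory.addSink((line) => captured.push(line));

const homeLogger = loggerFactory.createLogger("HomeController");
homeLogger.info("Index page requested");
```

In an application the factory itself would be a registered service, so any class that asks for it in its constructor receives the same instance.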

You’ll find this tutorial requires some familiarity with ASP.NET and ASP.NET Core, but does a comprehensive job of walking you through the process.

Blogger Nicholas Blumhardt provides a clean, well-organized tutorial on incorporating Serilog with ASP.NET Core. He also supports his statements with code snippets. “You don’t need anything special to use Serilog with .NET Core: the Serilog package works the same way across the .NET Framework and .NET Core on Windows, macOS and Linux. If you are writing .NET Core web apps with ASP.NET, you’ll want to plug into the Microsoft.Extensions.Logging subsystem to receive events from the framework: unhandled errors, diagnostic info from the request processing pipeline, events from EF, and so on.

In ASP.NET Core 2.0, the default logging provider has gained some features, like its own level control and filtering. This is good thing for the out-of-the-box experience, but if you use Serilog, you won’t want two sets of configuration to keep in sync or two logging pipelines running with subtle differences between them.”

Posted by Lafe Low on 04/13/2018

Hey there everyone, Lafe here. And once again, every month or so my fellow Visual Studio Live! blogger Rich Seeley will present a more technical approach to these blog posts. His latest post covers some of the tools out there that make debugging a bit easier for JavaScript devs.

Every JavaScript programmer would be happy to learn that debugging is being made easier.

Not to get carried away, but improvements are happening. Not at warp speed but not at glacial speeds either.

Tools are available in almost every web browser. Browsers have innovated so that JavaScript programmers do not have to rely on the old standby, console.log, for debugging.

Google DevTools

"The first way that most developers learn how to debug is to sprinkle console.log commands throughout their code, in order to infer where the code is going wrong," states the introduction to a tutorial on Google DevTools.

"If you’re still using console.log to find and fix JavaScript issues you’re probably spending more time debugging than you need to," argues Kayce Basques, technical writer for Chrome DevTools, in a YouTube video.

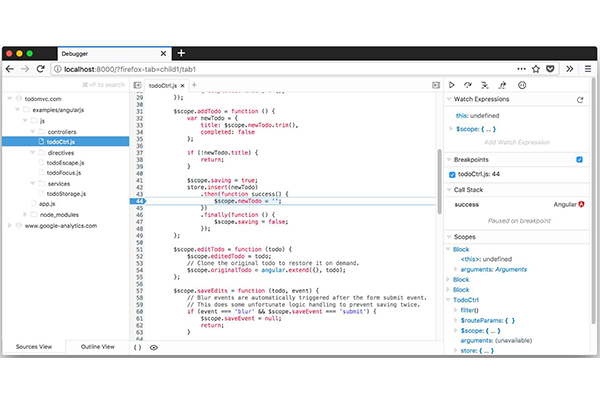

In the tutorial he demonstrates how to set breakpoints in DevTools, which Google says "lets you pause in the middle of a page's execution and step through the code one line at a time. While you're paused you can inspect (and even change) the current values of variables at that point in time. You may find that this workflow helps you debug issues much faster than the console.log method."

Besides the video, Google provides step-by-step text instructions illustrated with screenshots to help you experiment with the Google way of debugging.
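
As a rough illustration of the difference, here is a minimal TypeScript sketch. The function name and the string inputs are hypothetical, and the `debugger` statement is used here as an in-code stand-in for a breakpoint set in the DevTools UI; it only pauses when a debugger is attached and is otherwise ignored.

```typescript
// Hypothetical example: add two values that arrive as strings, e.g. from form inputs.
function addInputs(first: string, second: string): number {
  // With DevTools (or another debugger) attached, execution pauses on this line,
  // so `first` and `second` can be inspected directly instead of being logged.
  debugger;
  // Convert before adding; concatenating the raw strings "5" and "1" would give "51".
  return Number(first) + Number(second);
}

const total = addInputs("5", "1");
console.log(total); // prints 6
```

While paused, the current values can be read or changed in the debugger, which is the workflow the tutorial recommends over scattering console.log calls.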

Firefox Debugging Tool

Not to be outdone by Google, Firefox, the open-source web browser developed by the Mozilla Foundation and its subsidiary, Mozilla Corp., also offers a debugging tool for JavaScript. A video on the Mozilla site demonstrates how the Firefox JavaScript Debugger allows devs to step through their JavaScript code to "examine or modify its state to help track down bugs."

The text accompanying the video about the JavaScript Debugger explains: "You can use it to debug code running locally in Firefox or running remotely, for example on an Android device running Firefox for Android."

The tool is embedded in Firefox, but developers who want to run it as a standalone web application for use on code running in other browsers can find out how to do that at the GitHub repository for the tool.

iPhone and Safari Debugging

At a recent Visual Studio Live! conference, Microsoft's Matthew Soucoup demonstrated pairing his Visual Studio project to an iPhone for live debugging, as reported in a recent Visual Studio Magazine article. Several people in the audience asked whether you still need a Mac computer for iOS development. The answer was yes, although there appear to be plans to make it all possible from a Windows machine.

In a March 2 Lifewire blog, Scott Orgera explains how you can debug JavaScript using your iPhone depending on the iOS version you have: “If you have an iPhone running an early version of iOS [prior to iOS 6], you can access the Debug Console through Settings > Safari > Developer > Debug Console. Whenever Safari on the iPhone detects CSS, HTML, and JavaScript errors, details of each are displayed in the debugger.” More recent versions of iOS use Web Inspector, Orgera explains. You can activate it with your iPhone but you have to connect to a Mac computer. Orgera’s blog offers instructions with screenshots.

All the Browsers’ Debuggers

All modern browsers have JavaScript debugging capabilities and you can usually access them by simply pressing F12, notes the JavaScript Debugging page on W3Schools.com. This page shows how to use the console.log method and provides instructions beyond F12 for activating JavaScript debugging in:

- Internet Explorer

- Opera

- Chrome

- Firefox

- Safari

Third Party Tools

Beyond what is available for free from your favorite browser maker, there are commercial tools available for debugging JavaScript.

The website for Raygun Inc., based in Seattle, WA, says it has a tool that will “Detect, diagnose and destroy JavaScript errors that are affecting your customers.” The software company offers a 14-day free trial.

Airbrake says its tool allows developers to “Code More, Debug Less With JavaScript Error Tracking.” It is available for a 30-day free trial.

Quick Fixes in Visual Studio Code 1.20

There’s also been news in early 2018 of enhancements to help make JavaScript bug fixes easier.

Take for example the January Visual Studio Code update that makes it easier to quickly fix errors both in JavaScript and TypeScript.

In a Visual Studio Magazine article about time-saving features in Microsoft’s updated VS Code 1.20, David Ramel writes: “A new Quick Fix lets developers select a flagged error in source code -- such as a method that has been declared but not yet implemented -- and fix it via options provided in the editor's lightbulb icon. If the same error exists in multiple locations in a file, devs can use a new ‘Fix all in file’ option to address them in one action.”
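
To make the quoted scenario concrete, here is a small TypeScript sketch of a method that is declared but not yet implemented. The `Logger` and `ConsoleLogger` names are hypothetical, and the stub body is only an illustration of the kind of placeholder such a fix could insert, not the exact text VS Code 1.20 generates.

```typescript
// Hypothetical names throughout: Logger and ConsoleLogger are for illustration only.
interface Logger {
  info(message: string): void;
  warn(message: string): void;
}

class ConsoleLogger implements Logger {
  info(message: string): void {
    console.log(`INFO: ${message}`);
  }

  // If warn() were omitted, the editor would flag the class for not fully
  // implementing Logger. A placeholder of roughly this shape satisfies the
  // compiler until real logic is written.
  warn(message: string): void {
    throw new Error(`warn("${message}") is not implemented yet.`);
  }
}

new ConsoleLogger().info("ready"); // prints "INFO: ready"
```

The placeholder throws on purpose, so a forgotten stub fails loudly at runtime rather than silently doing nothing.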

More help for JavaScript debugging is likely on the horizon. In the meantime, remember, if all else fails press F12.

Posted by Lafe Low on 03/20/2018

Xamarin continues to be the hot toolset for developing cross-platform mobile apps. Xamarin, the company, was founded in 2011 by the engineers who developed Mono. Microsoft swooped in and acquired Xamarin in February 2016. Now it operates in lock-step with Visual Studio as the preferred dev environment for mobile apps.

As you would expect, Microsoft provides dazzlingly clear step-by-step tutorials on its newly acquired mobile app cross-platform development environment. “Once you've done the steps in Setup and install and Verify your Xamarin environment, this walkthrough shows you how to build a basic Xamarin app (shown below) with native UI layers. With native UI, shared code resides in a portable class library (PCL) and the individual platform projects contain the UI definitions.

You'll do these things to build it:

- Set up your solution

- Write shared data service code

- Design UI for Android

- Design UI for Windows Phone

These steps create a Xamarin solution with native UI that contains a PCL for shared code and two added NuGet packages.

- In Visual Studio, create a new Blank App (Native Portable) solution and name it WeatherApp. You can find this template most easily by entering Native Portable into the search field.

- After clicking OK to create the solution, you’ll have a number of individual projects.

- Add the Newtonsoft.Json and NuGet package to the PCL project, which you’ll use to process information retrieved from a weather data service.”

This example, which has you build a weather app, goes on to provide code snippets and highly detailed step-by-step guidance. It is one of the better places to start if you’re launching into the world of Xamarin coding.

And in this corner, you’ll find a refreshingly diverse and encompassing series of Xamarin tutorials. They cover a range of topics, including Xamarin.Forms, troubleshooting issues, and various cross-platform app development subjects. It’s easy to find what you need. Individual tutorials are presented in a well-organized table of contents. Recent posts include:

Certificate Pinning in Xamarin.Forms, by Alessandro Del Sole: "Securing communications between applications and services is extremely important, and mobile apps are no exception. Even if you use an encrypted channel based on HTTPS, you should never completely trust the identity of the target. For example, an attacker could easily discover the URL your application is pointing to, and put a fake certificate in the middle of the communication between an application and the server, thus intercepting the communication. This is extremely dangerous especially if the application handles sensitive data. In order to avoid this, a technique called certificate pinning can be used to dramatically reduce the risk of this kind of man-in-the-middle attack. This article describes how to implement certificate pinning in Xamarin.Forms, making your mobile apps more secure."

Xamarin.Forms Problem “Could Not Connect to Debugger” Solution, by Munib Ch: "There are some issues traced in the Xamarin 15.2 release. One of the major issues faced by developers is a debugging error.

- The app is deployed

- The app starts on the emulator

- It immediately stops

- You get one or more messages in your output window

This issue is faced by many of the developers who are new in this field. This issue was first faced in Visual Studio 2015. This issue is resolved by ReSharper but it is costly. So I will give you the simple solution for that issue."

Xamarin.Forms—Working with Application Storage, by Delpin Susai Raj: "This article covers working with Application Storage to save app settings on the app in the Xamarin forms App. Xamarin.Forms code runs on multiple platforms -- each of which has its own filesystem. This means that reading and writing files are the most easily done tasks using native file APIs on each platform. Alternatively, embedded resources are also a simpler solution to distribute the data files with an app."

Introduction to Xamarin Forms ReturnType Key, by Sumit Singh Sisodia: "Today, I would like to tell you about Entry Key ReturnType. I'll tell you how to change ReturnKeyType keys like Search, Done, Next etc. In this article I am using Xamarin Forms PORTABLE and XAML.

- In iOS: We have UITextField which has a ReturnKeyType property that you can set to a pre-assigned list.

- In Android: We have EntryEditText which has an ImeOptions property which helps in changing the EditText Key for Keyboard button."

Why Choose Xamarin for Cross Platform Mobile Apps with Visual Studio? by Mukesh Kumar: "Nowadays, everyone wants to use mobile apps rather than websites because they are easy to use with your smartphones. But, do you think mobile apps development is easy? No. Mobile app development is not an easy task due to the availability of different platforms like iOS, Android, and Windows. When we create a website, it is accessible from any device from an Android phone to your laptop. But when you create an App, it is not always accessible from all platforms."

This blog presents another “table of contents” type of listing of various posts covering various Xamarin development topics. As the title says, some are more basic and some more advanced. Shailendra Chauhan is clearly their Xamarin expert, being the author of all these posts. Recent posts include:

Xamarin with Visual Studio 2017—Build native cross-platform apps: "Along with the launch of Visual Studio 2017, Microsoft has released many fresh and exciting features for mobile developers to develop cross-platform mobile apps using Xamarin. Visual Studio 2017 will help you to build better native cross-platform apps in less time as compared to Visual Studio 2015."

Xamarin Forms Fundamentals: "Xamarin Forms allows developers to build cross-platform mobile app using the common UI pages, layouts, views, controls, and design patterns. At runtime, each Xamarin Forms UI element will be mapped to its native equivalent element in each platform, so that truly native UI can be built and rendered."

Understanding Xamarin Forms—Build Native Cross Platform Mobile Apps: "Xamarin Forms is a part of Xamarin family to build truly native apps for iOS, Android & Windows from a single and shared code base using C#. Xamarin.Forms offers the UI controls/views which you can use to develop UI. These UI controls/view at run-time are converted to platform-specific UI controls."

Understanding Xamarin iOS—Build Native iOS App: "Xamarin.iOS is a part of Xamarin family to build native iOS app with C# and Xamarin. Xamarin.iOS offers the same UI controls that are available in Objective-C or Swift language and Xcode."

Understanding Xamarin Android—Build Native Android App: "Xamarin.Android is a part of Xamarin family to build native Android app with C# and Xamarin. Xamarin.Android provides the same UI controls as you have in Android with Java."

Understanding Xamarin—A Cross Platform Solution: "Xamarin is a free open-source framework to build truly native cross-platform mobile apps using C# .NET for iOS, Android or Windows. It runs on Mono and .NET to build apps with native performance and native UI. Xamarin allows you to develop native apps using C# language and platform specific tools/SDKs and share the same code across multiple platforms like iOS, Android or Windows."

Xamarin Apps vs. Native Apps vs. Hybrid Apps: "Today we are living in the age of mobile phones. Every one using mobile phones for various daily activities like chatting, sharing, shopping, and so on. Mobile apps have changed the way of browsing the web and doing online activities."

Posted by Lafe Low on 03/15/2018

Microservices design patterns are software design patterns that generate reusable, autonomous services. The goal for developers using microservices is to accelerate application releases. By using microservices, developers can deploy each individual microservice independently, if desired.

These patterns have their strengths and drawbacks (thankfully more strengths), and there are many more cases where they are appropriate to use than not. Here are some listings of top microservices design patterns and their use cases that we found in the blog-iverse.

This post is a nice fundamental rundown of some of the basic microservice design patterns. It’s a little older, but still germane. Post author Arun Gupta writes, "The main characteristics of a microservices-based application are defined in Microservices, Monoliths, and NoOps. They are functional decomposition or domain-driven design, well-defined interfaces, explicitly published interface, single responsibility principle, and potentially polyglot. Each service is fully autonomous and full-stack. Thus changing a service implementation has no impact to other services as they communicate using well-defined interfaces. There are several advantages of such an application, but it’s not a free lunch and requires a significant effort in NoOps."

Then Gupta lists several microservices design patterns he has found tried and true over the years:

"Aggregator Microservice Design Pattern: The first, and probably the most common, is the aggregator microservice design pattern. In its simplest form, Aggregator would be a simple web page that invokes multiple services to achieve the functionality required by the application.

Proxy Microservice Design Pattern: Proxy microservice design pattern is a variation of Aggregator. In this case, no aggregation needs to happen on the client but a different microservice may be invoked based upon the business need. Just like Aggregator, Proxy can scale independently on X-axis and Z-axis as well. You may like to do this where each individual service need not be exposed to the consumer and should instead go through an interface.

Chained Microservice Design Pattern: Chained microservice design pattern produce a single consolidated response to the request. In this case, the request from the client is received by Service A, which is then communicating with Service B, which in turn may be communicating with Service C. All the services are likely using a synchronous HTTP request/response messaging.

Branch Microservice Design Pattern: Branch microservice design pattern extends Aggregator design pattern and allows simultaneous response processing from two, likely mutually exclusive, chains of microservices. This pattern can also be used to call different chains, or a single chain, based upon the business needs.

Shared Data Microservice Design Pattern: One of the design principles of microservice is autonomy. That means the service is full-stack and has control of all the components – UI, middleware, persistence, transaction. This allows the service to be polyglot, and use the right tool for the right job. For example, if a NoSQL data store can be used if that is more appropriate instead of jamming that data in a SQL database.

Asynchronous Messaging Microservice Design Pattern: While REST design pattern is quite prevalent, and well understood, but it has the limitation of being synchronous, and thus blocking. Asynchrony can be achieved but that is done in an application specific way. Some microservice architectures may elect to use message queues instead of REST request/response because of that."

He goes into greater detail in his post. Check it out if you’re looking for a good primer on microservices design patterns.
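
To give a feel for the first pattern in that list, here is a minimal TypeScript sketch of an aggregator. It assumes two hypothetical services, `fetchProfile` and `fetchOrders`, replaced here by in-memory stand-ins; none of these names come from Gupta's post, and a real aggregator would call its services over the network.

```typescript
// Hypothetical service shapes; a real aggregator would call these over the network.
type Profile = { name: string };
type Order = { id: number; total: number };

type Services = {
  fetchProfile: (userId: string) => Promise<Profile>;
  fetchOrders: (userId: string) => Promise<Order[]>;
};

// The aggregator invokes each service and merges the results into one response.
async function aggregateAccountPage(userId: string, services: Services) {
  const [profile, orders] = await Promise.all([
    services.fetchProfile(userId),
    services.fetchOrders(userId),
  ]);
  const spent = orders.reduce((sum, order) => sum + order.total, 0);
  return { name: profile.name, orderCount: orders.length, spent };
}

// In-memory stand-ins so the sketch runs without any network access.
const fakeServices: Services = {
  fetchProfile: async () => ({ name: "Ada" }),
  fetchOrders: async () => [
    { id: 1, total: 20 },
    { id: 2, total: 22 },
  ],
};

aggregateAccountPage("user-1", fakeServices).then((page) => console.log(page));
```

Because the services are passed in rather than hard-coded, each one can be swapped or scaled on its own without changing the aggregator.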

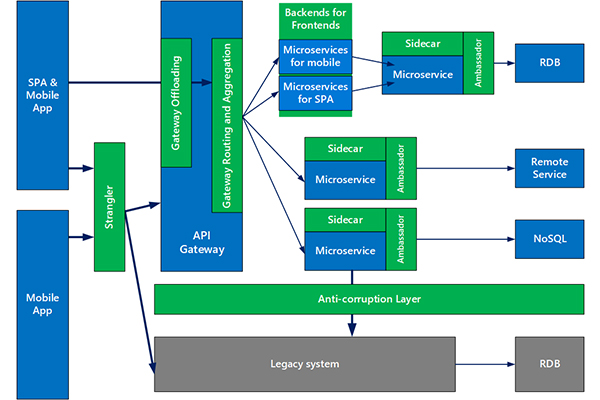

Naturally the Azure team at Microsoft has just published nine new microservices design patterns. Mike Wasson, lead content developer for AzureCAT patterns and practices, describes the new offerings from the Azure team in this recent post.

"The AzureCAT patterns & practices team has published nine new design patterns on the Azure Architecture Center. These nine patterns are particularly useful when designing and implementing microservices. The increased interest in microservices within the industry was the motivation for documenting these patterns. For each pattern, we describe the problem, the solution, when to use the pattern, and implementation considerations. Here are the new patterns:

Ambassador can be used to offload common client connectivity tasks such as monitoring, logging, routing, and security (such as TLS) in a language agnostic way.

Anti-corruption layer implements a façade between new and legacy applications, to ensure that the design of a new application is not limited by dependencies on legacy systems.

Backends for Frontends creates separate backend services for different types of clients, such as desktop and mobile. That way, a single backend service doesn't need to handle the conflicting requirements of various client types. This pattern can help keep each microservice simple, by separating client-specific concerns.

Bulkhead isolates critical resources, such as connection pool, memory, and CPU, for each workload or service. By using bulkheads, a single workload (or service) can’t consume all of the resources, starving others. This pattern increases the resiliency of the system by preventing cascading failures caused by one service.

Gateway Aggregation aggregates requests to multiple individual microservices into a single request, reducing chattiness between consumers and services.

Gateway Offloading enables each microservice to offload shared service functionality, such as the use of SSL certificates, to an API gateway.

Gateway Routing routes requests to multiple microservices using a single endpoint, so that consumers don't need to manage many separate endpoints.

Sidecar deploys helper components of an application as a separate container or process to provide isolation and encapsulation.

Strangler supports incremental migration by gradually replacing specific pieces of functionality with new services."
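
As one illustration from this list, Gateway Routing can be sketched in a few lines of TypeScript. The route prefixes and backend addresses below are made up for the example, and the sketch only resolves which backend a request would go to; it is not how any particular API gateway product works.

```typescript
// Hypothetical route table: the path prefixes and backend addresses are made up.
const routes: Array<{ prefix: string; backend: string }> = [
  { prefix: "/orders", backend: "http://orders.internal" },
  { prefix: "/catalog", backend: "http://catalog.internal" },
];

// Resolve the single public path a consumer calls to the backend URL behind it.
function resolveBackend(path: string): string | undefined {
  const match = routes.find(
    (route) => path === route.prefix || path.startsWith(route.prefix + "/"),
  );
  return match ? match.backend + path : undefined;
}

console.log(resolveBackend("/orders/42")); // prints "http://orders.internal/orders/42"
console.log(resolveBackend("/unknown")); // prints undefined
```

Consumers only ever see the gateway's endpoint, so backends can be moved or split by editing the route table.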

Wasson concludes with his own take on the whys and hows of microservices design patterns. "The goal of microservices is to increase the velocity of application releases, by decomposing the application into small autonomous services that can be deployed independently. A microservices architecture also brings some challenges, and these patterns can help mitigate these challenges."

Posted by Lafe Low on 02/28/2018

Hey there everyone, Lafe here again. And once again, every month or so my fellow Visual Studio Live! blogger Rich Seeley will present a more technical approach to these blog posts. His latest post details some of the clamoring and confusion going on around C#, most notably that C# fans are being left out in the cold when it comes to AI.

What's up with C#?

In a word: Lots.

There's been plenty of news over on https://visualstudiomagazine.com/home.aspx.

Some of the news has been about new features for C#. But one of the big stories is about C# coders being left out of Microsoft’s artificial intelligence game. In ".NET Coders Clamor for C# Support in Microsoft's CNTK AI Toolkit," David Ramel, editor of Visual Studio Magazine, uncovered unrest in posts in the Issues section of the GitHub repository for the Microsoft Cognitive Toolkit (CNTK), an open source toolkit for commercial-grade distributed deep learning.

While CNTK supports Python, the current AI darling, as well as BrainScript, .NET developers are wondering why more traditional .NET languages like C#, Visual Basic and F# are not included.

Here's some of what Ramel found in posts on the GitHub site where a developer with the handle nikosdim1 wrote a post titled "C# and .NET support barking," highlighting the lack of C# support:

"Strangely, though, it seems that we have to abandon C#/VB/F# and learn python because CNTK is more supported in this language (OK C++ too but this is not a 'clean' .net language) maybe because other frameworks rely more on Python."

In the opinion of this poster it seems .NET developers are being told not to use C# or Visual Basic or F# because Microsoft doesn’t seem to fully support them.

Another developer posted: "I'm eager to finally work with deep learning on Windows with C# instead of on Linux with Python."

A poster who appeared to be with Microsoft tried to reassure the developers that support for C# and the other traditional languages is in the works but added there is no timeframe.

Microsoft is famously closemouthed about these issues. But when news breaks, you’ll find it on VisualStudioMagazine.com.

Better news for C# developers is Microsoft’s commitment to speeding updates.

In "Microsoft Quickens C# Release Cadence, Unveils v7.1," Ramel told readers: "Mads Torgersen, lead designer for C#, said the team is moving to 'point releases' to keep up with associated tooling -- like Visual Studio -- and the underlying .NET Framework itself that are shipped more frequently. This, he said, brings new features to developers in a trickle so they can take advantage of them sooner."

In January, Amazon Web Services also had good news for C# developers, as reported in "AWS Cloud Adds .NET Core 2.0 Support for C# Coding of Lambda Functions." AWS announced support for .NET developers using C# to write Lambda functions “while leveraging .NET Core 2.0 libraries.” Lambda is the foundation of Amazon's “serverless computing” service.

To learn more about the latest features in C#, read this Visual Studio Magazine article about a presentation at a recent Visual Studio Live! conference with Microsoft's Adam Tuliper. C# developers with an interest in tuples will find a lot here. It's the next best thing to attending Visual Studio Live!, where you would have actually had a chance to chat with Tuliper, as is the case with all of our presenters.

If you are really looking for a deep dive into C#, go to "Analysing C# code on GitHub with BigQuery" by London-based Microsoft MVP Matt Warren. As he explains: "...in this post I am going to be looking at all the C# source code on GitHub and what we can find out from it." He found answers to both controversial and more prosaic C# questions ranging from "Tabs or Spaces?" to "How many lines of code (LOC) are in a typical C# file?" If you're curious about all things C#, this is a great read.

Want to keep up with C# happenings and do more than just read about it but possibly make suggestions for feature and bug fixes? Visit GitHub's C# Language Design page, "the official repo for C# language design." It welcomes visitors and explains: "This is where new C# language features are developed, adopted and specified. C# is designed by the C# Language Design Team (LDT) in close coordination with the Roslyn project, which implements the language."

Among the things you'll find on the site are:

- Active C# language feature proposals

- C# language design meetings

- C# language specifications

- Summary of the language version history

For those who want to get involved, the Design Team suggests: "If you discover bugs or deficiencies in the above, please leave an issue to raise them, or even better: a pull request to fix them."

Finally, here's a question where there doesn't seem to be an answer. C# predates Twitter with its famous hashtags. But in the 21st century wouldn’t it be easier to rename the language Csharp since that's what you have to do when you post to Twitter or create a URL on the web?

Posted by Lafe Low on 02/15/2018